Fit ebpmf to the full SLA data with non-negative constraints (new version with a different baseline)

DongyueXie

2023-08-06

Last updated: 2023-08-07

Checks: 7 passed, 0 failed

Knit directory: gsmash/

This reproducible R Markdown analysis was created with workflowr (version 1.6.2). The Checks tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

Great! Since the R Markdown file has been committed to the Git repository, you know the exact version of the code that produced these results.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproducibility it’s best to always run the code in an empty environment.

The command set.seed(20220606) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great job! Using relative paths to the files within your workflowr project makes it easier to run your code on other machines.

Great! You are using Git for version control. Tracking code development and connecting the code version to the results is critical for reproducibility.

The results in this page were generated with repository version d0c8224. See the Past versions tab to see a history of the changes made to the R Markdown and HTML files.

Note that you need to be careful to ensure that all relevant files for the analysis have been committed to Git prior to generating the results (you can use wflow_publish or wflow_git_commit). workflowr only checks the R Markdown file, but you know if there are other scripts or data files that it depends on. Below is the status of the Git repository when the results were generated:

Ignored files:

Ignored: .Rhistory

Ignored: .Rproj.user/

Untracked files:

Untracked: analysis/GO_ORA_montoro.Rmd

Untracked: analysis/GO_ORA_pbmc_purified.Rmd

Untracked: analysis/fit_ebpmf_sla_2000.Rmd

Untracked: analysis/poisson_deviance.Rmd

Untracked: analysis/sla_data.Rmd

Untracked: chipexo_rep1_reverse.rds

Untracked: data/Citation.RData

Untracked: data/SLA/

Untracked: data/abstract.txt

Untracked: data/abstract.vocab.txt

Untracked: data/ap.txt

Untracked: data/ap.vocab.txt

Untracked: data/sla_2000.rds

Untracked: data/sla_full.rds

Untracked: data/text.R

Untracked: data/tpm3.rds

Untracked: output/driving_gene_pbmc.rds

Untracked: output/pbmc_gsea.rds

Untracked: output/plots/

Untracked: output/tpm3_fit_fasttopics.rds

Untracked: output/tpm3_fit_stm.rds

Untracked: output/tpm3_fit_stm_slow.rds

Untracked: sla.rds

Unstaged changes:

Modified: analysis/PMF_splitting.Rmd

Modified: analysis/fit_ebpmf_sla.Rmd

Modified: code/poisson_STM/structure_plot.R

Modified: code/poisson_mean/pois_log_normal_mle.R

Note that any generated files, e.g. HTML, png, CSS, etc., are not included in this status report because it is ok for generated content to have uncommitted changes.

These are the previous versions of the repository in which changes were made to the R Markdown (analysis/fit_ebpmf_sla_full_nonneg2.Rmd) and HTML (docs/fit_ebpmf_sla_full_nonneg2.html) files. If you’ve configured a remote Git repository (see ?wflow_git_remote), click on the hyperlinks in the table below to view the files as they were in that past version.

| File | Version | Author | Date | Message |
|---|---|---|---|---|
| Rmd | d0c8224 | DongyueXie | 2023-08-07 | wflow_publish(c("analysis/fit_ebpmf_sla_nonneg.Rmd", "analysis/fit_ebpmf_sla_full_nonneg.Rmd", |

Introduction

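Previously, I filtered out words that appear in fewer than 5 documents, which left around 2000 words.

Here, I filter out words that appear in fewer than 3 documents. Combined with keeping only the 60% of documents with the most words (see Data filtering below), this leaves 3034 words: the fitted matrix is 1924 x 3034.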
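The two filters (keep the 60% of documents with the largest word counts, then drop words that occur in too few of the retained documents) can be sketched on a small simulated count matrix. `filter_counts`, its arguments, and the toy data are hypothetical and only for illustration. The sketch assumes base R plus the Matrix package, which is already loaded below. Because the rule is "document frequency >= `min_docs`", a threshold of 3 can never keep fewer words than a threshold of 5.

```r
library(Matrix)

# Hypothetical helper: keep the longest documents, then keep words that
# appear in at least `min_docs` of the retained documents.
filter_counts = function(X, doc_frac = 0.6, min_docs = 3) {
  n_keep = round(nrow(X) * doc_frac)
  # documents ordered by total word count, largest first
  doc_to_use = order(rowSums(X), decreasing = TRUE)[seq_len(n_keep)]
  X = X[doc_to_use, , drop = FALSE]
  # document frequency: number of retained documents containing each word
  word_to_use = which(colSums(X > 0) >= min_docs)
  list(mat = X[, word_to_use, drop = FALSE], docs = doc_to_use, words = word_to_use)
}

set.seed(1)
# toy document-by-word count matrix: 50 documents, 200 words, mostly zeros
X = Matrix(rpois(50 * 200, lambda = 0.05), nrow = 50, sparse = TRUE)
colnames(X) = paste0("w", seq_len(ncol(X)))

res3 = filter_counts(X, min_docs = 3)
res5 = filter_counts(X, min_docs = 5)
dim(res3$mat)  # 30 documents (60% of 50), words seen in >= 3 of them
dim(res5$mat)  # same documents, a vocabulary no larger than with min_docs = 3
```

In the analysis itself, the same two steps, with `doc_frac = 0.6` and `min_docs = 3`, produce the 1924 x 3034 matrix passed to `fit_topic_model`.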

library(Matrix)

datax = readRDS('data/sla_full.rds')

dim(datax$data)

[1] 3207 10104

sum(datax$data==0)/prod(dim(datax$data))

[1] 0.9948157

datax$data = Matrix(datax$data,sparse = TRUE)

Data filtering

Keep the 60% of documents with the largest total word counts (this drops the 40% shortest documents).

doc_to_use = order(rowSums(datax$data),decreasing = TRUE)[1:round(nrow(datax$data)*0.6)]

mat = datax$data[doc_to_use,]

samples = datax$samples

samples = lapply(samples, function(z){z[doc_to_use]})

Then remove words that appear in fewer than 3 of the retained documents.

word_to_use = which(colSums(mat>0)>=3)

mat = mat[,word_to_use]

Model fitting

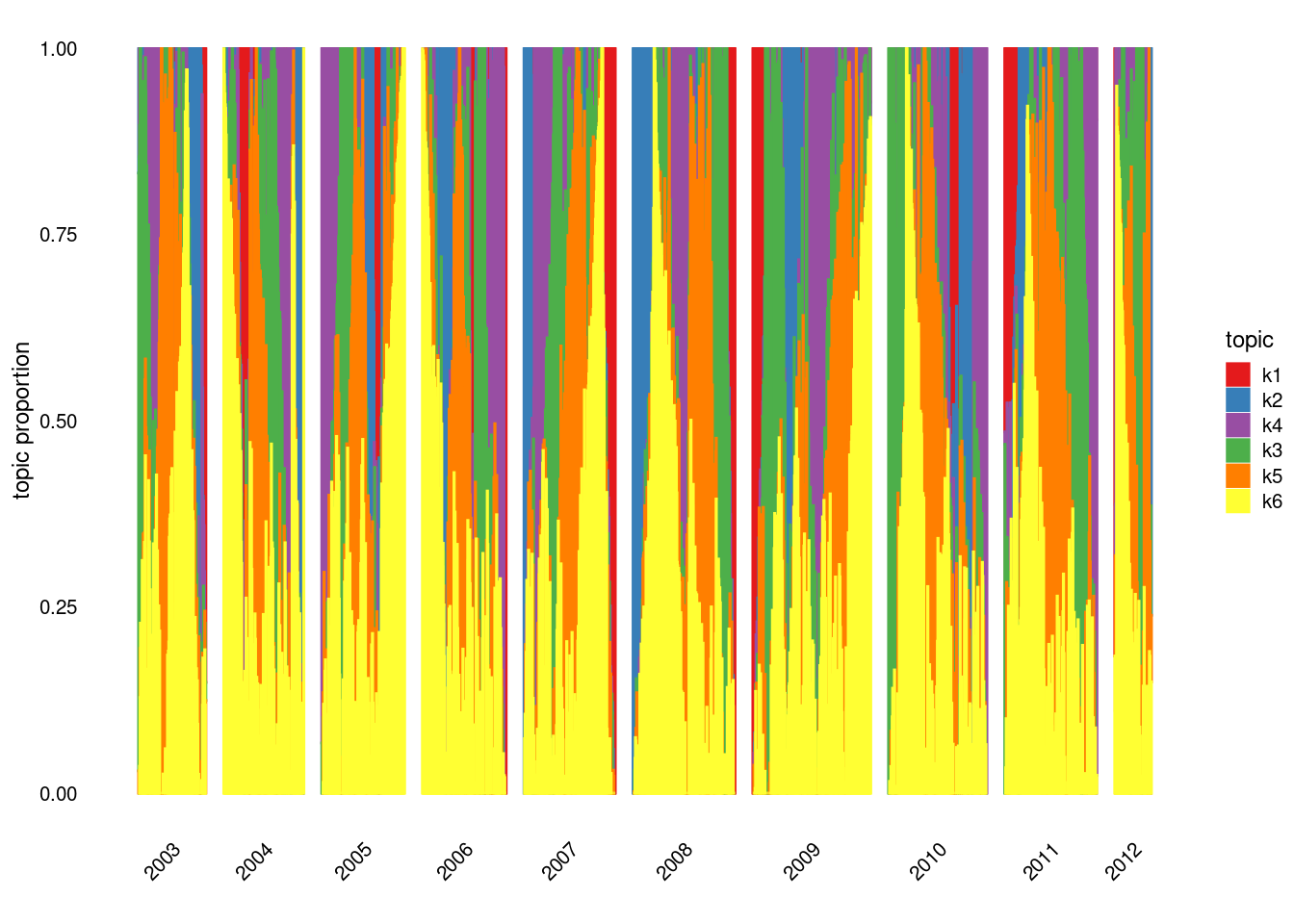

Topic model

library(fastTopics)

fit_tm = fit_topic_model(mat,k=6)

Initializing factors using Topic SCORE algorithm.

Initializing loadings by running 10 SCD updates.

Fitting rank-6 Poisson NMF to 1924 x 3034 sparse matrix.

Running 100 EM updates, without extrapolation (fastTopics 0.6-142).

Refining model fit.

Fitting rank-6 Poisson NMF to 1924 x 3034 sparse matrix.

Running 100 SCD updates, with extrapolation (fastTopics 0.6-142).

structure_plot(fit_tm,grouping = samples$journal,gap = 40)
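The structure plot shows each document's topic proportions, grouped by journal. A compact numeric companion to it is the average topic proportion within each group. The helper below is a hypothetical base-R sketch. It assumes `L` is a documents-by-topics matrix whose rows sum to 1, which is how I understand the loadings of a fastTopics multinomial topic model fit such as `fit_tm$L`, and that `grouping` has one label per document, like `samples$journal`.

```r
# Hypothetical helper: average topic proportions within each group,
# e.g. per journal or per year. L is a documents-by-topics matrix whose
# rows sum to 1; grouping has one label per document.
mean_topic_by_group = function(L, grouping) {
  sums = rowsum(L, as.character(grouping))             # add up rows sharing a label
  counts = as.vector(table(grouping)[rownames(sums)])  # documents per group
  sums / counts                                        # divides row g by counts[g]
}

# toy example: 6 documents, 3 topics, 2 groups
L_toy = matrix(c(0.7, 0.2,  0.1,
                 0.5, 0.3,  0.2,
                 0.9, 0.05, 0.05,
                 0.1, 0.8,  0.1,
                 0.2, 0.6,  0.2,
                 0.0, 0.7,  0.3), ncol = 3, byrow = TRUE)
group_toy = c("A", "A", "A", "B", "B", "B")
res = mean_topic_by_group(L_toy, group_toy)
round(res, 3)
```

On the real fit this would be called as `mean_topic_by_group(fit_tm$L, samples$journal)`, or with `samples$year` for the second plot. Each row of the result still sums to 1, so the rows can be read as "typical document" topic mixtures for each group.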

structure_plot(fit_tm,grouping = samples$year,gap = 40)
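The fitting log shows that `fit_topic_model` first fits a rank-6 Poisson NMF, `X ≈ L %*% t(F)`. As I understand the fastTopics documentation, the result is then reported as a multinomial topic model by rescaling. After the rescaling, each column of `F` is a distribution over words and each row of `L` is a distribution over topics. This is why ranking a column of `fit_tm$F`, as the loop below does, gives the top words of a topic. The base-R sketch below illustrates the rescaling and the ranking. `poisson_to_multinom` and `top_words` are hypothetical helpers, not fastTopics functions.

```r
# Hypothetical helper: rescale a Poisson NMF fit, X ~ L %*% t(F), so that
# each column of F sums to 1 (word distribution of a topic) and each row
# of L sums to 1 (topic proportions of a document). The per-document
# totals s are returned so the Poisson means can be recovered.
poisson_to_multinom = function(L, F) {
  u  = colSums(F)                  # total weight of each factor
  F1 = sweep(F, 2, u, "/")         # columns of F1 sum to 1
  L1 = sweep(L, 2, u, "*")         # so that L1 %*% t(F1) equals L %*% t(F)
  s  = rowSums(L1)                 # expected total count of each document
  list(L = L1 / s, F = F1, s = s)  # L1 / s divides row i by s[i]
}

# Hypothetical helper: the n highest-weight words of each topic
# (one column per topic), mirroring the loop over k below.
top_words = function(F, n = 5) {
  apply(F, 2, function(f) rownames(F)[order(f, decreasing = TRUE)[seq_len(n)]])
}

set.seed(2)
L_toy = matrix(rgamma(8 * 2, shape = 1), nrow = 8)    # 8 documents, 2 factors
F_toy = matrix(rgamma(10 * 2, shape = 1), nrow = 10)  # 10 words, 2 factors
rownames(F_toy) = paste0("word", 1:10)

fit = poisson_to_multinom(L_toy, F_toy)
# the rescaled model gives the same Poisson means as the original one
all.equal(fit$s * fit$L %*% t(fit$F), L_toy %*% t(F_toy))  # TRUE
top_words(fit$F, n = 3)
```

Rescaling a column of `F` by a positive constant does not change the order of its entries, so the top words are the same before and after the conversion. Assuming the rows of `fit_tm$F` are named by word, `top_words(fit_tm$F, n = 20)` should return the same lists as the loop below, arranged as a 20 x 6 character matrix.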

for(k in 1:6){

print(colnames(mat)[order(fit_tm$F[,k],decreasing = TRUE)[1:20]])

}

[1] "test" "procedur" "statist" "distribut" "power" "control"

[7] "asymptot" "null" "bootstrap" "hypothesi" "rate" "propos"

[13] "problem" "sampl" "base" "method" "detect" "fals"

[19] "confid" "altern"

[1] "studi" "treatment" "data" "random" "method" "effect"

[7] "outcom" "design" "estim" "trial" "popul" "analysi"

[13] "cancer" "diseas" "model" "observ" "patient" "risk"

[19] "survey" "individu"

[1] "method" "select" "estim" "regress" "model" "variabl"

[7] "problem" "optim" "sampl" "propos" "design" "algorithm"

[13] "perform" "number" "predict" "linear" "predictor" "adapt"

[19] "simul" "size"

[1] "function" "estim" "densiti" "distribut" "random" "problem"

[7] "sampl" "asymptot" "matrix" "space" "paramet" "point"

[13] "observ" "set" "case" "rate" "curv" "converg"

[19] "bound" "class"

[1] "model" "data" "process" "bayesian" "method" "time"

[7] "distribut" "prior" "spatial" "approach" "comput" "markov"

[13] "posterior" "algorithm" "propos" "carlo" "mont" "structur"

[19] "cluster" "analysi"

[1] "estim" "model" "propos" "data" "likelihood"

[6] "function" "method" "regress" "paramet" "covari"

[11] "studi" "asymptot" "distribut" "nonparametr" "simul"

[16] "effici" "approach" "general" "linear" "time"

Perform a differential expression (DE) analysis with de_analysis to find the distinctive words of each topic.

de = de_analysis(fit_tm,mat)

Fitting 3034 Poisson models with k=6 using method="scd".

Computing log-fold change statistics from 3034 Poisson models with k=6.

Stabilizing posterior log-fold change estimates using adaptive shrinkage.

saveRDS(list(fit_tm=fit_tm,de=de),file='/project2/mstephens/dongyue/poisson_mf/sla/sla_full_tm_fit_w3.rds')
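The loop below does three things for each topic. It keeps the words whose local false sign rate (lfsr) is below 0.01, sorts them by posterior mean log-fold change, and prints the first and last 10. When fewer than 20 words pass the filter, `head` and `tail` overlap. For example, "formula" appears in both lists for the first topic, and "choic" in both lists for the third. Below is a hypothetical base-R helper that returns the two ends without repeating a word. It is written against a plain data frame with `postmean` and `lfsr` columns, like the `dat` built in the loop. The name `summarize_topic` and the toy data are illustrative only.

```r
# Hypothetical helper: words passing an lfsr threshold, ranked by posterior
# mean log-fold change, split into the n most positive and the n most
# negative without listing any word twice.
summarize_topic = function(dat, lfsr_max = 0.01, n = 10) {
  dat = dat[dat$lfsr < lfsr_max, , drop = FALSE]
  dat = dat[order(dat$postmean, decreasing = TRUE), , drop = FALSE]
  n_top = min(n, nrow(dat))
  n_bottom = min(n, nrow(dat) - n_top)  # only rows not already in `top`
  list(top = head(dat, n_top), bottom = tail(dat, n_bottom))
}

# toy data: "c" fails the lfsr filter, four words remain
dat = data.frame(postmean = c(2.1, -1.5, 0.8, 3.0, -0.2),
                 lfsr     = c(0.001, 0.002, 0.2, 1e-5, 0.005),
                 row.names = c("a", "b", "c", "d", "e"))
res = summarize_topic(dat, n = 3)
res$top     # rows d, a, e
res$bottom  # row b only, since d, a, e are already shown
```

Inside the loop, `summarize_topic(dat)` could replace the `subset`, `order`, `head` and `tail` steps while keeping the same 0.01 threshold.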

for(k in 1:6){

dat <- data.frame(postmean = de$postmean[,k],

z = de$z[,k],

lfsr = de$lfsr[,k])

rownames(dat) <- colnames(mat)

dat <- subset(dat,lfsr < 0.01)

dat <- dat[order(dat$postmean,decreasing = TRUE),]

print(head(dat,n=10))

print(tail(dat,n=10))

#print(colnames(datax$data)[order(temp$lfsr[,k],decreasing = F)[1:10]])

}

postmean z lfsr

power 4.160204 11.276361 8.249676e-28

confid 3.312390 9.079762 2.336386e-17

detect 2.636281 9.629694 3.039016e-19

conserv 2.585438 4.502628 5.916119e-04

resampl 2.521939 4.584298 4.561056e-04

interv 2.501495 6.585533 1.195959e-08

shift 2.166048 4.009285 2.903511e-03

block 2.142925 3.877538 3.918418e-03

critic 2.108076 5.227761 3.454846e-05

formula 2.101984 3.811435 4.531006e-03

postmean z lfsr

formula 2.101984 3.811435 4.531006e-03

procedur 2.024595 13.056927 7.885598e-36

statist 2.018728 13.863256 1.622205e-40

altern 1.848044 7.415730 5.796307e-11

control 1.805739 12.119941 1.079589e-30

sequenti 1.628459 4.983620 1.265348e-04

ratio 1.542885 5.924435 1.042817e-06

rank 1.484279 5.312290 2.776564e-05

multipl 1.383647 4.027696 3.006176e-03

valid 1.038529 3.976431 3.092879e-03

postmean z lfsr

assign 3.961965 6.603757 1.299230e-09

clinic 3.556808 7.406548 1.216056e-11

instrument 2.855662 4.365055 7.969606e-04

sensit 2.582189 5.350734 1.419823e-05

prevent 2.451624 4.572185 4.949116e-04

heart 2.438302 3.600126 6.384354e-03

propens 2.386969 5.758286 1.841871e-06

status 2.326396 4.429766 8.575141e-04

event 2.236792 7.688787 6.295895e-12

adjust 2.126826 5.894134 9.913858e-07

postmean z lfsr

effect 1.1691502 6.416187 5.727151e-08

risk 0.8438634 6.761301 4.154832e-09

error -0.6593077 -16.796150 0.000000e+00

time -0.6653684 -16.570688 0.000000e+00

miss -0.7898867 -107.927426 0.000000e+00

trend -0.9693631 -5.197883 3.868989e-05

model -1.0602626 -10.350348 0.000000e+00

estim -1.5968427 -7.470094 4.278267e-11

control -1.8057385 -12.119941 1.110223e-16

distribut -2.0115811 -45.205978 0.000000e+00

postmean z lfsr

select 3.336466 10.351516 1.106642e-22

dimension 2.769589 7.478573 2.071947e-11

classifi 2.516610 4.990449 8.481279e-05

predictor 2.483349 5.594347 4.244913e-06

reduct 2.339292 5.624712 3.944049e-06

bandwidth 2.260510 3.595671 6.669997e-03

optim 2.053143 8.886002 3.958868e-16

fast 2.052927 3.795430 4.717475e-03

solut 2.003312 5.646151 4.176386e-06

choic 1.705542 4.877042 1.968473e-04

postmean z lfsr

choic 1.7055420 4.877042 1.968473e-04

variabl 1.3788009 13.773192 7.101085e-40

adapt 1.1900014 4.511849 7.593234e-04

achiev 1.1519314 3.497199 8.202185e-03

high 1.1160161 3.836255 4.326231e-03

model 1.0848604 10.295746 8.399924e-22

perform 0.9454902 4.533670 5.860754e-04

predict 0.6647933 4.143902 1.395385e-03

method 0.6225513 6.132097 9.777663e-08

asymptot -1.8513853 -4.690888 3.994326e-04

postmean z lfsr

theorem 3.149735 4.814447 1.052895e-04

beta 3.136865 4.539268 3.317388e-04

matric 3.006546 3.348622 8.386012e-03

curv 2.869921 6.375726 3.332196e-08

definit 2.524392 6.055003 3.082176e-07

bound 2.416781 6.740909 4.603137e-09

invari 2.206601 3.721100 5.323767e-03

satisfi 2.204368 5.272107 2.650769e-05

princip 2.179660 5.638650 3.994924e-06

gaussian 1.932677 9.149637 4.018161e-17

postmean z lfsr

uniform 1.3171426 3.954091 3.520474e-03

transform 1.2563043 5.107259 7.317658e-05

point 1.2006961 6.795998 5.061422e-09

unknown 1.1149727 5.634814 5.034475e-06

function 0.9587833 10.937975 9.286406e-25

paramet 0.4199830 7.480741 1.855855e-11

rate -0.2381620 -6.898450 3.733975e-09

highdimension -0.5207637 -213.222001 0.000000e+00

adapt -1.1452391 -5.535680 8.614225e-06

imag -1.2071217 -5.580734 6.973902e-06

postmean z lfsr

bayesian 3.642790 5.897915 1.896035e-07

price 2.887066 4.748112 1.841144e-04

mont 2.549975 9.879169 2.915954e-20

carlo 2.549645 9.029408 8.223951e-17

site 2.363606 4.693033 3.272670e-04

gene 2.242204 5.915088 8.274081e-07

captur 2.030773 5.652906 3.976380e-06

prior 1.996720 6.565220 1.818837e-08

process 1.508486 8.746347 1.662678e-15

structur 1.411899 5.284221 3.207260e-05

postmean z lfsr

prior 1.9967205 6.565220 1.818837e-08

process 1.5084855 8.746347 1.662678e-15

structur 1.4118985 5.284221 3.207260e-05

cluster 1.2958976 5.700693 3.728320e-06

detect 0.8473609 6.423631 3.577870e-08

factor -0.3046235 -4.926348 1.910552e-04

predict -0.6647933 -4.143902 1.395385e-03

nonparametr -0.7378801 -5.807886 9.152752e-07

human -0.9568054 -16.289250 0.000000e+00

paper -0.9599626 -21.483433 0.000000e+00

postmean z lfsr

likelihood 2.726655 10.286339 4.365425e-22

equat 2.694016 5.153628 3.470808e-05

hazard 2.444796 4.246940 1.436469e-03

parametr 2.345180 5.605167 4.367706e-06

nonparametr 1.932978 6.675058 9.215526e-09

covari 1.702887 7.939562 1.182525e-12

margin 1.447340 4.475438 8.936854e-04

robust 1.178930 3.572040 7.248075e-03

varianc 1.132425 4.224567 1.747583e-03

effici 1.091473 3.764331 4.961223e-03

postmean z lfsr

varianc 1.1324248 4.224567 1.747583e-03

effici 1.0914732 3.764331 4.961223e-03

estim 1.0748743 13.788489 6.149956e-40

illustr 1.0431311 3.918374 3.531181e-03

paramet 1.0118190 7.788797 4.066687e-12

propos 0.8573053 7.932773 1.016213e-12

simul 0.7557374 4.600455 3.315268e-04

function -0.9587833 -10.937975 0.000000e+00

transform -1.2545587 -5.168569 5.507170e-05

associ -1.5726865 -97.805546 0.000000e+00

EBNMF fit
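The flash and ebpmf_log fits below both use `ebnm::ebnm_point_exponential` as the prior family, and this is what imposes the non-negativity constraint. Our reading of the ebnm documentation is that this family, with its mode fixed at zero, mixes a point mass at zero with an exponential distribution. A draw from it is therefore either exactly zero or positive. The sketch below simulates from such a prior. `rpoint_exp` and the chosen values of `pi0` and `rate` are illustrative assumptions and are not part of ebnm.

```r
# Hypothetical illustration: sample from a point-exponential prior
#   g(x) = pi0 * delta_0(x) + (1 - pi0) * Exponential(x; rate)
# (mode fixed at 0), the kind of prior ebnm_point_exponential estimates.
rpoint_exp <- function(n, pi0 = 0.7, rate = 1) {
  is_zero <- runif(n) < pi0        # draws that come from the point mass
  x <- rexp(n, rate = rate)        # exponential "slab" draws (always > 0)
  x[is_zero] <- 0                  # point-mass draws are exactly zero
  x
}

set.seed(1)
draws <- rpoint_exp(1e4, pi0 = 0.7, rate = 2)
mean(draws == 0)        # should be close to pi0 = 0.7
mean(draws[draws > 0])  # should be close to 1 / rate = 0.5
min(draws)              # no negative values are possible
```

Non-zero draws follow the exponential part, so their mean is about `1 / rate`. A large `pi0` pushes most entries to zero, which is why this prior also encourages sparse loadings and factors.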

library(flashier)

Loading required package: magrittr

Loading required package: ebnm

library(ebpmf)

Y_tilde = log_for_ebmf(mat)

fit_flash = flash(Y_tilde,ebnm_fn = ebnm::ebnm_point_exponential,var_type = 2,backfit = T,greedy_Kmax = 10)

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Adding factor 3 to flash object...

Adding factor 4 to flash object...

Adding factor 5 to flash object...

Adding factor 6 to flash object...

Adding factor 7 to flash object...

Adding factor 8 to flash object...

Adding factor 9 to flash object...

Adding factor 10 to flash object...

Wrapping up...

Done.

Backfitting 10 factors (tolerance: 8.70e-02)...

--Estimate of factor 4 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

--Estimate of factor 1 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

Difference between iterations is within 1.0e+04...

--Estimate of factor 1 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

Difference between iterations is within 1.0e+03...

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

Difference between iterations is within 1.0e+02...

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

Difference between iterations is within 1.0e+01...

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

Difference between iterations is within 1.0e+00...

--Estimate of factor 1 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

--Estimate of factor 3 is numerically zero!

--Estimate of factor 5 is numerically zero!

Difference between iterations is within 1.0e-01...

Wrapping up...

Done.

Nullchecking 10 factors...

One factor is identically zero.

Wrapping up...

Removed one factor.

Done.

# print the 20 terms with the largest posterior mean factor values, for each factor

for(k in 1:fit_flash$n_factors){

print(colnames(mat)[order(fit_flash$F_pm[,k],decreasing = T)[1:20]])

}

[1] "model" "estim" "data" "method" "propos" "studi"

[7] "function" "distribut" "simul" "sampl" "paramet" "approach"

[13] "asymptot" "statist" "problem" "base" "regress" "general"

[19] "test" "analysi"

[1] "hazard" "acut" "cox" "surviv" "work"

[6] "proport" "transplant" "censor" "transit" "quantil"

[11] "basic" "stroke" "integr" "overcom" "leukaemia"

[16] "freeli" "ying" "bone" "aalen" "multist"

[1] "treatment" "random" "trial" "assign" "effect" "complianc"

[7] "placebo" "assumpt" "patient" "causal" "outcom" "drug"

[13] "adher" "noncompli" "subject" "clinic" "arm" "dose"

[19] "infer" "receiv"

[1] "fals" "procedur" "control" "test" "rate" "reject"

[7] "discoveri" "hypothes" "null" "multipl" "pvalu" "number"

[13] "fdr" "statist" "depend" "error" "kfwer" "stepdown"

[19] "hochberg" "familywis"

[1] "empir" "confid" "ratio" "likelihood"

[5] "region" "limit" "selfscal" "plugin"

[9] "invari" "accuraci" "undersmooth" "correct"

[13] "need" "chisquar" "likelihoodbas" "attack"

[17] "nconsist" "bias" "distort" "biascorrect"

[1] "simex" "measur" "error"

[4] "simulationextrapol" "undersmooth" "asymptot"

[7] "longer" "presenc" "unobserv"

[10] "simul" "polynomi" "errorpron"

[13] "frailti" "principl" "repeat"

[16] "easi" "method" "finitesampl"

[19] "cook" "nutrit"

[1] "asymptot" "densiti" "rank" "hallin" "sign"

[6] "rankbas" "assumpt" "base" "semiparametr" "ellipt"

[11] "classic" "innov" "effici" "requir" "uniform"

[16] "radial" "ordinari" "consid" "cam" "bernoulli"

[1] "plasma" "patient" "varyingcoeffici" "viral"

[5] "covariateadjust" "mutat" "virus" "resist"

[9] "therapi" "drug" "multipl" "determin"

[13] "clone" "pathway" "serum" "thought"

[17] "sequenc" "fashion" "bodi" "mass"

[1] "pseudoparti" "likelihood" "failur" "conduct" "local"

[6] "onestep" "hazard" "propos" "multivari" "coeffici"

[11] "penalis" "compromis" "save" "burden" "attempt"

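[16] "analyz" "surviv" "nconsist" "accomplish" "framingham"

# structure_plot_general(): wrap a non-negative loadings matrix Lhat in a

# fastTopics "multinom_topic_model_fit" object and draw it with structure_plot()

# inputs: Lhat (loadings), Fhat (replaced by a placeholder below), grouping

# no poisson2multinom conversion is applied; loadings are rescaled via std_L_method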

library(fastTopics)

library(ggplot2)

library(gridExtra)

structure_plot_general = function(Lhat,Fhat,grouping,title=NULL,

loadings_order = 'embed',

print_plot=TRUE,

seed=12345,

n_samples = NULL,

gap=40,

std_L_method = 'sum_to_1',

show_legend=TRUE,

K = NULL,

colors = c('#a6cee3',

'#1f78b4',

'#b2df8a',

'#33a02c',

'#fb9a99',

'#e31a1c',

'#fdbf6f',

'#ff7f00',

'#cab2d6',

'#6a3d9a',

'#ffff99',

'#b15928')){

set.seed(seed)

#s <- apply(Lhat,2,max)

#Lhat <- t(t(Lhat) / s)

# default to 2000 samples per group when loadings are ordered by embedding

if(is.null(n_samples) && all(loadings_order == "embed")){

n_samples = 2000

}

if(std_L_method=='sum_to_1'){

Lhat = Lhat/rowSums(Lhat)

}

if(std_L_method=='row_max_1'){

Lhat = Lhat/c(apply(Lhat,1,max))

}

if(std_L_method=='col_max_1'){

Lhat = apply(Lhat,2,function(z){z/max(z)})

}

if(std_L_method=='col_norm_1'){

Lhat = apply(Lhat,2,function(z){z/norm(z,'2')})

}

if(!is.null(K)){

Lhat = Lhat[,1:K]

Fhat = Fhat[,1:K]

}

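# placeholder F: the structure plot is drawn from the loadings only, so the Fhat argument is overridden here

Fhat = matrix(1,nrow=3,ncol=ncol(Lhat))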

if(is.null(colnames(Lhat))){

colnames(Lhat) <- paste0("k",1:ncol(Lhat))

}

fit_list <- list(L = Lhat,F = Fhat)

class(fit_list) <- c("multinom_topic_model_fit", "list")

p <- structure_plot(fit_list,grouping = grouping,

loadings_order = loadings_order,

n = n_samples,gap = gap,colors=colors,verbose=F) +

labs(y = "loading",color = "dim",fill = "dim") + ggtitle(title)

if(!show_legend){

p <- p + theme(legend.position="none")

}

if(print_plot){

print(p)

}

return(p)

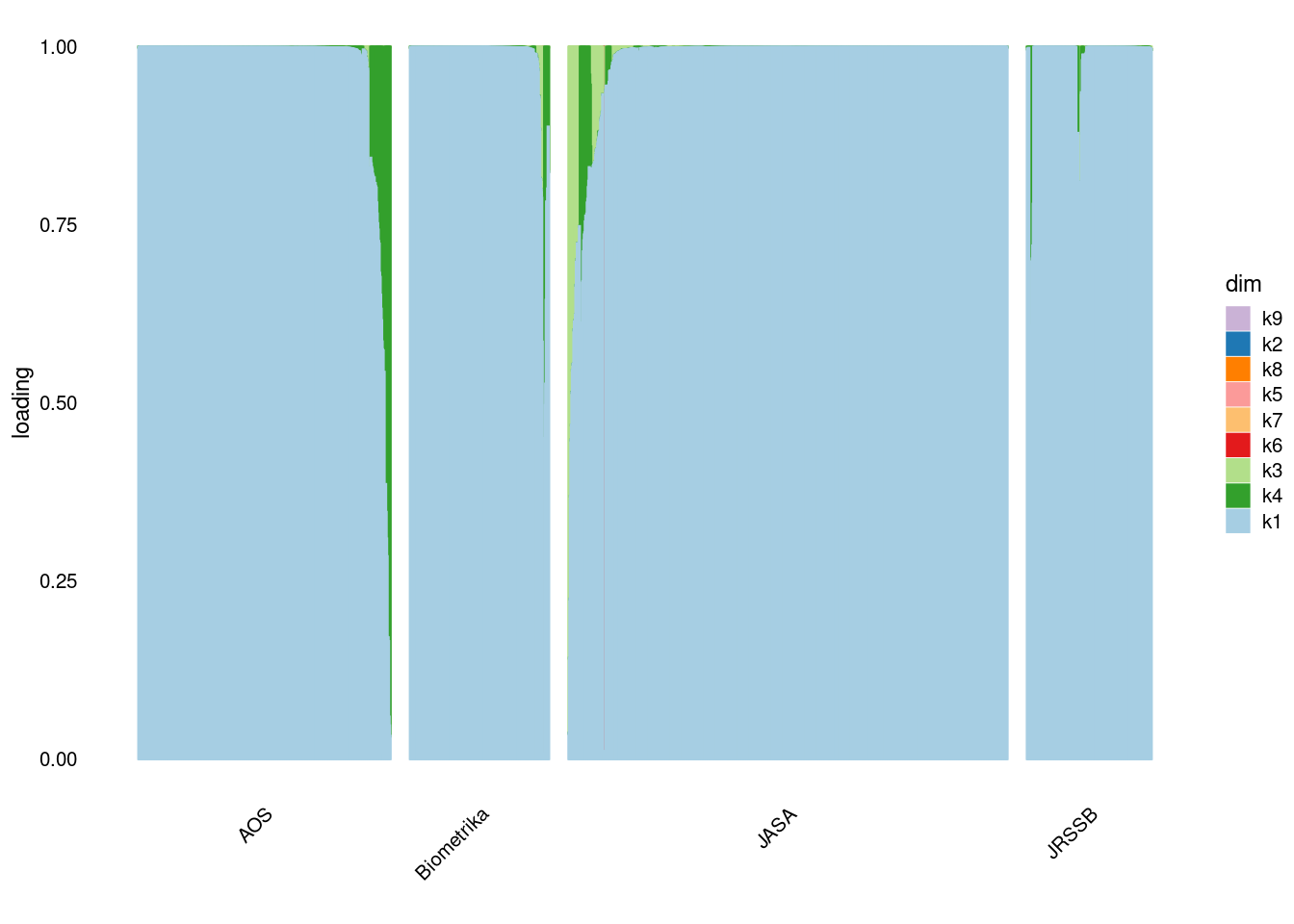

}p1=structure_plot_general(fit_flash$L_pm,fit_flash$F_pm,grouping = samples$journal,std_L_method = 'sum_to_1')Running tsne on 508 x 9 matrix.Running tsne on 280 x 9 matrix.Running tsne on 885 x 9 matrix.Running tsne on 251 x 9 matrix.

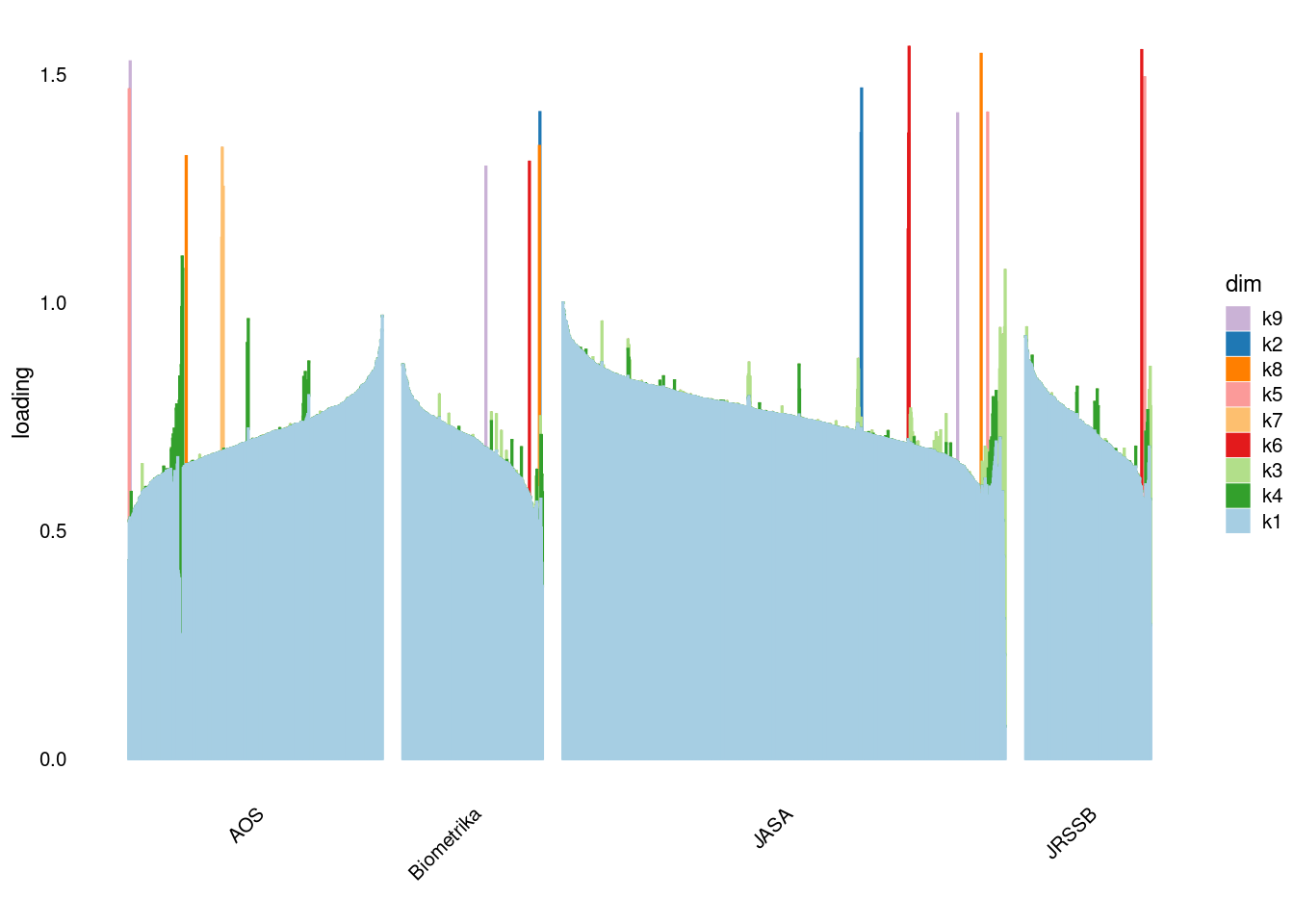

p2=structure_plot_general(fit_flash$L_pm,fit_flash$F_pm,grouping = samples$journal,std_L_method = 'row_max_1')

Running tsne on 508 x 9 matrix.

Running tsne on 280 x 9 matrix.

Running tsne on 885 x 9 matrix.

Running tsne on 251 x 9 matrix.

p3=structure_plot_general(fit_flash$L_pm,fit_flash$F_pm,grouping = samples$journal,std_L_method = 'col_norm_1')

Running tsne on 508 x 9 matrix.

Running tsne on 280 x 9 matrix.

Running tsne on 885 x 9 matrix.

Running tsne on 251 x 9 matrix.

p4=structure_plot_general(fit_flash$L_pm,fit_flash$F_pm,grouping = samples$journal,std_L_method = 'col_max_1')

Running tsne on 508 x 9 matrix.

Running tsne on 280 x 9 matrix.

Running tsne on 885 x 9 matrix.

Running tsne on 251 x 9 matrix.
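The four `std_L_method` options in `structure_plot_general` rescale the loadings before plotting, and each choice gives the bar heights a different meaning. The sketch below applies the same four rescalings to a small made-up loadings matrix. The matrix is purely illustrative. `sqrt(sum(z^2))` stands in for the `norm(z,'2')` call used in the function, and the two agree for a vector.

```r
# Hypothetical toy loadings: 3 samples (rows) x 2 factors (columns)
L <- matrix(c(1, 3,
              2, 2,
              0, 4), nrow = 3, byrow = TRUE)

# 'sum_to_1': each row sums to 1 (topic-model-style proportions)
L_sum <- L / rowSums(L)

# 'row_max_1': each row divided by its largest loading
L_rowmax <- L / apply(L, 1, max)

# 'col_max_1': each column divided by its largest loading
L_colmax <- apply(L, 2, function(z) z / max(z))

# 'col_norm_1': each column scaled to unit Euclidean norm
L_colnorm <- apply(L, 2, function(z) z / sqrt(sum(z^2)))

print(L_sum)      # rows: (0.25, 0.75), (0.5, 0.5), (0, 1)
print(L_rowmax)
print(L_colmax)
print(L_colnorm)
```

With `sum_to_1`, each sample's bar shows its relative membership across factors. The column-wise options instead preserve differences in overall magnitude between samples within a factor. A sample whose loadings are all zero would produce `NaN` under `sum_to_1` and `row_max_1`.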

EBPMF fit

Init 1

library(ebpmf)

fit_ebpmf1 = ebpmf_log(mat,

flash_control=list(backfit_extrapolate=T,backfit_warmstart=T,

ebnm.fn = c(ebnm::ebnm_point_exponential, ebnm::ebnm_point_exponential),

loadings_sign = 1,factors_sign=1,Kmax=8),

init_control = list(n_cores=5,flash_est_sigma2=F,log_init_for_non0y=T),

general_control = list(maxiter=500,save_init_val=T,save_latent_M=T),

sigma2_control = list(return_sigma2_trace=T))

Initializing

Solving VGA for column 1...

Running initial EBMF fit

Running iterations...

iter 10, avg elbo=-0.09779, K=10

iter 20, avg elbo=-0.09641, K=10

iter 30, avg elbo=-0.09575, K=10

iter 40, avg elbo=-0.09524, K=9

iter 50, avg elbo=-0.09499, K=9

iter 60, avg elbo=-0.09479, K=9

iter 70, avg elbo=-0.09463, K=9

iter 80, avg elbo=-0.09449, K=9

iter 90, avg elbo=-0.09437, K=9#fit_ebpmf1 = readRDS('/project2/mstephens/dongyue/poisson_mf/sla/slafull_ebnmf_fit_init1.rds')

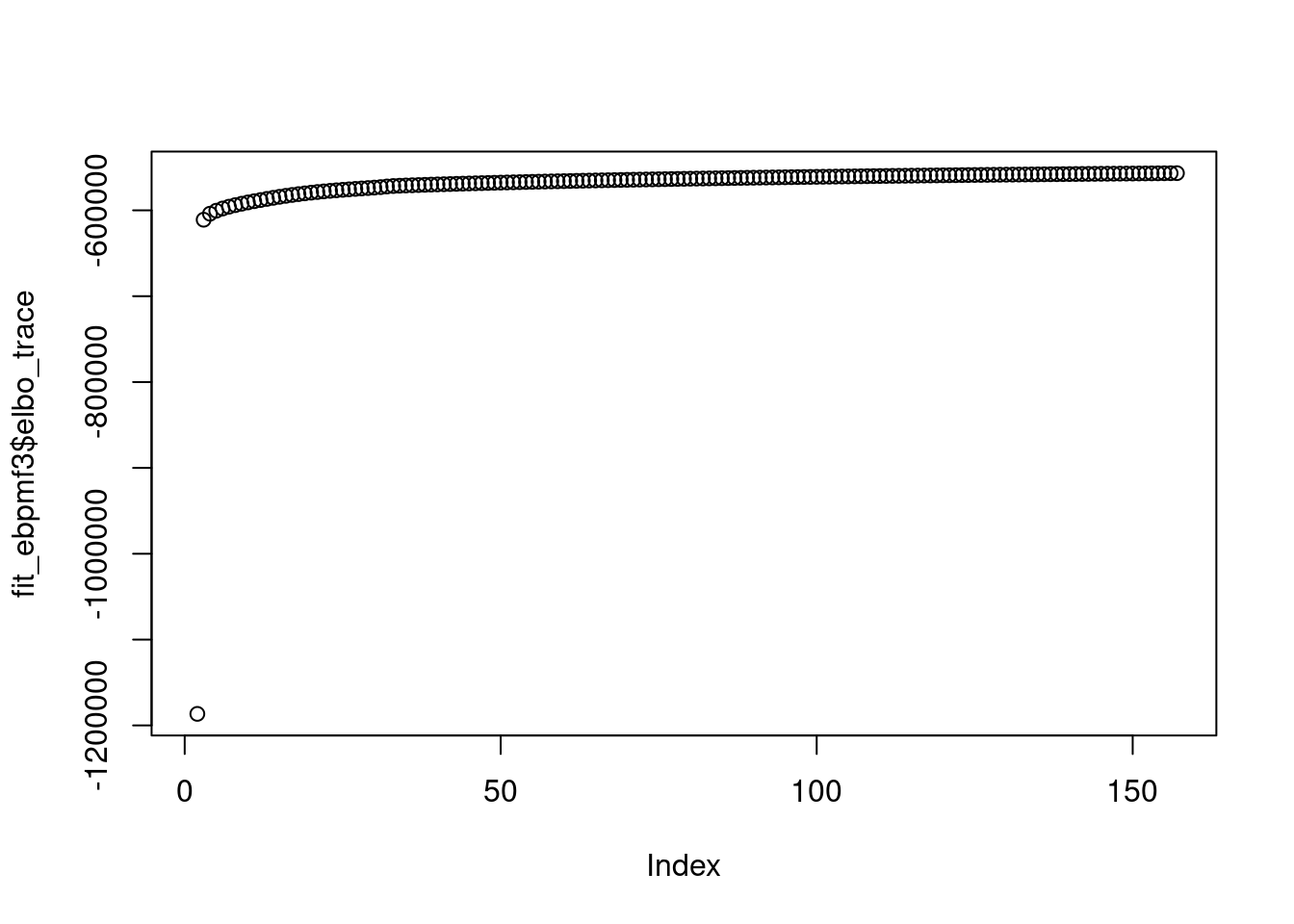

saveRDS(fit_ebpmf1,file='/project2/mstephens/dongyue/poisson_mf/sla/slafull_ebnmf_fit_w3_init1.rds')plot(fit_ebpmf1$elbo_trace)

plot(fit_ebpmf1$sigma2_trace[,100])

for(k in 3:fit_ebpmf1$fit_flash$n_factors){

print(colnames(mat)[order(fit_ebpmf1$fit_flash$F_pm[,k],decreasing = T)[1:15]])

}

[1] "treatment" "trial" "causal" "placebo" "assign"

[6] "complianc" "depress" "arm" "patient" "adher"

[11] "noncompli" "outcom" "clinic" "dose" "instrument"

[1] "materi" "onlin" "supplementari" "supplement"

[5] "proof" "articl" "test" "data"

[9] "structur" "null" "correl" "protein"

[13] "summari" "imag" "screen"

[1] "health" "agenc" "climat" "mortal" "air" "pollut" "qualiti"

[8] "nation" "ozon" "year" "monitor" "trend" "public" "tempor"

[15] "chang"

[1] "fdr" "fals" "discoveri" "reject" "pvalu" "stepdown"

[7] "stepup" "kfwer" "hochberg" "hypothes" "fdp" "fwer"

[13] "control" "benjamini" "familywis"

[1] "chain" "markov" "mont" "carlo" "mcmc" "posterior"

[7] "sampler" "algorithm" "bayesian" "prior" "hierarch" "infer"

[13] "comput" "model" "mixtur"

[1] "gene" "microarray" "express" "cdna" "array"

[6] "differenti" "biolog" "thousand" "experi" "identifi"

[11] "challeng" "detect" "cluster" "cancer" "shrinkag"

[1] "asa" "statistician" "polici" "today" "scienc"

[6] "maker" "bring" "technolog" "scientist" "live"

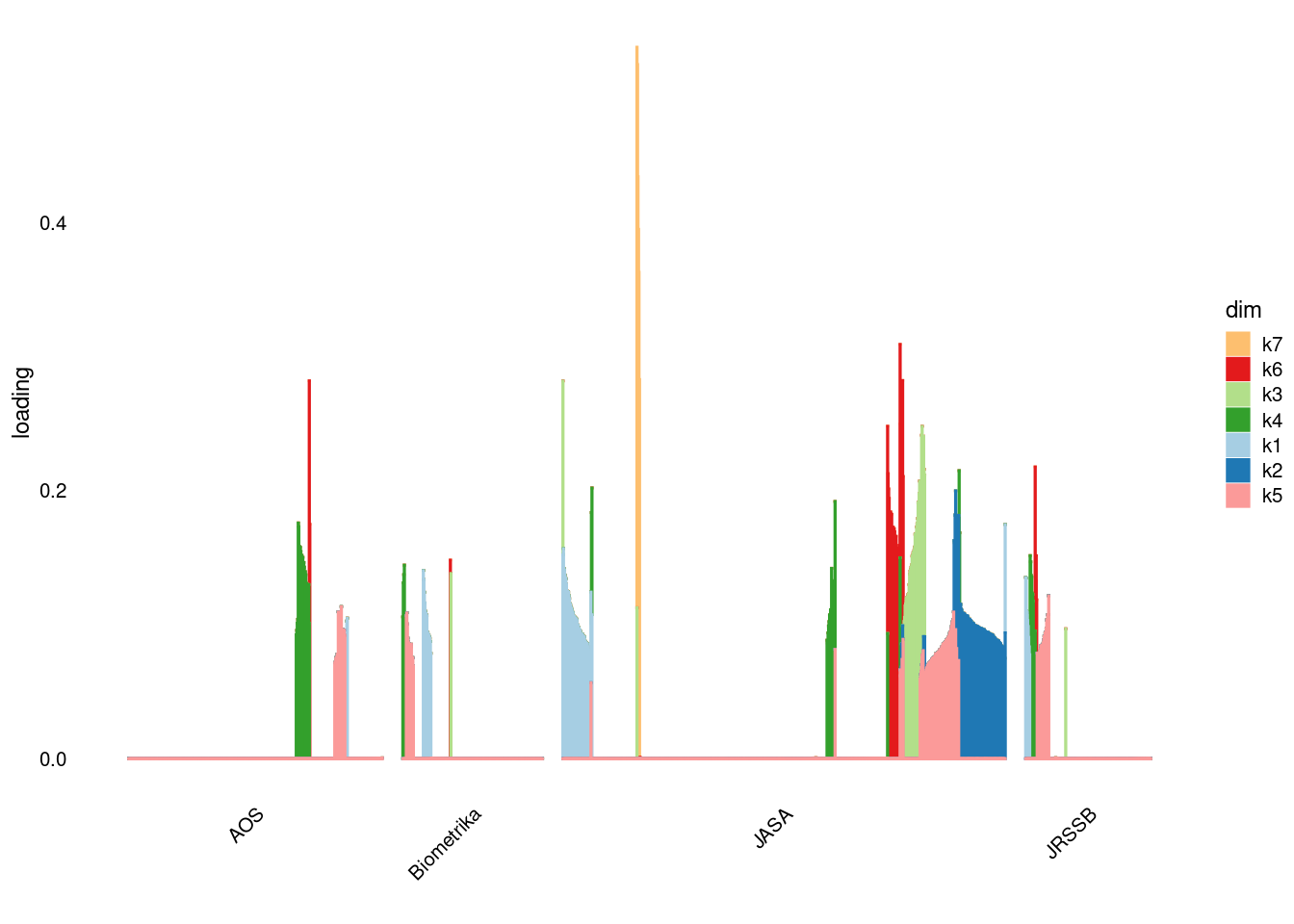

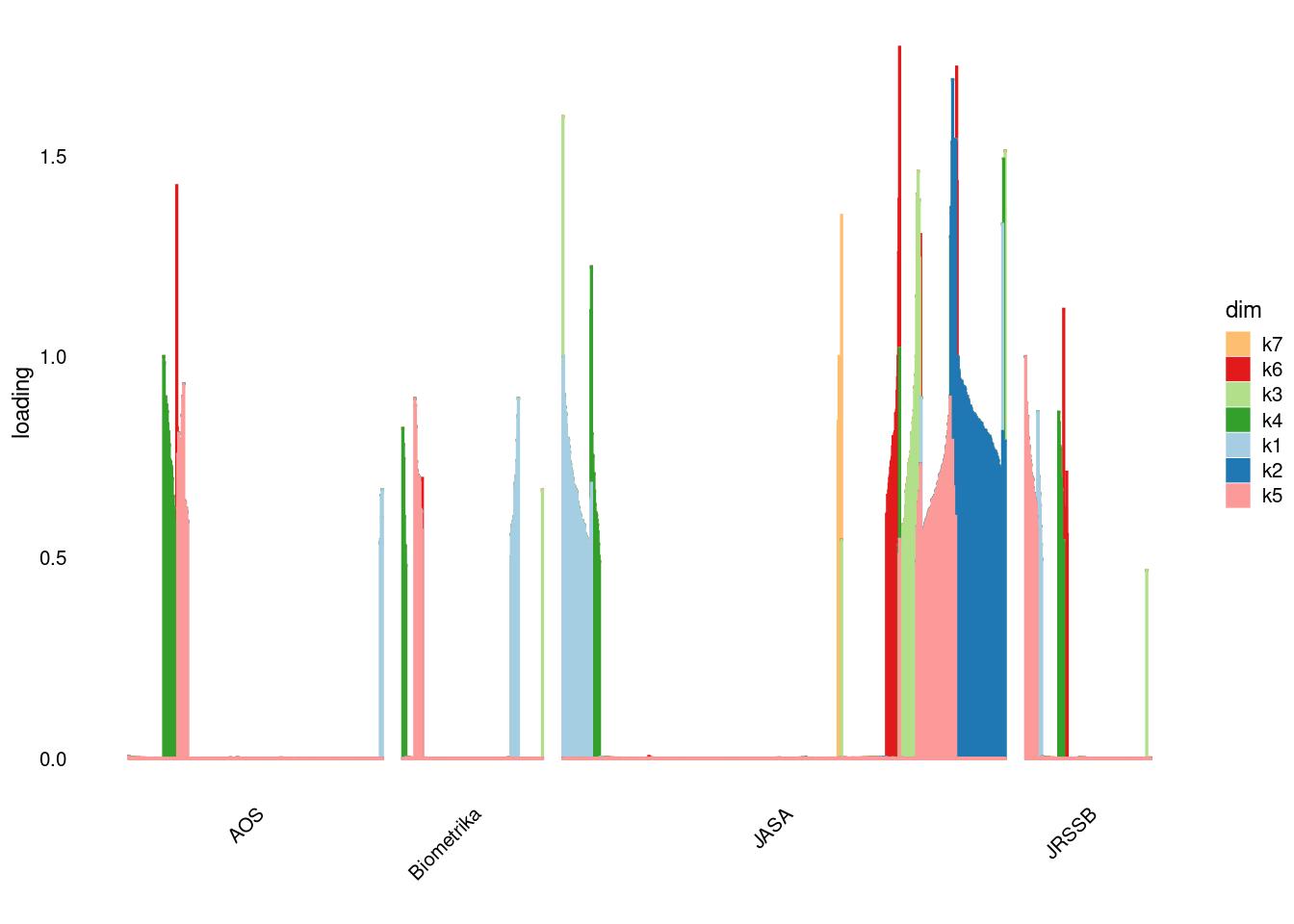

[11] "communic" "scientif" "role" "decis" "engin" p1=structure_plot_general(fit_ebpmf1$fit_flash$L_pm[,-c(1,2)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'sum_to_1')Running tsne on 508 x 7 matrix.Running tsne on 280 x 7 matrix.Running tsne on 885 x 7 matrix.Running tsne on 251 x 7 matrix.

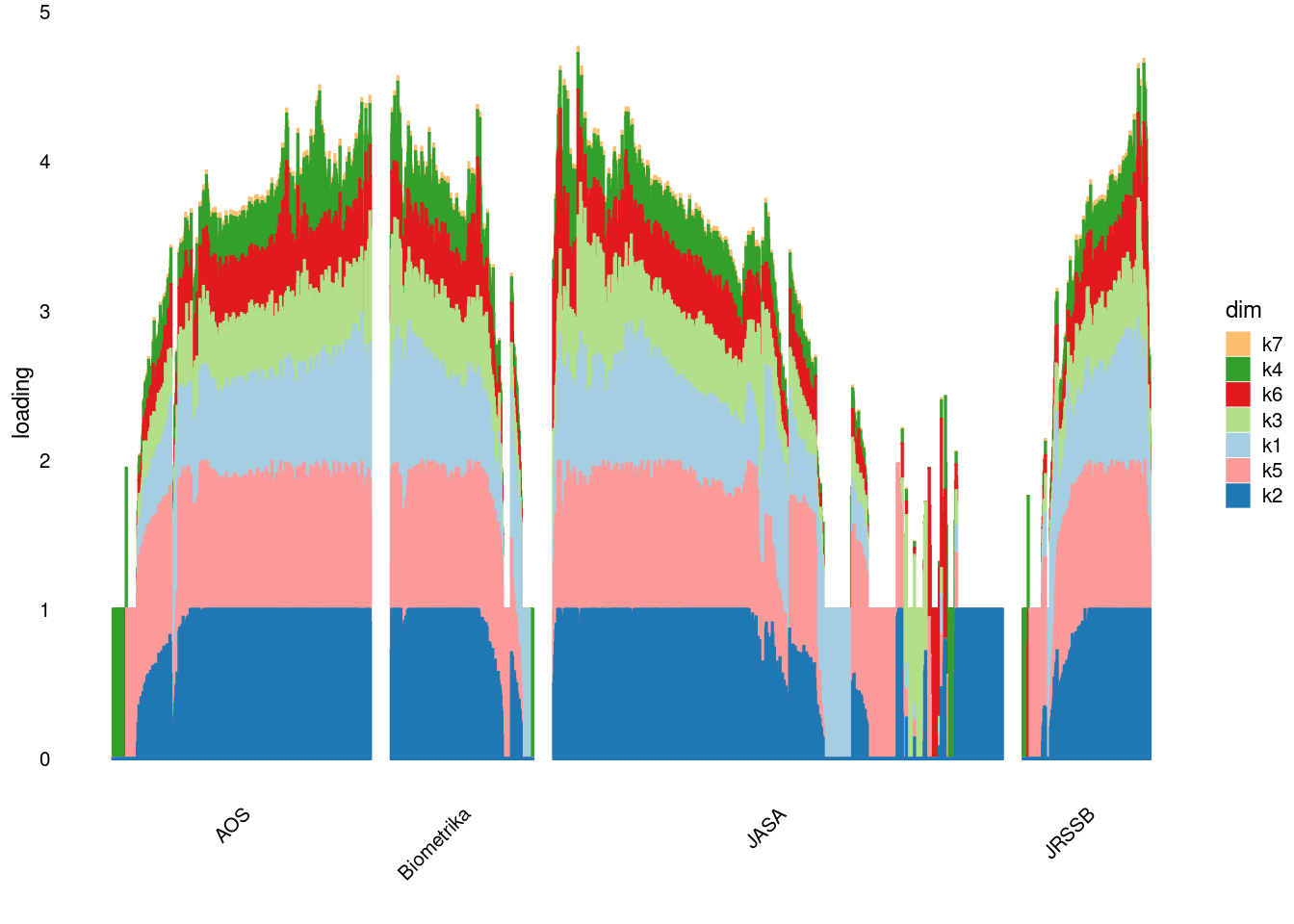

p2=structure_plot_general(fit_ebpmf1$fit_flash$L_pm[,-c(1,2)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'row_max_1')

Running tsne on 508 x 7 matrix.

Running tsne on 280 x 7 matrix.

Running tsne on 885 x 7 matrix.

Running tsne on 251 x 7 matrix.

p3=structure_plot_general(fit_ebpmf1$fit_flash$L_pm[,-c(1,2)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'col_norm_1')

Running tsne on 508 x 7 matrix.

Running tsne on 280 x 7 matrix.

Running tsne on 885 x 7 matrix.

Running tsne on 251 x 7 matrix.

p4=structure_plot_general(fit_ebpmf1$fit_flash$L_pm[,-c(1,2)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'col_max_1')

Running tsne on 508 x 7 matrix.

Running tsne on 280 x 7 matrix.

Running tsne on 885 x 7 matrix.

Running tsne on 251 x 7 matrix.
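Every fit in this analysis repeats the same top-terms loop. A small helper could factor it out. `top_words` below is a hypothetical function written for this note and is not part of flashier or ebpmf. Its `skip` argument mirrors the loops above that start at `k = 3`.

```r
# Hypothetical helper: for each factor (column) of F, return the n terms
# with the largest values, optionally skipping some factors.
top_words <- function(F, vocab, n = 15, skip = integer(0)) {
  stopifnot(nrow(F) == length(vocab))
  ks <- setdiff(seq_len(ncol(F)), skip)   # factors to report
  idx <- seq_len(min(n, nrow(F)))         # guard against n > number of terms
  out <- lapply(ks, function(k) vocab[order(F[, k], decreasing = TRUE)[idx]])
  names(out) <- paste0("k", ks)
  out
}

# Toy example: 4 terms x 3 factors, skipping factor 1
vocab <- c("fdr", "trial", "markov", "gene")
F_toy <- cbind(c(5, 0, 1, 2), c(0, 3, 0, 1), c(0, 0, 4, 0))
res <- top_words(F_toy, vocab, n = 2, skip = 1)
print(res)
```

On the saved fit, `top_words(fit_ebpmf1$fit_flash$F_pm, colnames(mat), n = 15, skip = 1:2)` should return the same terms as the Init 1 loop, assuming `F_pm` has one row per term in `colnames(mat)`.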

Init 2

library(ebpmf)

fit_ebpmf2 = ebpmf_log(mat,

flash_control=list(backfit_extrapolate=T,backfit_warmstart=T,

ebnm.fn = c(ebnm::ebnm_point_exponential, ebnm::ebnm_point_exponential),

loadings_sign = 1,factors_sign=1,Kmax=8),

init_control = list(n_cores=5,flash_est_sigma2=T,log_init_for_non0y=F),

general_control = list(maxiter=500,save_init_val=T,save_latent_M=T),

sigma2_control = list(return_sigma2_trace=T))

Initializing

Solving VGA for column 1...

Running initial EBMF fit

Running iterations...

iter 10, avg elbo=-0.11589, K=10

iter 20, avg elbo=-0.11048, K=10

iter 30, avg elbo=-0.10693, K=10

iter 40, avg elbo=-0.10472, K=9

iter 50, avg elbo=-0.10338, K=9

iter 60, avg elbo=-0.10235, K=9

iter 70, avg elbo=-0.10151, K=9

iter 80, avg elbo=-0.10082, K=9

iter 90, avg elbo=-0.10024, K=9

iter 100, avg elbo=-0.09974, K=9

iter 110, avg elbo=-0.09931, K=9

iter 120, avg elbo=-0.09892, K=9

iter 130, avg elbo=-0.09858, K=9

iter 140, avg elbo=-0.09828, K=9

iter 150, avg elbo=-0.098, K=9

iter 160, avg elbo=-0.09775, K=9

iter 170, avg elbo=-0.09752, K=9

iter 180, avg elbo=-0.09731, K=9

iter 190, avg elbo=-0.09712, K=9

iter 200, avg elbo=-0.09693, K=9

iter 210, avg elbo=-0.09677, K=9

iter 220, avg elbo=-0.09661, K=9

iter 230, avg elbo=-0.09646, K=9

iter 240, avg elbo=-0.09632, K=9

iter 250, avg elbo=-0.09619, K=9

iter 260, avg elbo=-0.09607, K=9

iter 270, avg elbo=-0.09595, K=9

iter 280, avg elbo=-0.09584, K=9#fit_ebpmf1 = readRDS('/project2/mstephens/dongyue/poisson_mf/sla/slafull_ebnmf_fit_init1.rds')

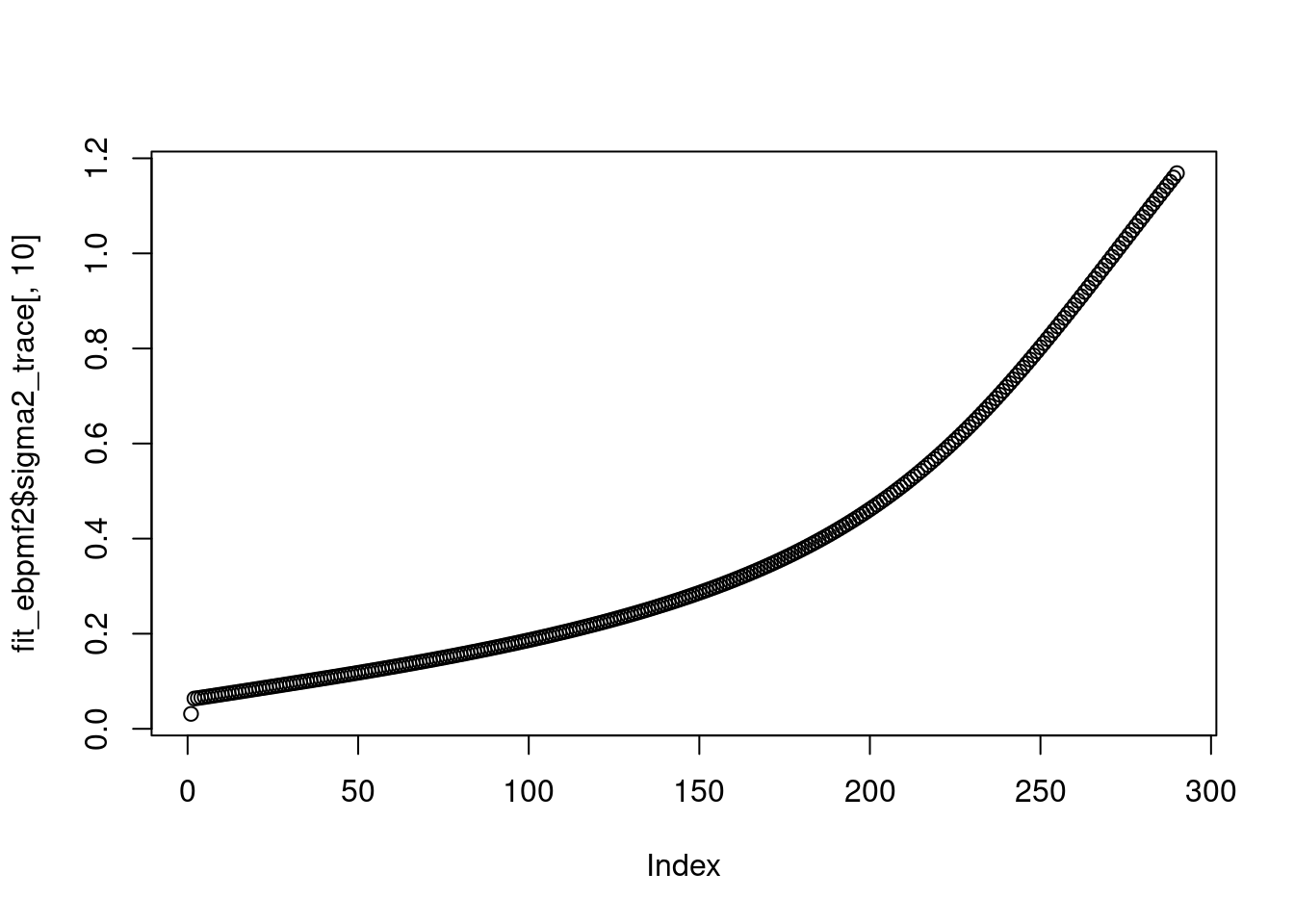

saveRDS(fit_ebpmf2,file='/project2/mstephens/dongyue/poisson_mf/sla/slafull_ebnmf_fit_w3_init2.rds')plot(fit_ebpmf2$elbo_trace)

plot(fit_ebpmf2$sigma2_trace[,10])

for(k in 3:fit_ebpmf2$fit_flash$n_factors){

print(colnames(mat)[order(fit_ebpmf2$fit_flash$F_pm[,k],decreasing = T)[1:15]])

}

[1] "fdr" "discoveri" "pvalu" "reject" "fals" "hypothes"

[7] "fdp" "kfwer" "fwer" "benjamini" "control" "hochberg"

[13] "efdp" "pfdp" "stepdown"

[1] "treatment" "causal" "placebo" "complianc"

[5] "adher" "depress" "trial" "noncompli"

[9] "arm" "intentiontotreat" "intenttotreat" "assign"

[13] "feldman" "patient" "estimand"

[1] "mate" "partner" "men" "adult" "attend" "marit" "opposit"

[8] "prefer" "freeli" "colleg" "affili" "sex" "pairwis" "parent"

[15] "twosid"

[1] "toxic" "dose" "dosefind" "deescal" "phase"

[6] "escal" "prespecif" "ethic" "reassess" "trial"

[11] "prespecifi" "coher" "bma" "target" "patient"

[1] "fdr" "fnr" "nondiscoveri" "sidak" "multipletest"

[6] "fdrcontrol" "storey" "singlestep" "bonferroni" "fals"

[11] "configur" "hypothes" "modifi" "procedur" "rate"

[1] "inadmiss" "admiss" "endpoint" "pearson" "lehmann"

[6] "action" "satur" "intraclass" "resurg" "companion"

[11] "devot" "shrinkag" "math" "loss" "risk"

[1] "holm" "sime" "stepdown" "bonferroni" "stepup"

[6] "intersect" "familywis" "cut" "hochberg" "corner"

[11] "partit" "pvalu" "critic" "probabilist" "inequ"

p1=structure_plot_general(fit_ebpmf2$fit_flash$L_pm[,-c(1)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'sum_to_1')

Running tsne on 508 x 8 matrix.

Running tsne on 280 x 8 matrix.

Running tsne on 885 x 8 matrix.

Running tsne on 251 x 8 matrix.

p2=structure_plot_general(fit_ebpmf2$fit_flash$L_pm[,-c(1,2)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'row_max_1')

Running tsne on 508 x 7 matrix.

Running tsne on 280 x 7 matrix.

Running tsne on 885 x 7 matrix.

Running tsne on 251 x 7 matrix.

p3=structure_plot_general(fit_ebpmf2$fit_flash$L_pm[,-c(1,2)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'col_norm_1')

Running tsne on 508 x 7 matrix.

Running tsne on 280 x 7 matrix.

Running tsne on 885 x 7 matrix.

Running tsne on 251 x 7 matrix.

p4=structure_plot_general(fit_ebpmf2$fit_flash$L_pm[,-c(1,2)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'col_max_1')

Running tsne on 508 x 7 matrix.

Running tsne on 280 x 7 matrix.

Running tsne on 885 x 7 matrix.

Running tsne on 251 x 7 matrix.
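For this initialization, the printed average ELBO was still rising at the last logged iteration: -0.09595 at iteration 270 and -0.09584 at iteration 280. One rough way to quantify this is the relative change of the ELBO trace over a recent window. `elbo_rel_change` below is a hypothetical diagnostic and not an ebpmf function. The trace it is applied to is made up.

```r
# Hypothetical convergence diagnostic: relative change of an ELBO trace over
# its last `window` iterations. Because it is a ratio, the result is the same
# whether the trace stores the total ELBO or a per-entry (average) ELBO.
elbo_rel_change <- function(elbo_trace, window = 10) {
  n <- length(elbo_trace)
  stopifnot(n > window)
  last <- elbo_trace[n]
  prev <- elbo_trace[n - window]
  (last - prev) / abs(prev)
}

# Made-up trace that is still rising slowly, similar in shape to the log above
trace <- -0.1 + 0.005 * (1 - exp(-(1:300) / 100))
rc <- elbo_rel_change(trace, window = 10)
print(rc)  # small and positive: the ELBO is still increasing
```

On the saved object, this would be `elbo_rel_change(fit_ebpmf2$elbo_trace)`, assuming `elbo_trace` stores one value per iteration. Where to set the "converged" threshold is a judgment call.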

Init 3

library(ebpmf)

fit_ebpmf3 = ebpmf_log(mat,

flash_control=list(backfit_extrapolate=T,backfit_warmstart=T,

ebnm.fn = c(ebnm::ebnm_point_exponential, ebnm::ebnm_point_exponential),

loadings_sign = 1,factors_sign=1,Kmax=10),

init_control = list(n_cores=5,flash_est_sigma2=T,log_init_for_non0y=T),

general_control = list(maxiter=500,save_init_val=T,save_latent_M=T),

sigma2_control = list(return_sigma2_trace=T))

Initializing

Solving VGA for column 1...

Running initial EBMF fit

Running iterations...

iter 10, avg elbo=-0.10097, K=12

iter 20, avg elbo=-0.09913, K=12

iter 30, avg elbo=-0.09816, K=12

iter 40, avg elbo=-0.09758, K=11

iter 50, avg elbo=-0.09723, K=11

iter 60, avg elbo=-0.09693, K=11

iter 70, avg elbo=-0.09668, K=11

iter 80, avg elbo=-0.09646, K=11

iter 90, avg elbo=-0.09626, K=11

iter 100, avg elbo=-0.09609, K=11

iter 110, avg elbo=-0.09593, K=11

iter 120, avg elbo=-0.09579, K=11

iter 130, avg elbo=-0.09566, K=11

iter 140, avg elbo=-0.09554, K=11

iter 150, avg elbo=-0.09544, K=11

#fit_ebpmf1 = readRDS('/project2/mstephens/dongyue/poisson_mf/sla/slafull_ebnmf_fit_init1.rds')

saveRDS(fit_ebpmf3,file='/project2/mstephens/dongyue/poisson_mf/sla/slafull_ebnmf_fit_w3_init3.rds')

plot(fit_ebpmf3$elbo_trace)

plot(fit_ebpmf3$sigma2_trace[,10])

for(k in 3:fit_ebpmf3$fit_flash$n_factors){

print(colnames(mat)[order(fit_ebpmf3$fit_flash$F_pm[,k],decreasing = T)[1:20]])

}

[1] "fdr" "fals" "reject" "discoveri" "stepup"

[6] "stepdown" "kfwer" "hochberg" "pvalu" "hypothes"

[11] "fdp" "fwer" "benjamini" "control" "sime"

[16] "singlestep" "familywis" "soc" "ser" "holm"

[1] "treatment" "causal" "placebo" "complianc"

[5] "depress" "adher" "trial" "noncompli"

[9] "intentiontotreat" "arm" "intenttotreat" "patient"

[13] "assign" "feldman" "estimand" "elder"

[17] "outcom" "placebocontrol" "children" "meet"

[1] "virus" "immunodefici" "hiv" "viral" "human"

[6] "vaccin" "resist" "infect" "riemannian" "therapi"

[11] "pressur" "mutat" "transmiss" "syndrom" "plasma"

[16] "antiretrovir" "evolutionari" "clone" "immun" "efficaci"

[1] "ambient" "symptom" "diamet" "infant" "respiratori"

[6] "air" "humid" "mother" "particul" "pollut"

[11] "etiolog" "sulfat" "sick" "carolina" "morbid"

[16] "prepar" "press" "undertaken" "constitu" "spend"

[1] "hazard" "surviv" "censor" "failur" "event"

[6] "recurr" "cure" "frailti" "cox" "lengthbias"

[11] "rightcensor" "incid" "cancer" "transplant" "termin"

[16] "logrank" "baselin" "cumul" "breast" "cohort"

[1] "gase" "greenhous" "climat" "warm" "solar"

[6] "earth" "radiat" "aerosol" "ocean" "atmospher"

[11] "temperatur" "stimul" "obscur" "winter" "station"

[16] "skill" "carbon" "land" "gather" "opposit"

[1] "voter" "vote" "campaign" "elect" "talli"

[6] "court" "green" "polit" "testimoni" "presidenti"

[11] "invalid" "west" "highqual" "incent" "modelth"

[16] "florida" "refus" "media" "compli" "weakest"

[1] "asa" "commit" "disciplin" "centuri"

[5] "polici" "rival" "invalu" "infrastructur"

[9] "opportun" "statistician" "vital" "medicin"

[13] "fund" "capit" "poverti" "action"

[17] "speak" "text" "guess" "war"

[1] "markov" "chain" "mcmc" "mont" "carlo" "hidden"

[7] "posterior" "revers" "sampler" "jump" "updat" "gibb"

[13] "prior" "bayesian" "ergod" "algorithm" "dirichlet" "hierarch"

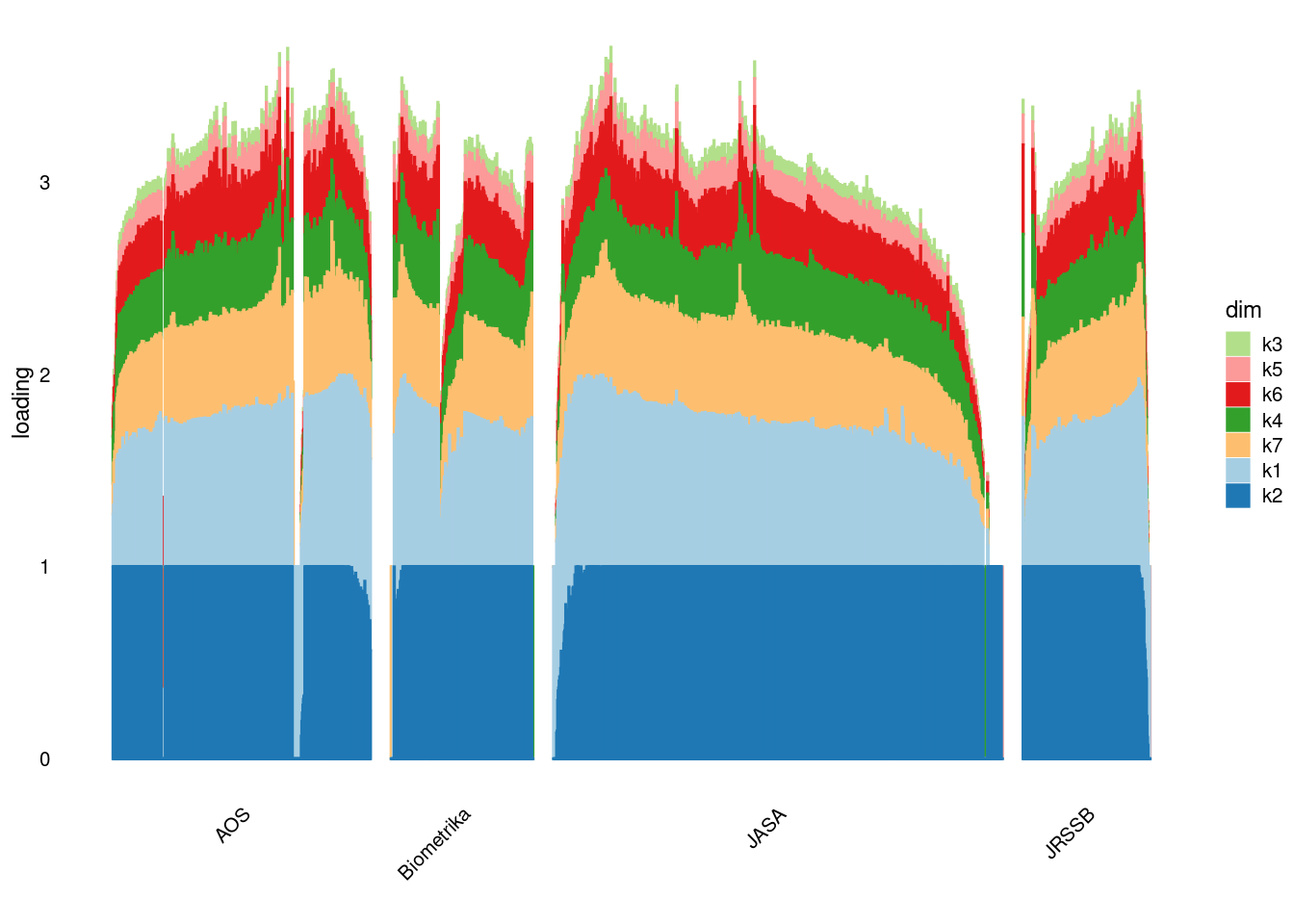

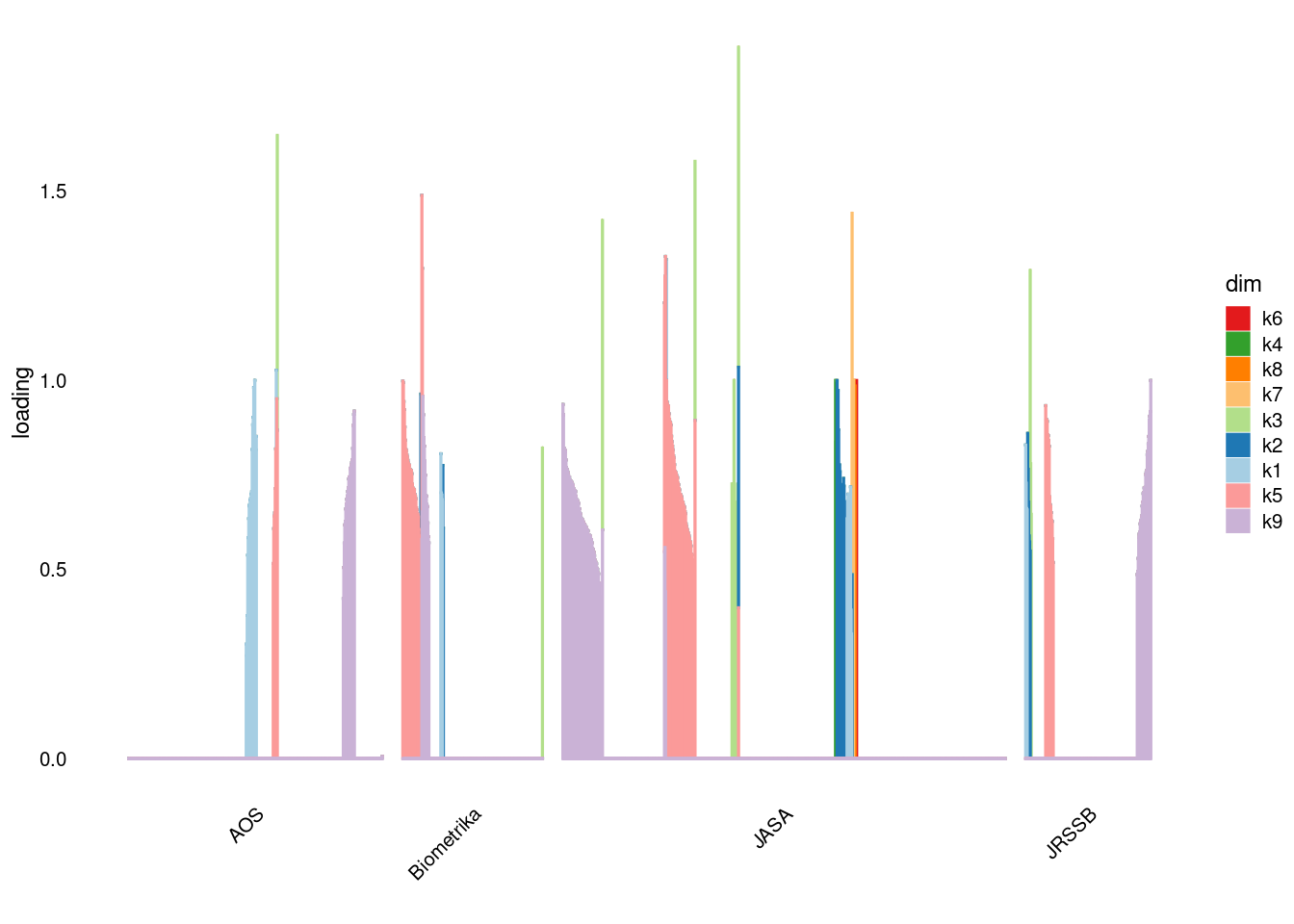

[19] "transit" "parallel" p1=structure_plot_general(fit_ebpmf3$fit_flash$L_pm[,-c(1)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'sum_to_1')Running tsne on 508 x 10 matrix.Running tsne on 280 x 10 matrix.Running tsne on 885 x 10 matrix.Running tsne on 251 x 10 matrix.

p2=structure_plot_general(fit_ebpmf3$fit_flash$L_pm[,-c(1,2)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'row_max_1')

Running tsne on 508 x 9 matrix.

Running tsne on 280 x 9 matrix.

Running tsne on 885 x 9 matrix.

Running tsne on 251 x 9 matrix.

p3=structure_plot_general(fit_ebpmf3$fit_flash$L_pm[,-c(1,2)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'col_norm_1')

Running tsne on 508 x 9 matrix.

Running tsne on 280 x 9 matrix.

Running tsne on 885 x 9 matrix.

Running tsne on 251 x 9 matrix.

p4=structure_plot_general(fit_ebpmf3$fit_flash$L_pm[,-c(1,2)],fit_flash$F_pm,grouping = samples$journal,std_L_method = 'col_max_1')

Running tsne on 508 x 9 matrix.

Running tsne on 280 x 9 matrix.

Running tsne on 885 x 9 matrix.

Running tsne on 251 x 9 matrix.
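The three initializations end with different numbers of factors and different ELBOs. A common heuristic is to keep the run with the highest final ELBO. `best_fit_by_elbo` below is a hypothetical helper. The toy inputs reuse the first and last average ELBO values printed in the logs above, at iterations 10 and 90, 280 and 150 respectively. These are not necessarily the final values stored in each fit's `elbo_trace`.

```r
# Hypothetical helper: report each fit's final ELBO and the name of the best one.
# Each fit is assumed to carry a numeric `elbo_trace`, as the
# plot(fit$elbo_trace) calls above suggest.
best_fit_by_elbo <- function(fits) {
  final <- vapply(fits, function(f) tail(f$elbo_trace, 1), numeric(1))
  list(final_elbo = final, best = names(fits)[which.max(final)])
}

# Toy stand-ins built from the printed average ELBOs (first and last logged)
fits <- list(init1 = list(elbo_trace = c(-0.09779, -0.09437)),
             init2 = list(elbo_trace = c(-0.11589, -0.09584)),
             init3 = list(elbo_trace = c(-0.10097, -0.09544)))
res <- best_fit_by_elbo(fits)
print(res$final_elbo)
print(res$best)
```

With the saved objects, the call would be `best_fit_by_elbo(list(init1 = fit_ebpmf1, init2 = fit_ebpmf2, init3 = fit_ebpmf3))`. A higher ELBO does not by itself guarantee more interpretable factors, so the top-term lists above are still worth comparing directly.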

sessionInfo()

R version 4.1.0 (2021-05-18)

Platform: x86_64-pc-linux-gnu (64-bit)

Running under: CentOS Linux 7 (Core)

Matrix products: default

BLAS: /software/R-4.1.0-no-openblas-el7-x86_64/lib64/R/lib/libRblas.so

LAPACK: /software/R-4.1.0-no-openblas-el7-x86_64/lib64/R/lib/libRlapack.so

locale:

[1] LC_CTYPE=en_US.UTF-8 LC_NUMERIC=C LC_TIME=C

[4] LC_COLLATE=C LC_MONETARY=C LC_MESSAGES=C

[7] LC_PAPER=C LC_NAME=C LC_ADDRESS=C

[10] LC_TELEPHONE=C LC_MEASUREMENT=C LC_IDENTIFICATION=C

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] gridExtra_2.3 ggplot2_3.4.1 ebpmf_2.3.1 flashier_0.2.51

[5] ebnm_1.0-54 magrittr_2.0.3 fastTopics_0.6-142 Matrix_1.5-3

[9] workflowr_1.6.2

loaded via a namespace (and not attached):

[1] Rtsne_0.16 ebpm_0.0.1.3 colorspace_2.1-0

[4] smashr_1.3-6 ellipsis_0.3.2 mr.ash_0.1-87

[7] rprojroot_2.0.2 fs_1.5.0 rstudioapi_0.13

[10] farver_2.1.1 MatrixModels_0.5-1 ggrepel_0.9.3

[13] fansi_1.0.4 mvtnorm_1.1-2 codetools_0.2-18

[16] splines_4.1.0 cachem_1.0.5 knitr_1.33

[19] jsonlite_1.8.4 nloptr_1.2.2.2 mcmc_0.9-7

[22] ashr_2.2-54 smashrgen_1.2.4 uwot_0.1.14

[25] compiler_4.1.0 httr_1.4.5 RcppZiggurat_0.1.6

[28] fastmap_1.1.0 lazyeval_0.2.2 cli_3.6.1

[31] later_1.3.0 htmltools_0.5.4 quantreg_5.94

[34] prettyunits_1.1.1 tools_4.1.0 coda_0.19-4

[37] gtable_0.3.1 glue_1.6.2 dplyr_1.1.0

[40] Rcpp_1.0.10 softImpute_1.4-1 jquerylib_0.1.4

[43] vctrs_0.6.2 iterators_1.0.13 wavethresh_4.7.2

[46] xfun_0.24 stringr_1.5.0 trust_0.1-8

[49] lifecycle_1.0.3 irlba_2.3.5.1 MASS_7.3-54

[52] scales_1.2.1 hms_1.1.2 promises_1.2.0.1

[55] parallel_4.1.0 SparseM_1.81 yaml_2.3.7

[58] pbapply_1.7-0 sass_0.4.0 stringi_1.6.2

[61] SQUAREM_2021.1 highr_0.9 deconvolveR_1.2-1

[64] foreach_1.5.1 caTools_1.18.2 truncnorm_1.0-8

[67] shape_1.4.6 horseshoe_0.2.0 rlang_1.1.1

[70] pkgconfig_2.0.3 matrixStats_0.59.0 bitops_1.0-7

[73] evaluate_0.14 lattice_0.20-44 invgamma_1.1

[76] purrr_1.0.1 htmlwidgets_1.6.1 labeling_0.4.2

[79] Rfast_2.0.7 cowplot_1.1.1 tidyselect_1.2.0

[82] R6_2.5.1 generics_0.1.3 pillar_1.8.1

[85] whisker_0.4 withr_2.5.0 survival_3.2-11

[88] mixsqp_0.3-48 tibble_3.2.1 crayon_1.5.2

[91] utf8_1.2.3 plotly_4.10.1 rmarkdown_2.9

[94] progress_1.2.2 grid_4.1.0 data.table_1.14.8

[97] git2r_0.28.0 digest_0.6.31 vebpm_0.4.8

[100] tidyr_1.3.0 httpuv_1.6.1 MCMCpack_1.6-3

[103] RcppParallel_5.1.7 munsell_0.5.0 glmnet_4.1-2

[106] viridisLite_0.4.1 bslib_0.4.2 quadprog_1.5-8