Disparities in the probability of finding a relative via long-range familial search

Junhui He

2025-01-29 21:27:00

Last updated: 2025-01-29

Knit directory: PODFRIDGE/

This reproducible R Markdown analysis was created with workflowr (version 1.7.1).

The command set.seed(20230302) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

The results in this page were generated with repository version 0faff2d.

The table below lists the previous versions of the repository in which changes were made to the R Markdown (analysis/probability_disparity.Rmd) and HTML (docs/probability_disparity.html) files.

| File | Version | Author | Date | Message |
|---|---|---|---|---|
| Rmd | 0faff2d | Junhui He | 2025-01-29 | update probability disparity |
| Rmd | a69816f | Junhui He | 2025-01-29 | upload data for probability disparity |
| html | 1731b7f | Junhui He | 2025-01-22 | Build site. |
| Rmd | 90a3d7c | Junhui He | 2025-01-22 | wflow_publish("analysis/probability_disparity.Rmd") |
| html | 8c86890 | Junhui He | 2025-01-17 | Build site. |
| Rmd | 64c4f90 | Junhui He | 2025-01-17 | create a report for Result 3 |

1 Objective

We aim to determine the difference between Black and White Americans in the probability of finding a match in a direct-to-consumer (DTC) genetic database. This analysis integrates family size distributions (from Result 2) and database representation disparities (from Result 1B), and considers the proportion of DTC databases accessible to law enforcement.

We establish a theoretical binomial model \(\text{Bin}(K,p)\) to calculate the probability of finding a match in a database, where \(K\) is the size of the database and \(p\) is the match probability between a target and another individual. The match probability \(p\) is calculated based on the population size, the database size, racial representation disparities, and the family size distribution.

2 Model assumptions

In the current generation, the population size is \(N\), and the proportion of the population belonging to the race under consideration is \(\alpha\).

The database has \(K\) individuals, and the proportion of the database belonging to that race is \(\beta\). The database is randomly sampled from the current population. Both the population proportion \(\alpha\) and the database proportion \(\beta\) are treated as fixed constants.

The family trees of different races are strictly separated. For instance, the ancestors and descendants of Black Americans are also Black Americans.

We assume that there is no inter-marriage in the family involved, except for the known ancestor couple of the target.

The number of children per couple at generation \(g\) before the present is \(r_g\), which is assumed to follow a multinomial distribution. The distribution of \(r_1\) is estimated from the number of children born to women aged 40-49 in 1990, that of \(r_2\) from the number of children born to women aged 40-49 in 1960, and that of \(r_3\) from the number of children born to women aged 70+ in 1960. For generations \(g > 3\), \(r_g\) is assumed to follow the same distribution as \(r_3\). Additionally, if a specific couple is known to be ancestors of the target, they are assumed to have at least one child.
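The family-size assumption can be sketched in R as drawing from a categorical distribution over child counts, optionally conditioned on at least one child for a known ancestral couple. This is a minimal sketch: the function name `sample_children` and the probabilities below are hypothetical placeholders, not the distributions estimated in this analysis.

```r
# Draw family sizes from a categorical distribution over child counts.
# `counts` and `probs` are hypothetical; the report estimates them from census data.
sample_children <- function(n, counts, probs, at_least_one = FALSE) {
  if (at_least_one) {
    # Condition on r >= 1 by dropping the zero-child category and renormalising
    keep   <- counts >= 1
    counts <- counts[keep]
    probs  <- probs[keep] / sum(probs[keep])
  }
  # Index-based sampling avoids the special case of sample() on a length-1 vector
  counts[sample.int(length(counts), size = n, replace = TRUE, prob = probs)]
}

set.seed(1)
child_counts <- 0:4
child_probs  <- c(0.15, 0.20, 0.35, 0.20, 0.10)  # hypothetical values

r_any      <- sample_children(1000, child_counts, child_probs)
r_ancestor <- sample_children(1000, child_counts, child_probs, at_least_one = TRUE)
```

Here `r_ancestor` never contains zero, which mirrors the assumption that a known ancestral couple has at least one child.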

Individuals are diploid and we consider only the autosomal genome.

The genome of the target individual is compared to those of all individuals in the database, and identical-by-descent (IBD) segments are identified. We assume that detectable segments must be of length \(\geq m\) (in Morgans). We further assume that in order to confidently detect the relationship (a “match”), we must observe at least \(s\) such segments.

We only consider relationships for which the common ancestors have lived \(g\leq g_{\max}\) generations ago. For example, \(g=1\) for siblings, \(g=2\) for first cousins, etc. All cousins/siblings are full.

We only consider regular cousins, excluding once removed and so on.

The number of matches between the target and the individuals in the database is counted. If there are at least \(t\) matches, we declare that there is sufficient information to trace back the target. Typically, we simply assume \(t=1\).

3 Derivation

3.1 The probability of sharing an ancestral couple

Consider the cousins of the target. \(g\) generations before the present, the target has \(2^{g-1}\) ancestral couples. For example, each individual has one pair of parents (\(g=1\)), two pairs of grandparents (\(g=2\)), four pairs of great-grandparents (\(g=3\)) and so on. Each ancestral couple contributes to \((r_g-1) \prod_{i=1}^{g-1} r_i\) of the \((g-1)\)-th cousins of the target. For example, consider a pair of grandparents (\(g=2\)), each individual has \((r_2-1)\) uncles/aunts, and hence \((r_2-1)r_1\) first cousins. Note that \(r_g \geq 1\). Therefore, the total number of the \((g-1)\)-th cousins is given by

\[\text{The number of $(g-1)$-th cousins}=2^{g-1}(r_g-1) \prod_{i=1}^{g-1} r_i.\]
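The cousin count can be checked with a short R sketch. The helper name `n_cousins` and the family sizes used are illustrative assumptions only.

```r
# Number of (g-1)-th cousins of the target, given family sizes r[1], ..., r[g]:
# 2^(g-1) ancestral couples, each contributing (r_g - 1) * prod(r_1, ..., r_{g-1}).
n_cousins <- function(r, g) {
  stopifnot(g >= 1, length(r) >= g)
  # prod() of an empty vector is 1, which handles the sibling case g = 1
  2^(g - 1) * (r[g] - 1) * prod(r[seq_len(g - 1)])
}

r <- c(3, 3, 3)            # hypothetical: three children per couple in every generation
siblings       <- n_cousins(r, 1)  # 1 * 2 * 1 = 2
first_cousins  <- n_cousins(r, 2)  # 2 * 2 * 3 = 12
second_cousins <- n_cousins(r, 3)  # 4 * 2 * 9 = 72
```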

Under the assumptions of separated family trees and a randomly sampled database, and given the family size, the probability that the target and a database individual of the same race first share an ancestral couple at generation \(g\) is approximately:

\[P(\text{first sharing a mating pair at $g$ for a certain race}|r)=\frac{2^{g-1}(r_g-1) \prod_{i=1}^{g-1} r_i}{\alpha N}.\]
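As a worked illustration with hypothetical numbers (not values from this analysis), take \(r_1=r_2=3\) and \(\alpha N=10^6\). For first cousins (\(g=2\)), the formula gives

\[\frac{2^{1}(r_2-1)\,r_1}{\alpha N}=\frac{2\cdot 2\cdot 3}{10^{6}}=1.2\times 10^{-5}.\]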

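3.3 The match probability between a target and an individual from the same race

Let \(P(\text{match}|g)\) denote the probability of declaring a match (at least \(s\) detectable IBD segments) between two individuals whose most recent common ancestors lived \(g\) generations ago. Given the family size, the probability of declaring a match between the target and a random individual in the database is the sum over all \(g\) of \(P(\text{match}|g)\) multiplied by the probability of first sharing an ancestral couple at generation \(g\),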

\[ P(\text{match}|r)=\sum_{g=1}^{g_{\max}} P(\text{match}|g) \frac{2^{g-1}(r_g-1) \prod_{i=1}^{g-1} r_i}{\alpha N}. \]
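The sum above might be computed as follows. This is a sketch under stated assumptions: the values of \(P(\text{match}|g)\) are not given in this section, so `p_match_g` is passed in as a hypothetical vector, and the function name `match_prob` is illustrative.

```r
# P(match | r): weighted sum over generations g = 1, ..., g_max.
# r          : family sizes r_1, ..., r_gmax (numeric vector)
# p_match_g  : P(match | g) for g = 1, ..., g_max (hypothetical input here)
# alpha, N   : racial proportion of the population and population size
match_prob <- function(r, p_match_g, alpha, N) {
  stopifnot(length(r) >= length(p_match_g))
  g <- seq_along(p_match_g)
  # cumprod(c(1, r))[g] equals prod(r_1, ..., r_{g-1}), with 1 for g = 1
  cousins <- 2^(g - 1) * (r[g] - 1) * cumprod(c(1, r))[g]
  sum(p_match_g * cousins) / (alpha * N)
}

# Hypothetical example: two children per couple, g_max = 3, population of 1000
p_example <- match_prob(r = c(2, 2, 2), p_match_g = c(1, 1, 0.5),
                        alpha = 1, N = 1000)
# cousins are 1, 4, 16, so the sum is (1 + 4 + 8) / 1000 = 0.013
```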

The number of matches to a database is assumed to follow a binomial distribution defined as

\[ \text{Bin}(\beta K, P(\text{match}|r)) .\]

To identify an individual, we need to find at least \(t\) matches in the database. Thus, given the family size,

\[P(\text{identify}|r)=1-\sum_{k=0}^{t-1} \text{Bin}(k;\beta K, P(\text{match}|r)).\]
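The probability of finding at least \(t\) matches is the upper tail of the binomial distribution, which base R exposes through `pbinom` with `lower.tail = FALSE`. The wrapper name `identify_prob` and the rounding of \(\beta K\) to an integer are assumptions made for this sketch.

```r
# P(identify | r) = P(X >= t), where X ~ Bin(beta * K, p_match).
identify_prob <- function(p_match, beta, K, t = 1) {
  n <- round(beta * K)  # assumption: round beta * K to a whole number of individuals
  # pbinom(q, lower.tail = FALSE) returns P(X > q), so q = t - 1 gives P(X >= t)
  pbinom(t - 1, size = n, prob = p_match, lower.tail = FALSE)
}

# With t = 1 this reduces to 1 - (1 - p)^n
p_one_match <- identify_prob(p_match = 0.01, beta = 1, K = 100, t = 1)
```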

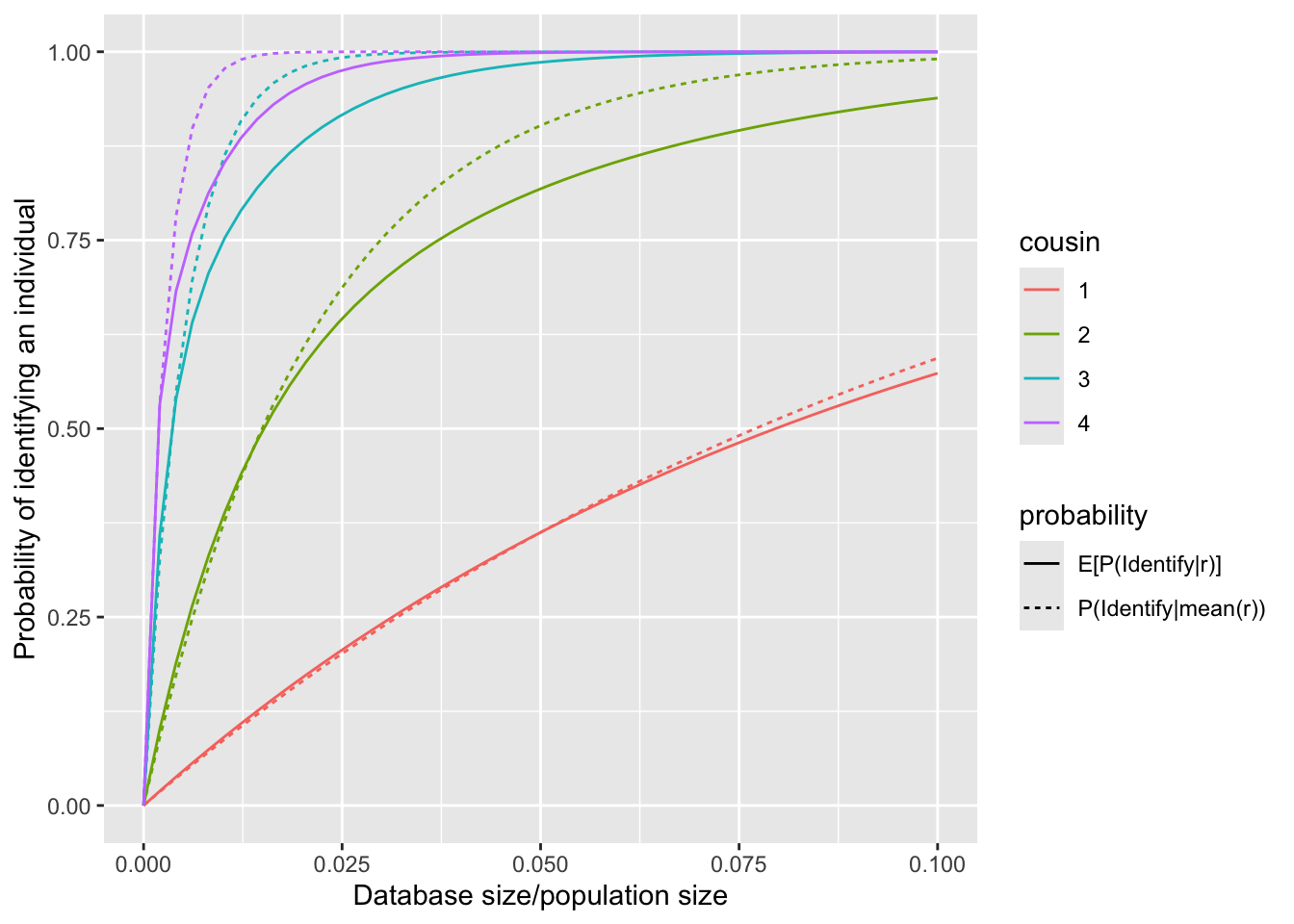

We utilize Monte Carlo methods to calculate the mean probability of identifying an individual over family size, i.e., \(E[P(\text{identify}|r)]\).
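A Monte Carlo estimate of this kind can be sketched in a few lines of R. Everything numeric below is a hypothetical placeholder (the family-size distribution, `p_match_g`, `N`, `K`), and drawing every \(r_g\) from one distribution restricted to at least one child is a simplification, not necessarily the procedure used for the results in this report.

```r
set.seed(20230302)  # the seed value quoted in this report; any seed would do

# Hypothetical inputs
child_counts <- 1:4
child_probs  <- c(0.2, 0.4, 0.3, 0.1)
p_match_g    <- c(1, 1, 0.98, 0.7)   # placeholder values of P(match | g)
alpha <- 1; beta <- 1; N <- 1e6; K <- 1e4; t <- 1

# P(identify | r) for one vector of family sizes r_1, ..., r_gmax
identify_given_r <- function(r) {
  g <- seq_along(p_match_g)
  cousins <- 2^(g - 1) * (r[g] - 1) * cumprod(c(1, r))[g]
  p <- sum(p_match_g * cousins) / (alpha * N)
  pbinom(t - 1, size = round(beta * K), prob = p, lower.tail = FALSE)
}

# Monte Carlo: draw r repeatedly and average P(identify | r)
n_draws <- 5000
draws <- replicate(n_draws, {
  idx <- sample.int(length(child_counts), size = length(p_match_g),
                    replace = TRUE, prob = child_probs)
  identify_given_r(child_counts[idx])
})
mc_mean <- mean(draws)

# Plug-in comparison: evaluate at the mean family size instead of averaging
r_bar  <- sum(child_counts * child_probs)
pseudo <- identify_given_r(rep(r_bar, length(p_match_g)))
```

The two quantities `mc_mean` and `pseudo` correspond to \(E[P(\text{identify}|r)]\) and \(P(\text{identify}|\bar{r})\), respectively.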

Additionally, since \(r\) is a random variable, we calculate \(P(\text{identify}|\bar{r})\) for comparison with \(E[P(\text{identify}|r)]\), where \(\bar{r}\) represents the mean number of children. This is the probability used by Erlich et al. (2018), with \(\bar{r}\) in place of their constant \(r\).

4 Experimental results

4.1 Identifying probabilities considering the family size

In this subsection, it is assumed that the population and database consist of a single race. Ratios \(\alpha\) and \(\beta\) are set to \(1\) in the calculation of identifying probabilities. These probabilities are utilized to explore the influence of disparities in the family size of different races, without considering the racial representation.

Figure 1. Probabilities of identifying an individual, accounting for family size, up to a given degree of cousinship from a pure (single-race) database. The identifying probability is \(E[P(\text{identify}|r)]\) and the pseudo probability is \(P(\text{identify}|\bar{r})\). The family size distributions are estimated using either a multinomial distribution or a zero-inflated model.

From left to right, the panels in Figure 1 are:

Identifying probability using multinomial distributions

Identifying probability using zero inflation models

Pseudo identifying probability

4.2 Identifying probabilities considering the family size and racial representation

In this subsection, it is assumed that the population and database consist of multiple races, including Black and White Americans. Ratios \(\alpha\) and \(\beta\) correspond to racial proportions in the population and the database, respectively. We calculate the probabilities of identifying an individual by treating \(\alpha\) and \(\beta\) as fixed constants. This assumption is reasonable when the database size is much smaller than the population size.

Figure 2. Probabilities of identifying an individual, accounting for family size, up to a given degree of cousinship from a mixed (multi-race) database. The identifying probability is \(E[P(\text{identify}|r)]\) and the pseudo probability is \(P(\text{identify}|\bar{r})\). The family size distributions are estimated using either a multinomial distribution or a zero-inflated model.

From left to right, the panels in Figure 2 are:

Identifying probability using multinomial distributions

Identifying probability using zero inflation models

Pseudo identifying probability

4.3 Conclusions

Considering only the family size, the identification probabilities \(E[P(\text{identify}|r)]\) for Black and White Americans are close to each other (see Figure 1 in Section 4.1), while the pseudo identification probabilities \(P(\text{identify}|\bar{r})\) for Black Americans are significantly higher than those for White Americans (see Figure 2 in Section 4.1).

Considering the family size and racial representation, the identification probability for White Americans is significantly higher than that for Black Americans (see Figures 1 and 2 in Section 4.2), primarily due to disparities in database representation.

The value of \(P(\text{identify}|\bar{r})\) is notably higher than \(E[P(\text{identify}|r)]\), highlighting the importance of considering family size as a complete distribution in the analysis.

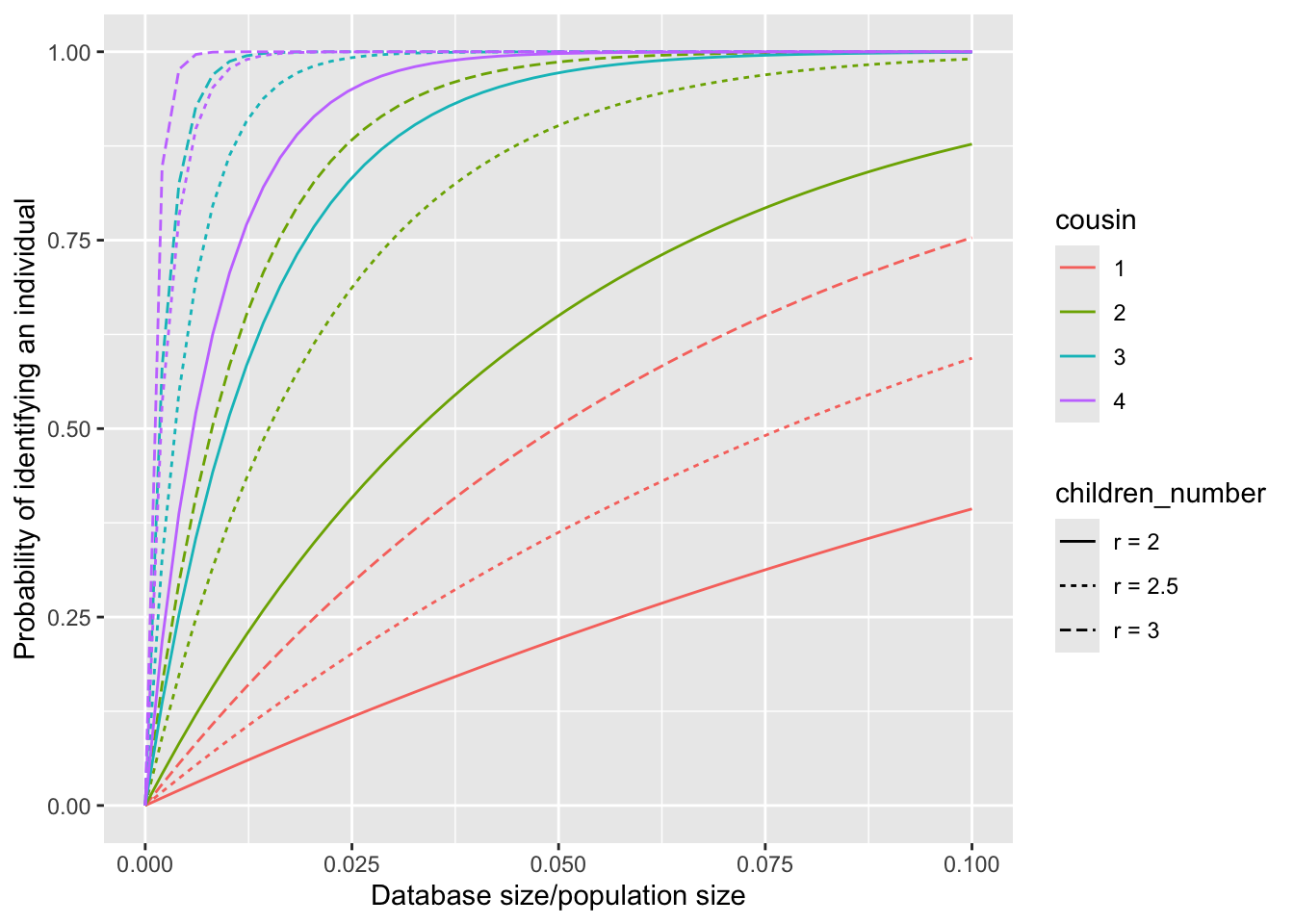

5 A toy example for distinguishing \(E[P(\text{identify}|r)]\) and \(P(\text{identify}|\bar{r})\)

To illustrate how the full distribution of the number of children affects the identification probability, we assume that \(r_g=r_0\) for all \(g\geq 1\), and compute the probability \(P(\text{identify}|r_0)\) for various constant values of \(r_0\). Specifically, we consider the scenarios where \(r_0\) is set to \(2\), \(2.5\) and \(3\).

Furthermore, we consider a random family size \(r\) with the following distribution: \[P(r=2)=P(r=3)=0.5.\] This implies that half of the couples have 2 children, while the other half have 3 children. The mean family size, denoted by \(\bar{r}\), is \(2.5\). Consequently, we obtain the following relationships: \[E[P(\text{identify}|r)] = 0.5\,P(\text{identify}|2) + 0.5\,P(\text{identify}|3),\] \[P(\text{identify}|\bar{r}) = P(\text{identify}|2.5).\]

This figure shows that \(P(\text{identify}|\bar{r})>E[P(\text{identify}|r)]\), with the difference becoming more pronounced as the degree of cousinship increases. The underlying mathematical principle responsible for this relationship is Jensen’s Inequality.
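The toy comparison can be reproduced numerically with a small R sketch. The values of `p_match_g`, `N` and `K` below are hypothetical placeholders chosen only for illustration (a single-race database covering 1% of the population), so the resulting numbers are not those plotted in this report, and other choices of database size may behave differently.

```r
# Hypothetical inputs for the toy example (alpha = beta = 1)
p_match_g <- c(1, 1, 0.98, 0.7)   # placeholder values of P(match | g), g = 1..4
N <- 1e6; K <- 1e4; t <- 1

# P(identify | r_0) when every couple has exactly r_0 children
identify_const <- function(r0) {
  g <- seq_along(p_match_g)
  cousins <- 2^(g - 1) * (r0 - 1) * r0^(g - 1)
  p <- sum(p_match_g * cousins) / N
  pbinom(t - 1, size = K, prob = p, lower.tail = FALSE)
}

# E[P(identify | r)] with P(r = 2) = P(r = 3) = 0.5
expected_prob <- 0.5 * identify_const(2) + 0.5 * identify_const(3)

# P(identify | r_bar) with r_bar = 2.5
pseudo_prob <- identify_const(2.5)

pseudo_prob > expected_prob   # TRUE for these assumed inputs
```

With these assumed inputs the plug-in value is roughly 0.83 against an average of roughly 0.73, which matches the ordering described above.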

R version 4.4.2 (2024-10-31)

Platform: aarch64-apple-darwin20

Running under: macOS Sequoia 15.2

Matrix products: default

BLAS: /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/lib/libRblas.0.dylib

LAPACK: /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/lib/libRlapack.dylib; LAPACK version 3.12.0

locale:

[1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

time zone: America/Detroit

tzcode source: internal

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] ggpubr_0.6.0 ggplot2_3.5.1 workflowr_1.7.1

loaded via a namespace (and not attached):

[1] tidyr_1.3.1 sass_0.4.9 utf8_1.2.4 generics_0.1.3

[5] rstatix_0.7.2 stringi_1.8.4 digest_0.6.37 magrittr_2.0.3

[9] evaluate_1.0.1 grid_4.4.2 fastmap_1.2.0 rprojroot_2.0.4

[13] jsonlite_1.8.9 processx_3.8.4 whisker_0.4.1 backports_1.5.0

[17] Formula_1.2-5 gridExtra_2.3 ps_1.8.1 promises_1.3.2

[21] httr_1.4.7 purrr_1.0.2 fansi_1.0.6 scales_1.3.0

[25] jquerylib_0.1.4 abind_1.4-8 cli_3.6.3 rlang_1.1.4

[29] cowplot_1.1.3 munsell_0.5.1 withr_3.0.2 cachem_1.1.0

[33] yaml_2.3.10 tools_4.4.2 ggsignif_0.6.4 dplyr_1.1.4

[37] colorspace_2.1-1 httpuv_1.6.15 broom_1.0.7 vctrs_0.6.5

[41] R6_2.5.1 lifecycle_1.0.4 git2r_0.35.0 stringr_1.5.1

[45] car_3.1-3 fs_1.6.5 pkgconfig_2.0.3 callr_3.7.6

[49] pillar_1.9.0 bslib_0.8.0 later_1.4.1 gtable_0.3.6

[53] glue_1.8.0 Rcpp_1.0.13-1 xfun_0.49 tibble_3.2.1

[57] tidyselect_1.2.1 rstudioapi_0.17.1 knitr_1.49 farver_2.1.2

[61] htmltools_0.5.8.1 labeling_0.4.3 carData_3.0-5 rmarkdown_2.29

[65] compiler_4.4.2 getPass_0.2-4