Pilot Study: Confirmatory Factor Analysis

R. Noah Padgett

2020-11-16

Last updated: 2021-02-18

Checks: 6 passed, 1 warning

Knit directory: pools-projects/

This reproducible R Markdown analysis was created with workflowr (version 1.6.2). The Checks tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

The R Markdown is untracked by Git. To know which version of the R Markdown file created these results, you’ll want to first commit it to the Git repo. If you’re still working on the analysis, you can ignore this warning. When you’re finished, you can run wflow_publish to commit the R Markdown file and build the HTML.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproducibility it's best to always run the code in an empty environment.

The command set.seed(20201007) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great job! Using relative paths to the files within your workflowr project makes it easier to run your code on other machines.

Great! You are using Git for version control. Tracking code development and connecting the code version to the results is critical for reproducibility.

The results in this page were generated with repository version ea0a6a6. See the Past versions tab to see a history of the changes made to the R Markdown and HTML files.

Note that you need to be careful to ensure that all relevant files for the analysis have been committed to Git prior to generating the results (you can use wflow_publish or wflow_git_commit). workflowr only checks the R Markdown file, but you know if there are other scripts or data files that it depends on. Below is the status of the Git repository when the results were generated:

Ignored files:

Ignored: .Rhistory

Ignored: .Rproj.user/

Untracked files:

Untracked: analysis/development-study-CFA-invariance-test.Rmd

Untracked: analysis/development-study-CFA.Rmd

Untracked: analysis/development-study-EFA.Rmd

Untracked: analysis/development-study-data-management.Rmd

Untracked: analysis/figure/

Untracked: analysis/study-1-power-calculation.Rmd

Untracked: code/laplace_functions.R

Untracked: code/pdf2png.R

Untracked: code/utility_functions.R

Untracked: data/efa_results_2021_01_06.csv

Untracked: data/fit-test.RData

Untracked: data/savedlocalfit.RData

Untracked: diagrams/

Untracked: item-review-2/expert-review-2-response1.pdf

Untracked: item-review-2/expert-review-2-response2.pdf

Untracked: item-review-2/expert-review-2-response3.pdf

Untracked: item-review-2/pilot-data-item-review.xlsx

Untracked: manuscript/

Untracked: output/cfa-final-parameterEstimates.csv

Untracked: output/cfa_results.csv

Untracked: output/corr-plot.pdf

Untracked: output/corr-residuals.pdf

Unstaged changes:

Modified: .Rprofile

Deleted: .gitattributes

Modified: .gitignore

Modified: analysis/index.Rmd

Deleted: analysis/pilot-study-CFA.Rmd

Deleted: analysis/pilot-study-EFA.Rmd

Deleted: analysis/pilot-study-data-management.Rmd

Deleted: analysis/pilot-study-power-calculation.Rmd

Modified: code/load_packages.R

Modified: data/data-2020-11-16/pools_data_split1_2020_11_16.txt

Modified: data/data-2020-11-16/pools_data_split2_2020_11_16.txt

Modified: item-review-1/response8_nov6.pdf

Modified: item-review-2/Overview of Expert Review v2.0 Results.docx

Note that any generated files, e.g. HTML, png, CSS, etc., are not included in this status report because it is ok for generated content to have uncommitted changes.

There are no past versions. Publish this analysis with wflow_publish() to start tracking its development.

Data

source("code/load_packages.R")
-- Attaching packages --------------------------------------- tidyverse 1.3.0 --
v ggplot2 3.3.3     v purrr   0.3.4
v tibble  3.0.5     v dplyr   1.0.3
v tidyr   1.1.2     v stringr 1.4.0
v readr   1.4.0     v forcats 0.5.0
-- Conflicts ------------------------------------------ tidyverse_conflicts() --
x dplyr::filter() masks stats::filter()
x dplyr::lag()    masks stats::lag()

Attaching package: 'data.table'
The following objects are masked from 'package:dplyr':
    between, first, last
The following object is masked from 'package:purrr':
    transpose
This is lavaan 0.6-7
lavaan is BETA software! Please report any bugs.
###############################################################################
This is semTools 0.5-4
All users of R (or SEM) are invited to submit functions or ideas for functions.
###############################################################################

Attaching package: 'semTools'
The following object is masked from 'package:readr':
    clipboard
This is MIIVsem 0.5.5
MIIVsem is BETA software! Please report any bugs.
#################################################################
This is simsem 0.5-15
simsem is BETA software! Please report any bugs.
simsem was first developed at the University of Kansas Center for
Research Methods and Data Analysis, under NSF Grant 1053160.
#################################################################

Attaching package: 'simsem'
The following object is masked from 'package:lavaan':
    inspect
Loading required package: multilevel
Loading required package: nlme

Attaching package: 'nlme'
The following object is masked from 'package:dplyr':
    collapse
Loading required package: MASS

Attaching package: 'MASS'
The following object is masked from 'package:patchwork':
    area
The following object is masked from 'package:dplyr':
    select

Attaching package: 'psychometric'
The following object is masked from 'package:ggplot2':
    alpha

Attaching package: 'psych'
The following object is masked from 'package:psychometric':
    alpha
The following object is masked from 'package:simsem':
    sim
The following object is masked from 'package:semTools':
    skew
The following object is masked from 'package:lavaan':
    cor2cov
The following objects are masked from 'package:ggplot2':
    %+%, alpha
Loading required package: lattice

Attaching package: 'nFactors'
The following object is masked from 'package:lattice':
    parallel

Attaching package: 'kableExtra'
The following object is masked from 'package:dplyr':
    group_rows

options(digits = 3, max.print = 10000)

mydata <- read.table("data/data-2020-11-16/pools_data_split2_2020_11_16.txt", sep = "\t", header = TRUE)

# recenter the 1-5 item responses (columns 7-63) onto a -2 to 2 scale
mydata[, 7:63] <- mydata[, 7:63] - 3

Data Summary
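Two details of the summary table below can be illustrated with a few made-up responses (not study data). Subtracting 3 maps a 1-5 response scale onto -2 to 2, which assumes the raw items were scored 1 to 5 (consistent with the min and max of -2 and 2 in the table). The `mad` column appears to be base R's `mad()`, which multiplies the median absolute deviation by 1.4826 by default; on integer-valued responses that is why only a few values such as 0, 0.74 and 1.48 show up.

```r
# Hypothetical responses on a 1-5 scale (illustration only, not study data)
raw <- c(1, 2, 2, 3, 3, 4, 5)
centered <- raw - 3            # same shift as the x - 3 recentering above
range(centered)

# mad() rescales the median absolute deviation by 1.4826 by default,
# so integer-valued items can only produce a small set of values
mad(centered)                  # median 0, median |x - 0| is 1
mad(c(-2, -2, -1, -1))         # median -1.5, every deviation is 0.5
mad(c(-1, -1, -1, 0, 2))       # median -1, median deviation is 0
```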

use.var <- c(paste0("Q4_", c(1:5, 8:11, 15:18)),      # 13 items -> y1-y13
             paste0("Q5_", c(1:6, 8, 12)),            #  8 items -> y14-y21
             paste0("Q6_", c(1:8, 11)),               #  9 items -> y22-y30
             paste0("Q7_", c(2, 4:5, 7:8, 12:14)))    #  8 items -> y31-y38

psych::describe(mydata[, use.var])

vars n mean sd median trimmed mad min max range skew kurtosis se

Q4_1 1 312 -0.62 0.85 -1.0 -0.63 1.48 -2 2 4 0.28 0.06 0.05

Q4_2 2 312 -0.80 0.78 -1.0 -0.82 0.00 -2 2 4 0.64 1.07 0.04

Q4_3 3 312 -0.54 0.85 -1.0 -0.54 1.48 -2 2 4 0.35 0.47 0.05

Q4_4 4 312 -0.47 0.85 0.0 -0.46 1.48 -2 2 4 -0.01 0.12 0.05

Q4_5 5 312 -0.75 0.87 -1.0 -0.81 1.48 -2 2 4 0.61 0.32 0.05

Q4_8 6 312 -0.81 0.85 -1.0 -0.86 1.48 -2 2 4 0.50 0.16 0.05

Q4_9 7 312 -0.61 0.97 -1.0 -0.66 1.48 -2 2 4 0.45 -0.29 0.05

Q4_10 8 312 -0.46 0.80 0.0 -0.41 0.00 -2 2 4 -0.19 0.30 0.05

Q4_11 9 312 -0.52 0.95 -1.0 -0.56 1.48 -2 2 4 0.26 -0.26 0.05

Q4_15 10 312 -0.71 0.88 -1.0 -0.75 1.48 -2 2 4 0.34 -0.32 0.05

Q4_16 11 312 -0.67 0.93 -1.0 -0.72 1.48 -2 2 4 0.23 -0.50 0.05

Q4_17 12 312 -0.90 0.93 -1.0 -0.99 1.48 -2 2 4 0.53 -0.47 0.05

Q4_18 13 312 -0.72 0.79 -1.0 -0.74 0.00 -2 2 4 0.49 0.33 0.04

Q5_1 14 312 -0.47 0.95 -1.0 -0.49 1.48 -2 2 4 0.19 -0.49 0.05

Q5_2 15 312 -0.04 1.01 0.0 0.01 1.48 -2 2 4 -0.20 -0.49 0.06

Q5_3 16 312 -0.41 1.04 0.0 -0.43 1.48 -2 2 4 0.26 -0.52 0.06

Q5_4 17 312 0.46 1.10 1.0 0.55 0.00 -2 2 4 -0.80 -0.15 0.06

Q5_5 18 312 0.45 1.06 1.0 0.52 0.00 -2 2 4 -0.78 -0.16 0.06

Q5_6 19 312 -0.15 0.92 0.0 -0.14 1.48 -2 2 4 0.03 -0.07 0.05

Q5_8 20 312 -0.16 1.07 0.0 -0.13 1.48 -2 2 4 -0.09 -0.66 0.06

Q5_12 21 312 -0.20 1.01 0.0 -0.18 1.48 -2 2 4 -0.10 -0.29 0.06

Q6_1 22 312 -1.32 0.86 -1.5 -1.48 0.74 -2 2 4 1.52 2.55 0.05

Q6_2 23 312 -0.96 0.91 -1.0 -1.07 0.00 -2 2 4 0.98 0.92 0.05

Q6_3 24 312 -1.01 0.92 -1.0 -1.13 1.48 -2 2 4 1.03 1.03 0.05

Q6_4 25 312 -0.89 0.94 -1.0 -0.99 1.48 -2 2 4 0.84 0.64 0.05

Q6_5 26 312 -0.61 1.10 -1.0 -0.69 1.48 -2 2 4 0.57 -0.45 0.06

Q6_6 27 312 -1.18 0.79 -1.0 -1.29 0.00 -2 2 4 1.08 1.68 0.04

Q6_7 28 312 -0.89 0.88 -1.0 -0.95 1.48 -2 2 4 0.61 0.19 0.05

Q6_8 29 312 -0.85 0.83 -1.0 -0.90 0.00 -2 2 4 0.70 0.77 0.05

Q6_11 30 312 -0.31 0.98 0.0 -0.30 1.48 -2 2 4 -0.11 -0.29 0.06

Q7_2 31 312 -0.35 0.89 0.0 -0.33 0.00 -2 2 4 -0.35 -0.09 0.05

Q7_4 32 312 -0.21 0.94 0.0 -0.18 1.48 -2 2 4 -0.07 -0.15 0.05

Q7_5 33 312 -0.21 0.94 0.0 -0.19 0.00 -2 2 4 -0.14 0.12 0.05

Q7_7 34 312 0.57 1.07 1.0 0.66 0.00 -2 2 4 -0.86 0.08 0.06

Q7_8 35 312 -0.19 0.94 0.0 -0.16 0.00 -2 2 4 -0.07 0.03 0.05

Q7_12 36 312 0.38 1.07 1.0 0.44 1.48 -2 2 4 -0.55 -0.17 0.06

Q7_13 37 312 0.49 1.11 1.0 0.56 1.48 -2 2 4 -0.50 -0.42 0.06

Q7_14 38 312 0.56 1.04 1.0 0.64 1.48 -2 2 4 -0.69 0.00 0.06

CFA

The hypothesized four-factor solution is shown below.

The model above can be written in lavaan syntax as follows.

mod1 <- "

EL =~ Q4_1 + Q4_2 + Q4_3 + Q4_4 + Q4_5 + Q4_8 + Q4_9 + Q4_10 + Q4_11 + Q4_15 + Q4_16 + Q4_17 + Q4_18

SC =~ Q5_1 + Q5_2 + Q5_3 + Q5_4 + Q5_5 + Q5_6 + Q5_8 + Q5_12

IN =~ Q6_1 + Q6_2 + Q6_3 + Q6_4 + Q6_5 + Q6_6 + Q6_7 + Q6_8 + Q6_11

EN =~ Q7_2 + Q7_4 + Q7_5 + Q7_7 + Q7_8 + Q7_12 + Q7_13 + Q7_14

EL ~~ EL + SC + IN + EN

SC ~~ SC + IN + EN

IN ~~ IN + EN

EN ~~ EN

"
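As a sanity check on the degrees of freedom reported for this model, the free parameters can be counted by hand. The sketch assumes lavaan's defaults as they appear to apply here: the first loading of each factor fixed to 1, no mean structure (consistent with the 82 free parameters in the output), and an independence baseline model that estimates only the 38 item variances.

```r
# Items per factor in mod1
items_per_factor <- c(EL = 13, SC = 8, IN = 9, EN = 8)
p <- sum(items_per_factor)        # observed items
k <- length(items_per_factor)     # factors

n_moments <- p * (p + 1) / 2      # unique variances and covariances

n_free <- (p - k) +               # loadings (one per factor fixed to 1)
          p +                     # residual variances
          k +                     # factor variances
          choose(k, 2)            # factor covariances

df_model    <- n_moments - n_free
df_baseline <- n_moments - p      # independence model: item variances only
c(free = n_free, df = df_model, df_baseline = df_baseline)
```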

# same four-factor model with the items relabelled y1-y38 (in use.var order)
mod1.2 <- "

EL =~ y1 + y2 + y3 + y4 + y5 + y6 + y7 + y8 + y9 + y10 + y11 + y12 + y13

SC =~ y14 + y15 + y16 + y17 + y18 + y19 + y20 + y21

IN =~ y22 + y23 + y24 + y25 + y26 + y27 + y28 + y29 + y30

EN =~ y31 + y32 + y33 + y34 + y35 + y36 + y37 + y38

EL ~~ EL + SC + IN + EN

SC ~~ SC + IN + EN

IN ~~ IN + EN

EN ~~ EN

"

Maximum Likelihood
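The global fit indices in the summary that follows can be reproduced from its chi-square statistics with the standard formulas. This is a hedged check, not lavaan's own code: applied to the ML statistics it recovers the first ("Standard") column, and applied to the Satorra-Bentler scaled statistics it recovers the second ("Robust") column. The separate "Robust CFI/TLI/RMSEA" lines use a different correction and are not reproduced here.

```r
# Values copied from the summary(fit1, ...) output
N       <- 312
npar    <- 82
loglik  <- -12758.826
dof     <- 659;       dof_b     <- 703
chisq   <- 1999.775;  chisq_b   <- 8479.132   # standard (ML) test statistics
chisq_s <- 1448.633;  chisq_b_s <- 5614.687   # Satorra-Bentler scaled statistics

rmsea <- function(chisq, dof, N) sqrt(max(chisq - dof, 0) / (dof * (N - 1)))
cfi <- function(chisq, dof, chisq_b, dof_b) {
  1 - max(chisq - dof, 0) / max(chisq_b - dof_b, chisq - dof, 0)
}
tli <- function(chisq, dof, chisq_b, dof_b) {
  (chisq_b / dof_b - chisq / dof) / (chisq_b / dof_b - 1)
}

fit_ml <- c(rmsea = rmsea(chisq, dof, N),
            cfi   = cfi(chisq, dof, chisq_b, dof_b),
            tli   = tli(chisq, dof, chisq_b, dof_b))
fit_scaled <- c(rmsea = rmsea(chisq_s, dof, N),
                cfi   = cfi(chisq_s, dof, chisq_b_s, dof_b),
                tli   = tli(chisq_s, dof, chisq_b_s, dof_b))
round(rbind(fit_ml, fit_scaled), 3)

# Information criteria use the ML log-likelihood and the number of free parameters
aic <- -2 * loglik + 2 * npar
bic <- -2 * loglik + npar * log(N)
c(aic = aic, bic = bic)

# The scaled statistic is the ML statistic divided by the scaling factor;
# the small gap to 1448.633 comes from the factor being printed rounded (1.380)
chisq / 1.380
```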

# MLM: ML estimates with robust standard errors and a Satorra-Bentler scaled test
fit1 <- lavaan::sem(mod1, data = mydata, estimator = "MLM")

summary(fit1, standardized = TRUE, fit.measures = TRUE)

lavaan 0.6-7 ended normally after 62 iterations

Estimator ML

Optimization method NLMINB

Number of free parameters 82

Number of observations 312

Model Test User Model:

Standard Robust

Test Statistic 1999.775 1448.633

Degrees of freedom 659 659

P-value (Chi-square) 0.000 0.000

Scaling correction factor 1.380

Satorra-Bentler correction

Model Test Baseline Model:

Test statistic 8479.132 5614.687

Degrees of freedom 703 703

P-value 0.000 0.000

Scaling correction factor 1.510

User Model versus Baseline Model:

Comparative Fit Index (CFI) 0.828 0.839

Tucker-Lewis Index (TLI) 0.816 0.828

Robust Comparative Fit Index (CFI) 0.853

Robust Tucker-Lewis Index (TLI) 0.843

Loglikelihood and Information Criteria:

Loglikelihood user model (H0) -12758.826 -12758.826

Loglikelihood unrestricted model (H1) -11758.939 -11758.939

Akaike (AIC) 25681.652 25681.652

Bayesian (BIC) 25988.578 25988.578

Sample-size adjusted Bayesian (BIC) 25728.502 25728.502

Root Mean Square Error of Approximation:

RMSEA 0.081 0.062

90 Percent confidence interval - lower 0.077 0.058

90 Percent confidence interval - upper 0.085 0.066

P-value RMSEA <= 0.05 0.000 0.000

Robust RMSEA 0.073

90 Percent confidence interval - lower 0.068

90 Percent confidence interval - upper 0.078

Standardized Root Mean Square Residual:

SRMR 0.080 0.080

Parameter Estimates:

Standard errors Robust.sem

Information Expected

Information saturated (h1) model Structured

Latent Variables:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

EL =~

Q4_1 1.000 0.663 0.779

Q4_2 0.898 0.058 15.367 0.000 0.595 0.761

Q4_3 1.027 0.051 20.126 0.000 0.680 0.804

Q4_4 1.036 0.054 19.336 0.000 0.686 0.810

Q4_5 0.986 0.070 14.139 0.000 0.653 0.751

Q4_8 0.927 0.065 14.179 0.000 0.614 0.720

Q4_9 0.969 0.072 13.461 0.000 0.642 0.666

Q4_10 0.928 0.059 15.844 0.000 0.615 0.769

Q4_11 1.074 0.063 17.014 0.000 0.712 0.749

Q4_15 0.958 0.061 15.583 0.000 0.635 0.719

Q4_16 0.937 0.076 12.297 0.000 0.621 0.668

Q4_17 0.837 0.081 10.341 0.000 0.555 0.597

Q4_18 0.972 0.056 17.259 0.000 0.644 0.816

SC =~

Q5_1 1.000 0.547 0.575

Q5_2 1.144 0.112 10.236 0.000 0.625 0.619

Q5_3 1.250 0.122 10.266 0.000 0.683 0.657

Q5_4 1.593 0.164 9.693 0.000 0.871 0.792

Q5_5 1.548 0.151 10.220 0.000 0.846 0.800

Q5_6 1.311 0.121 10.836 0.000 0.716 0.779

Q5_8 1.527 0.147 10.411 0.000 0.835 0.780

Q5_12 1.111 0.116 9.556 0.000 0.607 0.600

IN =~

Q6_1 1.000 0.586 0.686

Q6_2 1.218 0.090 13.533 0.000 0.714 0.786

Q6_3 1.258 0.103 12.216 0.000 0.738 0.799

Q6_4 1.237 0.106 11.622 0.000 0.725 0.776

Q6_5 0.855 0.119 7.190 0.000 0.501 0.456

Q6_6 1.051 0.103 10.186 0.000 0.616 0.784

Q6_7 1.227 0.112 10.925 0.000 0.719 0.822

Q6_8 1.165 0.106 10.988 0.000 0.683 0.820

Q6_11 1.030 0.121 8.506 0.000 0.604 0.619

EN =~

Q7_2 1.000 0.652 0.736

Q7_4 0.927 0.069 13.499 0.000 0.605 0.642

Q7_5 1.078 0.077 13.945 0.000 0.703 0.747

Q7_7 1.150 0.105 10.923 0.000 0.750 0.704

Q7_8 1.106 0.081 13.616 0.000 0.721 0.771

Q7_12 1.085 0.106 10.239 0.000 0.708 0.665

Q7_13 0.637 0.125 5.075 0.000 0.415 0.376

Q7_14 1.020 0.108 9.463 0.000 0.666 0.639

Covariances:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

EL ~~

SC 0.205 0.040 5.123 0.000 0.566 0.566

IN 0.268 0.043 6.216 0.000 0.689 0.689

EN 0.306 0.045 6.788 0.000 0.707 0.707

SC ~~

IN 0.169 0.034 5.002 0.000 0.528 0.528

EN 0.275 0.043 6.357 0.000 0.771 0.771

IN ~~

EN 0.257 0.042 6.112 0.000 0.671 0.671

Variances:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

EL 0.439 0.060 7.309 0.000 1.000 1.000

SC 0.299 0.057 5.252 0.000 1.000 1.000

IN 0.344 0.066 5.207 0.000 1.000 1.000

EN 0.426 0.062 6.838 0.000 1.000 1.000

.Q4_1 0.284 0.032 8.766 0.000 0.284 0.393

.Q4_2 0.258 0.024 10.671 0.000 0.258 0.421

.Q4_3 0.254 0.028 8.991 0.000 0.254 0.354

.Q4_4 0.246 0.023 10.568 0.000 0.246 0.343

.Q4_5 0.330 0.042 7.928 0.000 0.330 0.436

.Q4_8 0.351 0.049 7.182 0.000 0.351 0.482

.Q4_9 0.519 0.047 11.144 0.000 0.519 0.557

.Q4_10 0.261 0.025 10.523 0.000 0.261 0.409

.Q4_11 0.397 0.039 10.268 0.000 0.397 0.439

.Q4_15 0.376 0.036 10.382 0.000 0.376 0.482

.Q4_16 0.478 0.041 11.732 0.000 0.478 0.553

.Q4_17 0.555 0.051 10.956 0.000 0.555 0.643

.Q4_18 0.208 0.021 9.942 0.000 0.208 0.334

.Q5_1 0.604 0.049 12.256 0.000 0.604 0.669

.Q5_2 0.630 0.058 10.789 0.000 0.630 0.617

.Q5_3 0.614 0.054 11.337 0.000 0.614 0.568

.Q5_4 0.451 0.047 9.586 0.000 0.451 0.373

.Q5_5 0.403 0.052 7.747 0.000 0.403 0.360

.Q5_6 0.333 0.035 9.624 0.000 0.333 0.393

.Q5_8 0.448 0.061 7.364 0.000 0.448 0.392

.Q5_12 0.654 0.067 9.699 0.000 0.654 0.640

.Q6_1 0.387 0.064 6.062 0.000 0.387 0.529

.Q6_2 0.315 0.040 7.808 0.000 0.315 0.382

.Q6_3 0.308 0.040 7.624 0.000 0.308 0.362

.Q6_4 0.347 0.047 7.445 0.000 0.347 0.397

.Q6_5 0.955 0.086 11.116 0.000 0.955 0.792

.Q6_6 0.238 0.027 8.758 0.000 0.238 0.385

.Q6_7 0.249 0.027 9.142 0.000 0.249 0.325

.Q6_8 0.228 0.029 7.853 0.000 0.228 0.328

.Q6_11 0.587 0.053 11.066 0.000 0.587 0.617

.Q7_2 0.360 0.039 9.197 0.000 0.360 0.459

.Q7_4 0.521 0.051 10.141 0.000 0.521 0.587

.Q7_5 0.392 0.047 8.255 0.000 0.392 0.442

.Q7_7 0.574 0.054 10.600 0.000 0.574 0.505

.Q7_8 0.355 0.043 8.219 0.000 0.355 0.406

.Q7_12 0.633 0.067 9.385 0.000 0.633 0.558

.Q7_13 1.045 0.082 12.716 0.000 1.045 0.858

.Q7_14 0.643 0.060 10.756 0.000 0.643 0.592

Local Fit Assessment

# Residual Analysis

out <- residuals(fit1, type="cor.bollen")

# out[[2]] is the matrix of correlation residuals
kable(out[[2]], format = "html", digits = 3) %>%
  kable_styling(full_width = TRUE) %>%
  scroll_box(width = "100%", height = "800px")

| | Q4_1 | Q4_2 | Q4_3 | Q4_4 | Q4_5 | Q4_8 | Q4_9 | Q4_10 | Q4_11 | Q4_15 | Q4_16 | Q4_17 | Q4_18 | Q5_1 | Q5_2 | Q5_3 | Q5_4 | Q5_5 | Q5_6 | Q5_8 | Q5_12 | Q6_1 | Q6_2 | Q6_3 | Q6_4 | Q6_5 | Q6_6 | Q6_7 | Q6_8 | Q6_11 | Q7_2 | Q7_4 | Q7_5 | Q7_7 | Q7_8 | Q7_12 | Q7_13 | Q7_14 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Q4_1 | 0.000 | 0.066 | 0.094 | 0.041 | -0.075 | -0.089 | -0.069 | 0.030 | 0.000 | -0.035 | 0.000 | -0.033 | -0.004 | 0.149 | 0.007 | 0.049 | -0.066 | -0.042 | 0.029 | -0.049 | 0.076 | 0.011 | -0.034 | -0.067 | -0.082 | 0.045 | -0.019 | -0.012 | -0.044 | 0.154 | -0.014 | 0.069 | -0.057 | -0.054 | 0.007 | -0.066 | 0.050 | -0.047 |

| Q4_2 | 0.066 | 0.000 | 0.064 | 0.006 | 0.013 | 0.013 | -0.074 | -0.052 | -0.023 | -0.013 | -0.069 | -0.061 | 0.060 | 0.149 | -0.045 | 0.020 | -0.162 | -0.153 | -0.005 | -0.104 | 0.025 | -0.022 | -0.023 | -0.062 | -0.064 | 0.041 | 0.001 | 0.005 | -0.014 | 0.108 | -0.056 | -0.001 | -0.023 | -0.131 | -0.045 | -0.081 | 0.009 | -0.077 |

| Q4_3 | 0.094 | 0.064 | 0.000 | 0.083 | -0.050 | -0.064 | -0.088 | 0.000 | -0.033 | -0.024 | 0.016 | -0.076 | 0.002 | 0.184 | -0.038 | 0.040 | -0.134 | -0.104 | -0.034 | -0.069 | 0.088 | -0.010 | -0.063 | -0.086 | -0.061 | 0.021 | 0.021 | -0.033 | -0.028 | 0.166 | -0.003 | 0.026 | -0.090 | -0.078 | -0.042 | -0.005 | 0.045 | -0.023 |

| Q4_4 | 0.041 | 0.006 | 0.083 | 0.000 | -0.007 | -0.005 | -0.049 | 0.038 | 0.011 | -0.067 | -0.065 | -0.060 | -0.021 | 0.100 | -0.041 | 0.023 | -0.097 | -0.054 | 0.054 | -0.011 | 0.085 | -0.100 | -0.041 | -0.073 | -0.053 | 0.016 | 0.020 | -0.029 | -0.055 | 0.210 | 0.079 | 0.104 | 0.008 | -0.029 | 0.055 | -0.050 | -0.015 | -0.019 |

| Q4_5 | -0.075 | 0.013 | -0.050 | -0.007 | 0.000 | 0.123 | 0.041 | 0.005 | 0.024 | 0.008 | -0.048 | 0.038 | -0.002 | 0.149 | 0.004 | 0.112 | -0.106 | -0.099 | 0.068 | 0.055 | 0.099 | -0.029 | 0.017 | -0.044 | -0.080 | 0.032 | 0.017 | 0.052 | -0.030 | 0.148 | 0.059 | -0.010 | -0.019 | -0.023 | 0.037 | -0.122 | -0.153 | -0.036 |

| Q4_8 | -0.089 | 0.013 | -0.064 | -0.005 | 0.123 | 0.000 | 0.102 | -0.010 | -0.012 | 0.031 | -0.021 | -0.012 | -0.016 | 0.151 | -0.001 | 0.085 | -0.099 | -0.046 | 0.049 | 0.048 | 0.114 | -0.013 | -0.037 | -0.029 | 0.008 | 0.069 | 0.068 | 0.084 | 0.031 | 0.101 | 0.068 | 0.006 | 0.041 | -0.086 | 0.093 | -0.063 | -0.054 | -0.042 |

| Q4_9 | -0.069 | -0.074 | -0.088 | -0.049 | 0.041 | 0.102 | 0.000 | 0.013 | 0.062 | 0.059 | -0.048 | 0.022 | 0.030 | 0.208 | 0.077 | 0.098 | -0.042 | -0.014 | 0.052 | 0.015 | 0.208 | 0.033 | 0.010 | -0.026 | -0.009 | 0.096 | -0.003 | 0.075 | -0.033 | 0.136 | 0.191 | 0.041 | 0.056 | -0.019 | 0.091 | 0.118 | 0.056 | 0.006 |

| Q4_10 | 0.030 | -0.052 | 0.000 | 0.038 | 0.005 | -0.010 | 0.013 | 0.000 | -0.009 | -0.025 | 0.028 | -0.029 | -0.036 | 0.109 | 0.037 | 0.018 | -0.047 | -0.023 | 0.034 | 0.011 | 0.203 | -0.020 | -0.013 | -0.041 | -0.070 | 0.024 | 0.028 | 0.069 | -0.025 | 0.233 | 0.096 | 0.061 | 0.025 | 0.024 | 0.063 | 0.017 | 0.001 | 0.085 |

| Q4_11 | 0.000 | -0.023 | -0.033 | 0.011 | 0.024 | -0.012 | 0.062 | -0.009 | 0.000 | -0.057 | -0.017 | 0.038 | -0.034 | 0.241 | 0.049 | 0.097 | -0.109 | -0.059 | 0.035 | 0.002 | 0.180 | 0.032 | -0.011 | -0.025 | -0.080 | 0.132 | 0.064 | 0.120 | 0.079 | 0.193 | 0.145 | 0.086 | 0.181 | 0.081 | 0.139 | 0.064 | 0.031 | -0.007 |

| Q4_15 | -0.035 | -0.013 | -0.024 | -0.067 | 0.008 | 0.031 | 0.059 | -0.025 | -0.057 | 0.000 | 0.154 | 0.044 | 0.042 | 0.189 | 0.071 | 0.129 | -0.045 | -0.018 | 0.022 | 0.017 | 0.219 | 0.036 | -0.010 | -0.032 | 0.008 | 0.085 | -0.020 | 0.068 | -0.023 | 0.153 | -0.036 | -0.050 | -0.049 | -0.096 | -0.054 | -0.004 | -0.025 | 0.053 |

| Q4_16 | 0.000 | -0.069 | 0.016 | -0.065 | -0.048 | -0.021 | -0.048 | 0.028 | -0.017 | 0.154 | 0.000 | 0.110 | 0.016 | 0.158 | 0.064 | 0.118 | -0.013 | 0.011 | 0.049 | 0.035 | 0.259 | 0.077 | -0.038 | -0.070 | -0.012 | 0.089 | -0.014 | 0.033 | -0.026 | 0.176 | -0.010 | 0.027 | -0.029 | -0.032 | 0.039 | 0.005 | 0.006 | -0.012 |

| Q4_17 | -0.033 | -0.061 | -0.076 | -0.060 | 0.038 | -0.012 | 0.022 | -0.029 | 0.038 | 0.044 | 0.110 | 0.000 | 0.067 | 0.014 | -0.003 | -0.025 | -0.056 | -0.073 | -0.032 | -0.039 | 0.131 | -0.001 | -0.021 | 0.004 | -0.003 | 0.055 | 0.092 | 0.098 | 0.020 | 0.128 | 0.085 | 0.009 | 0.031 | -0.016 | 0.027 | -0.052 | -0.046 | -0.051 |

| Q4_18 | -0.004 | 0.060 | 0.002 | -0.021 | -0.002 | -0.016 | 0.030 | -0.036 | -0.034 | 0.042 | 0.016 | 0.067 | 0.000 | 0.131 | 0.030 | 0.017 | -0.156 | -0.111 | 0.011 | -0.058 | 0.140 | -0.036 | -0.048 | -0.082 | -0.090 | 0.074 | 0.002 | 0.069 | -0.004 | 0.113 | 0.012 | 0.020 | -0.032 | -0.165 | -0.067 | -0.085 | -0.071 | -0.089 |

| Q5_1 | 0.149 | 0.149 | 0.184 | 0.100 | 0.149 | 0.151 | 0.208 | 0.109 | 0.241 | 0.189 | 0.158 | 0.014 | 0.131 | 0.000 | 0.164 | 0.095 | -0.073 | -0.091 | -0.046 | -0.043 | 0.029 | 0.102 | 0.105 | 0.104 | 0.129 | 0.073 | 0.144 | 0.146 | 0.143 | 0.258 | 0.111 | 0.081 | 0.150 | 0.065 | 0.057 | 0.112 | 0.041 | 0.054 |

| Q5_2 | 0.007 | -0.045 | -0.038 | -0.041 | 0.004 | -0.001 | 0.077 | 0.037 | 0.049 | 0.071 | 0.064 | -0.003 | 0.030 | 0.164 | 0.000 | 0.136 | -0.005 | -0.012 | -0.051 | -0.077 | -0.012 | -0.010 | 0.049 | 0.007 | 0.104 | 0.042 | 0.085 | 0.019 | 0.048 | 0.207 | 0.084 | -0.016 | -0.025 | -0.037 | -0.115 | 0.041 | 0.000 | 0.092 |

| Q5_3 | 0.049 | 0.020 | 0.040 | 0.023 | 0.112 | 0.085 | 0.098 | 0.018 | 0.097 | 0.129 | 0.118 | -0.025 | 0.017 | 0.095 | 0.136 | 0.000 | -0.032 | -0.053 | 0.017 | -0.008 | -0.037 | 0.077 | 0.052 | -0.030 | 0.080 | 0.124 | -0.014 | 0.029 | -0.009 | 0.147 | 0.011 | -0.041 | 0.014 | -0.035 | -0.053 | -0.049 | -0.088 | 0.020 |

| Q5_4 | -0.066 | -0.162 | -0.134 | -0.097 | -0.106 | -0.099 | -0.042 | -0.047 | -0.109 | -0.045 | -0.013 | -0.056 | -0.156 | -0.073 | -0.005 | -0.032 | 0.000 | 0.164 | -0.041 | 0.002 | -0.083 | -0.141 | -0.106 | -0.136 | -0.043 | 0.053 | -0.090 | -0.054 | -0.140 | 0.155 | -0.060 | -0.040 | -0.079 | 0.038 | -0.120 | 0.012 | -0.012 | 0.094 |

| Q5_5 | -0.042 | -0.153 | -0.104 | -0.054 | -0.099 | -0.046 | -0.014 | -0.023 | -0.059 | -0.018 | 0.011 | -0.073 | -0.111 | -0.091 | -0.012 | -0.053 | 0.164 | 0.000 | -0.009 | -0.015 | -0.080 | -0.116 | -0.116 | -0.148 | -0.050 | 0.005 | -0.105 | -0.094 | -0.094 | 0.145 | -0.007 | -0.057 | -0.070 | -0.010 | -0.109 | 0.041 | 0.022 | 0.126 |

| Q5_6 | 0.029 | -0.005 | -0.034 | 0.054 | 0.068 | 0.049 | 0.052 | 0.034 | 0.035 | 0.022 | 0.049 | -0.032 | 0.011 | -0.046 | -0.051 | 0.017 | -0.041 | -0.009 | 0.000 | 0.078 | 0.007 | -0.066 | -0.020 | -0.051 | 0.009 | 0.080 | 0.039 | 0.017 | -0.023 | 0.225 | 0.004 | 0.044 | 0.010 | -0.012 | 0.052 | -0.072 | -0.111 | 0.016 |

| Q5_8 | -0.049 | -0.104 | -0.069 | -0.011 | 0.055 | 0.048 | 0.015 | 0.011 | 0.002 | 0.017 | 0.035 | -0.039 | -0.058 | -0.043 | -0.077 | -0.008 | 0.002 | -0.015 | 0.078 | 0.000 | 0.048 | -0.064 | -0.030 | -0.113 | 0.024 | 0.033 | 0.005 | 0.026 | -0.034 | 0.189 | -0.021 | -0.066 | -0.024 | -0.019 | 0.020 | -0.074 | -0.123 | 0.034 |

| Q5_12 | 0.076 | 0.025 | 0.088 | 0.085 | 0.099 | 0.114 | 0.208 | 0.203 | 0.180 | 0.219 | 0.259 | 0.131 | 0.140 | 0.029 | -0.012 | -0.037 | -0.083 | -0.080 | 0.007 | 0.048 | 0.000 | 0.070 | 0.127 | 0.068 | 0.102 | 0.184 | 0.170 | 0.286 | 0.152 | 0.298 | 0.123 | 0.113 | 0.122 | 0.093 | 0.144 | 0.089 | 0.059 | 0.128 |

| Q6_1 | 0.011 | -0.022 | -0.010 | -0.100 | -0.029 | -0.013 | 0.033 | -0.020 | 0.032 | 0.036 | 0.077 | -0.001 | -0.036 | 0.102 | -0.010 | 0.077 | -0.141 | -0.116 | -0.066 | -0.064 | 0.070 | 0.000 | 0.132 | 0.071 | 0.037 | -0.005 | -0.033 | -0.040 | -0.038 | -0.125 | -0.022 | -0.082 | -0.103 | -0.216 | -0.129 | -0.076 | 0.066 | -0.150 |

| Q6_2 | -0.034 | -0.023 | -0.063 | -0.041 | 0.017 | -0.037 | 0.010 | -0.013 | -0.011 | -0.010 | -0.038 | -0.021 | -0.048 | 0.105 | 0.049 | 0.052 | -0.106 | -0.116 | -0.020 | -0.030 | 0.127 | 0.132 | 0.000 | 0.053 | 0.117 | -0.052 | -0.066 | -0.084 | -0.001 | -0.051 | -0.006 | 0.062 | -0.030 | -0.126 | -0.110 | -0.090 | -0.048 | -0.103 |

| Q6_3 | -0.067 | -0.062 | -0.086 | -0.073 | -0.044 | -0.029 | -0.026 | -0.041 | -0.025 | -0.032 | -0.070 | 0.004 | -0.082 | 0.104 | 0.007 | -0.030 | -0.136 | -0.148 | -0.051 | -0.113 | 0.068 | 0.071 | 0.053 | 0.000 | 0.031 | -0.030 | -0.009 | 0.011 | -0.029 | -0.052 | -0.006 | 0.001 | -0.037 | -0.139 | -0.073 | -0.106 | -0.057 | -0.082 |

| Q6_4 | -0.082 | -0.064 | -0.061 | -0.053 | -0.080 | 0.008 | -0.009 | -0.070 | -0.080 | 0.008 | -0.012 | -0.003 | -0.090 | 0.129 | 0.104 | 0.080 | -0.043 | -0.050 | 0.009 | 0.024 | 0.102 | 0.037 | 0.117 | 0.031 | 0.000 | -0.127 | -0.044 | -0.069 | 0.042 | -0.039 | 0.035 | 0.016 | -0.020 | -0.100 | -0.089 | -0.021 | 0.016 | -0.046 |

| Q6_5 | 0.045 | 0.041 | 0.021 | 0.016 | 0.032 | 0.069 | 0.096 | 0.024 | 0.132 | 0.085 | 0.089 | 0.055 | 0.074 | 0.073 | 0.042 | 0.124 | 0.053 | 0.005 | 0.080 | 0.033 | 0.184 | -0.005 | -0.052 | -0.030 | -0.127 | 0.000 | 0.044 | 0.086 | -0.023 | 0.070 | 0.009 | 0.030 | 0.042 | 0.017 | 0.072 | 0.042 | 0.072 | 0.019 |

| Q6_6 | -0.019 | 0.001 | 0.021 | 0.020 | 0.017 | 0.068 | -0.003 | 0.028 | 0.064 | -0.020 | -0.014 | 0.092 | 0.002 | 0.144 | 0.085 | -0.014 | -0.090 | -0.105 | 0.039 | 0.005 | 0.170 | -0.033 | -0.066 | -0.009 | -0.044 | 0.044 | 0.000 | 0.071 | 0.003 | 0.035 | 0.142 | 0.051 | 0.096 | -0.086 | 0.015 | -0.059 | -0.016 | -0.031 |

| Q6_7 | -0.012 | 0.005 | -0.033 | -0.029 | 0.052 | 0.084 | 0.075 | 0.069 | 0.120 | 0.068 | 0.033 | 0.098 | 0.069 | 0.146 | 0.019 | 0.029 | -0.054 | -0.094 | 0.017 | 0.026 | 0.286 | -0.040 | -0.084 | 0.011 | -0.069 | 0.086 | 0.071 | 0.000 | 0.024 | 0.002 | 0.104 | 0.060 | 0.123 | -0.071 | 0.043 | -0.010 | 0.014 | -0.024 |

| Q6_8 | -0.044 | -0.014 | -0.028 | -0.055 | -0.030 | 0.031 | -0.033 | -0.025 | 0.079 | -0.023 | -0.026 | 0.020 | -0.004 | 0.143 | 0.048 | -0.009 | -0.140 | -0.094 | -0.023 | -0.034 | 0.152 | -0.038 | -0.001 | -0.029 | 0.042 | -0.023 | 0.003 | 0.024 | 0.000 | -0.023 | 0.107 | 0.134 | 0.122 | -0.095 | 0.012 | -0.020 | 0.003 | -0.070 |

| Q6_11 | 0.154 | 0.108 | 0.166 | 0.210 | 0.148 | 0.101 | 0.136 | 0.233 | 0.193 | 0.153 | 0.176 | 0.128 | 0.113 | 0.258 | 0.207 | 0.147 | 0.155 | 0.145 | 0.225 | 0.189 | 0.298 | -0.125 | -0.051 | -0.052 | -0.039 | 0.070 | 0.035 | 0.002 | -0.023 | 0.000 | 0.250 | 0.243 | 0.189 | 0.217 | 0.217 | 0.199 | 0.076 | 0.241 |

| Q7_2 | -0.014 | -0.056 | -0.003 | 0.079 | 0.059 | 0.068 | 0.191 | 0.096 | 0.145 | -0.036 | -0.010 | 0.085 | 0.012 | 0.111 | 0.084 | 0.011 | -0.060 | -0.007 | 0.004 | -0.021 | 0.123 | -0.022 | -0.006 | -0.006 | 0.035 | 0.009 | 0.142 | 0.104 | 0.107 | 0.250 | 0.000 | 0.066 | 0.024 | -0.038 | 0.007 | -0.075 | -0.070 | -0.082 |

| Q7_4 | 0.069 | -0.001 | 0.026 | 0.104 | -0.010 | 0.006 | 0.041 | 0.061 | 0.086 | -0.050 | 0.027 | 0.009 | 0.020 | 0.081 | -0.016 | -0.041 | -0.040 | -0.057 | 0.044 | -0.066 | 0.113 | -0.082 | 0.062 | 0.001 | 0.016 | 0.030 | 0.051 | 0.060 | 0.134 | 0.243 | 0.066 | 0.000 | 0.083 | -0.021 | -0.006 | -0.105 | -0.146 | -0.082 |

| Q7_5 | -0.057 | -0.023 | -0.090 | 0.008 | -0.019 | 0.041 | 0.056 | 0.025 | 0.181 | -0.049 | -0.029 | 0.031 | -0.032 | 0.150 | -0.025 | 0.014 | -0.079 | -0.070 | 0.010 | -0.024 | 0.122 | -0.103 | -0.030 | -0.037 | -0.020 | 0.042 | 0.096 | 0.123 | 0.122 | 0.189 | 0.024 | 0.083 | 0.000 | -0.044 | 0.041 | -0.054 | -0.074 | -0.064 |

| Q7_7 | -0.054 | -0.131 | -0.078 | -0.029 | -0.023 | -0.086 | -0.019 | 0.024 | 0.081 | -0.096 | -0.032 | -0.016 | -0.165 | 0.065 | -0.037 | -0.035 | 0.038 | -0.010 | -0.012 | -0.019 | 0.093 | -0.216 | -0.126 | -0.139 | -0.100 | 0.017 | -0.086 | -0.071 | -0.095 | 0.217 | -0.038 | -0.021 | -0.044 | 0.000 | 0.065 | 0.090 | 0.072 | 0.032 |

| Q7_8 | 0.007 | -0.045 | -0.042 | 0.055 | 0.037 | 0.093 | 0.091 | 0.063 | 0.139 | -0.054 | 0.039 | 0.027 | -0.067 | 0.057 | -0.115 | -0.053 | -0.120 | -0.109 | 0.052 | 0.020 | 0.144 | -0.129 | -0.110 | -0.073 | -0.089 | 0.072 | 0.015 | 0.043 | 0.012 | 0.217 | 0.007 | -0.006 | 0.041 | 0.065 | 0.000 | -0.039 | -0.052 | -0.044 |

| Q7_12 | -0.066 | -0.081 | -0.005 | -0.050 | -0.122 | -0.063 | 0.118 | 0.017 | 0.064 | -0.004 | 0.005 | -0.052 | -0.085 | 0.112 | 0.041 | -0.049 | 0.012 | 0.041 | -0.072 | -0.074 | 0.089 | -0.076 | -0.090 | -0.106 | -0.021 | 0.042 | -0.059 | -0.010 | -0.020 | 0.199 | -0.075 | -0.105 | -0.054 | 0.090 | -0.039 | 0.000 | 0.285 | 0.203 |

| Q7_13 | 0.050 | 0.009 | 0.045 | -0.015 | -0.153 | -0.054 | 0.056 | 0.001 | 0.031 | -0.025 | 0.006 | -0.046 | -0.071 | 0.041 | 0.000 | -0.088 | -0.012 | 0.022 | -0.111 | -0.123 | 0.059 | 0.066 | -0.048 | -0.057 | 0.016 | 0.072 | -0.016 | 0.014 | 0.003 | 0.076 | -0.070 | -0.146 | -0.074 | 0.072 | -0.052 | 0.285 | 0.000 | 0.132 |

| Q7_14 | -0.047 | -0.077 | -0.023 | -0.019 | -0.036 | -0.042 | 0.006 | 0.085 | -0.007 | 0.053 | -0.012 | -0.051 | -0.089 | 0.054 | 0.092 | 0.020 | 0.094 | 0.126 | 0.016 | 0.034 | 0.128 | -0.150 | -0.103 | -0.082 | -0.046 | 0.019 | -0.031 | -0.024 | -0.070 | 0.241 | -0.082 | -0.082 | -0.064 | 0.032 | -0.044 | 0.203 | 0.132 | 0.000 |
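The entries in this table are correlation residuals. With `type = "cor.bollen"`, lavaan rescales both the observed and the model-implied covariance matrices to correlation matrices (with `cov2cor()`) before subtracting. The sketch below rebuilds the model-implied part for the Q4_1/Q4_2 pair from the unstandardized estimates in the summary, using Sigma = Lambda Phi Lambda' + Theta; the helper names `implied_cov()` and `cor_residuals()` are illustrative, not lavaan functions. The implied correlation comes out near 0.593, so the tabled residual of 0.066 corresponds to an observed correlation of roughly 0.66.

```r
# Model-implied covariance matrix of a CFA: Sigma = Lambda Phi Lambda' + Theta
implied_cov <- function(Lambda, Phi, Theta) Lambda %*% Phi %*% t(Lambda) + Theta

# Correlation residuals in the "cor.bollen" sense: convert both matrices to
# correlations first, then take observed minus implied
cor_residuals <- function(S, Sigma) cov2cor(S) - cov2cor(Sigma)

# Two EL items, using unstandardized estimates copied from the summary
items  <- c("Q4_1", "Q4_2")
Lambda <- matrix(c(1.000, 0.898), ncol = 1, dimnames = list(items, "EL"))
Phi    <- matrix(0.439, dimnames = list("EL", "EL"))   # var(EL)
Theta  <- diag(c(0.284, 0.258))                       # residual variances
dimnames(Theta) <- list(items, items)

Sigma <- implied_cov(Lambda, Phi, Theta)
implied_cor <- cov2cor(Sigma)
implied_cor["Q4_1", "Q4_2"]   # close to the product of the Std.all loadings, 0.779 * 0.761

# Given the observed covariance matrix S of the same items, cor_residuals(S, Sigma)
# reproduces the corresponding table entries; a perfectly reproduced S gives zeros
cor_residuals(Sigma, Sigma)
```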

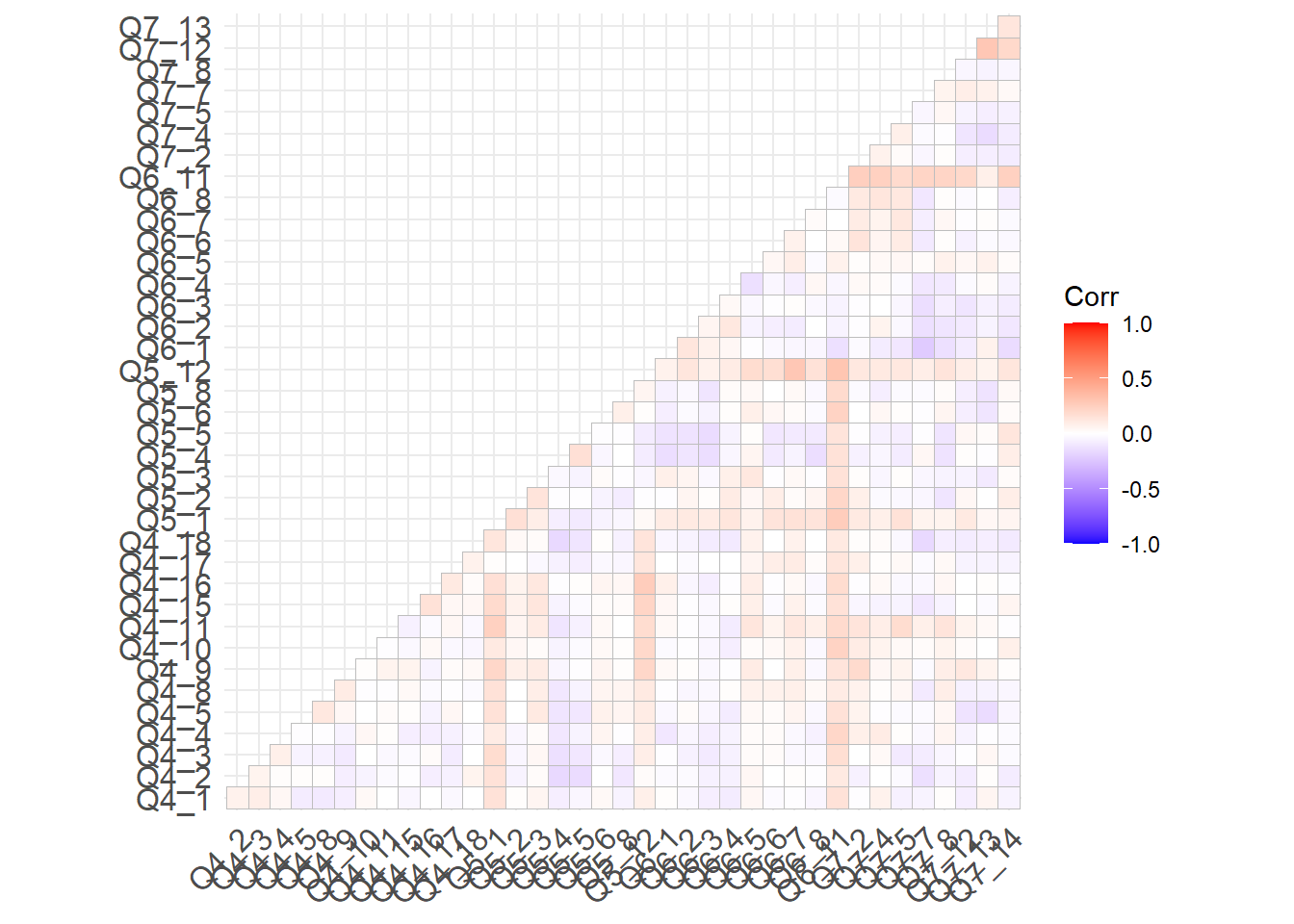

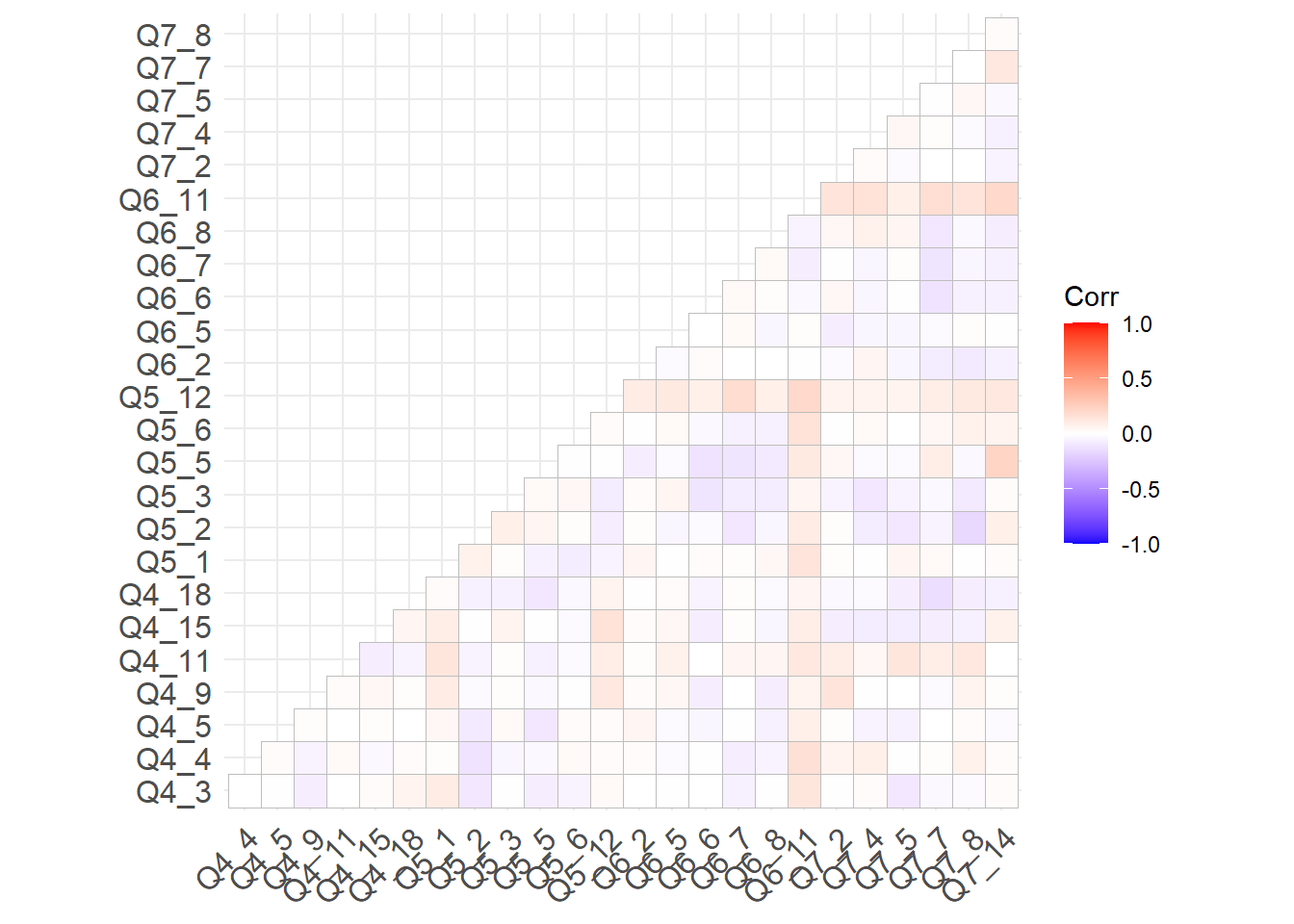

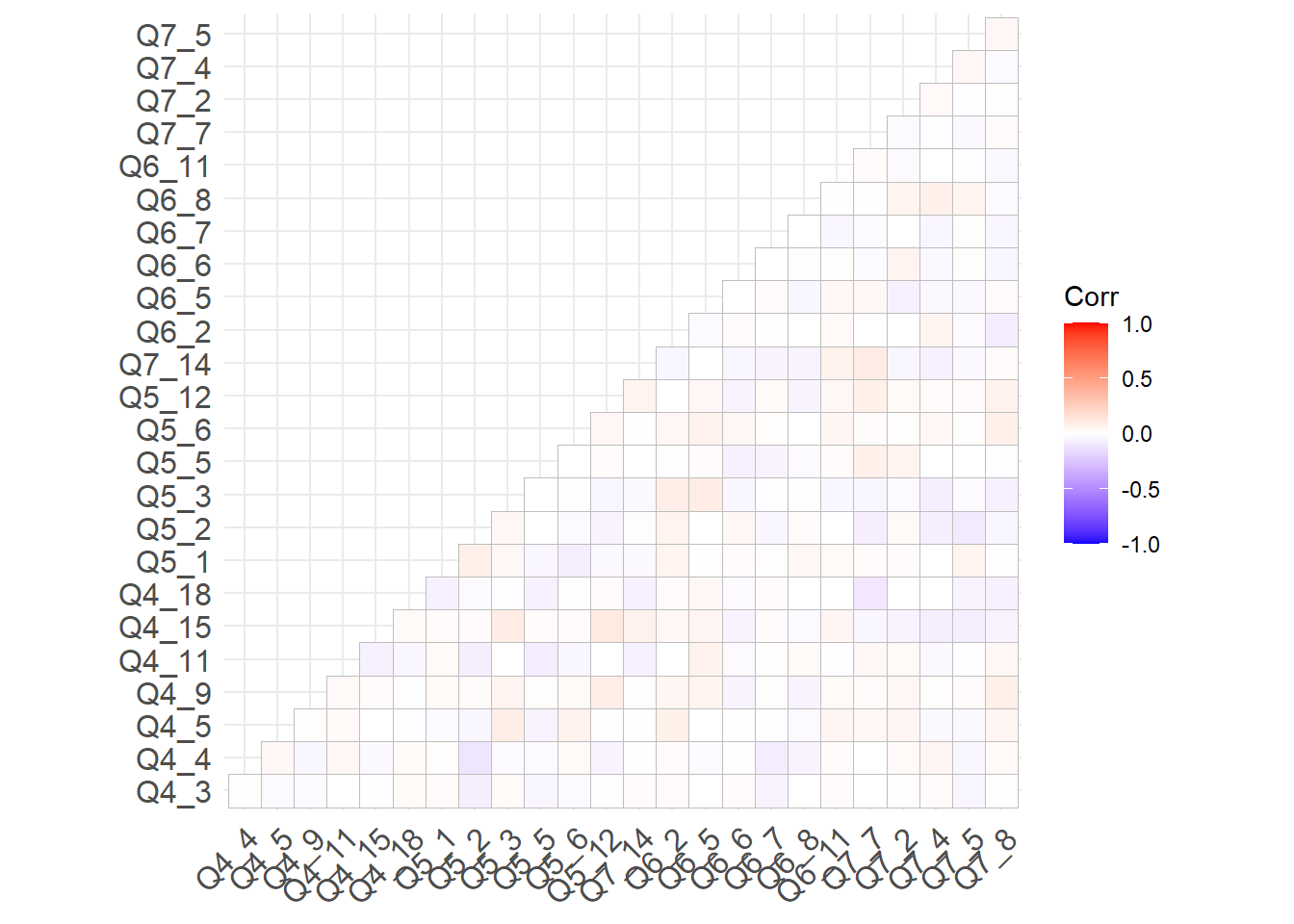

ggcorrplot(out[[2]], type = "lower")
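The modification indices that follow report each expected parameter change (epc) on three scales, and the scaling matches the Std.lv and Std.all columns of the summary: sepc.lv multiplies by the latent SD, sepc.all additionally divides by the model-implied SD of the item, and a residual covariance is divided by the product of the two residual SDs. sepc.nox equals sepc.all here because the model has no observed exogenous covariates. Each MI approximates the drop in chi-square from freeing one parameter, so the `minimum.value = 10` cut-off corresponds to a 1-df p-value of roughly .0016. The small check below uses estimates copied from the output; the helper names are illustrative only.

```r
# Standardize a loading (or the EPC of a loading) given the factor variance
# and the model-implied variance of the item
std_lv  <- function(est, var_f) est * sqrt(var_f)
std_all <- function(est, var_f, var_y) std_lv(est, var_f) / sqrt(var_y)

# Std.all for Q4_1: marker loading 1.000, var(EL) = 0.439, residual 0.284
var_q4_1 <- 1.000^2 * 0.439 + 0.284          # model-implied item variance
std_all(1.000, 0.439, var_q4_1)              # summary reports 0.779

# EPC for EN =~ Q6_11: epc = 0.979, var(EN) = 0.426;
# Q6_11 currently loads on IN (1.030, var(IN) = 0.344, residual 0.587)
var_q6_11 <- 1.030^2 * 0.344 + 0.587
std_lv(0.979, 0.426)                         # sepc.lv reported as 0.639
std_all(0.979, 0.426, var_q6_11)             # sepc.all reported as 0.655

# EPC for the residual covariance Q5_4 ~~ Q5_5 (residual variances 0.451, 0.403)
0.320 / sqrt(0.451 * 0.403)                  # sepc.all reported as 0.750

# 1-df chi-square tail probability at the minimum.value = 10 cut-off
pchisq(10, df = 1, lower.tail = FALSE)
```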

# modification indices

modindices(fit1, minimum.value = 10, sort = TRUE)

lhs op rhs mi epc sepc.lv sepc.all sepc.nox

673 Q5_4 ~~ Q5_5 104.4 0.320 0.320 0.750 0.750

200 EN =~ Q6_11 87.8 0.979 0.639 0.655 0.655

901 Q7_12 ~~ Q7_13 59.2 0.374 0.374 0.460 0.460

133 SC =~ Q6_11 54.3 0.768 0.420 0.431 0.431

103 EL =~ Q6_11 47.5 0.698 0.462 0.474 0.474

902 Q7_12 ~~ Q7_14 47.0 0.272 0.272 0.426 0.426

785 Q6_2 ~~ Q6_4 38.0 0.135 0.135 0.407 0.407

191 EN =~ Q5_12 37.3 0.848 0.553 0.547 0.547

768 Q6_1 ~~ Q6_2 33.6 0.128 0.128 0.368 0.368

162 IN =~ Q5_12 31.4 0.574 0.336 0.333 0.333

498 Q4_15 ~~ Q4_16 31.1 0.142 0.142 0.334 0.334

87 EL =~ Q5_1 29.3 0.480 0.318 0.334 0.334

343 Q4_5 ~~ Q4_8 26.2 0.106 0.106 0.312 0.312

788 Q6_2 ~~ Q6_7 25.8 -0.097 -0.097 -0.348 -0.348

94 EL =~ Q5_12 25.3 0.466 0.309 0.306 0.306

179 EN =~ Q4_11 24.7 0.458 0.298 0.314 0.314

202 Q4_1 ~~ Q4_3 24.7 0.085 0.085 0.315 0.315

90 EL =~ Q5_4 24.2 -0.409 -0.271 -0.247 -0.247
184 EN =~ Q5_1 23.6 0.645 0.421 0.443 0.443
604 Q5_1 ~~ Q5_2 23.1 0.179 0.179 0.290 0.290
274 Q4_3 ~~ Q4_4 23.0 0.077 0.077 0.309 0.309
757 Q5_12 ~~ Q6_7 22.7 0.121 0.121 0.299 0.299
155 IN =~ Q5_1 21.8 0.457 0.268 0.282 0.282
714 Q5_6 ~~ Q5_8 19.4 0.120 0.120 0.311 0.311
628 Q5_2 ~~ Q5_3 19.2 0.167 0.167 0.268 0.268
492 Q4_11 ~~ Q7_5 19.0 0.107 0.107 0.272 0.272
813 Q6_4 ~~ Q6_5 18.6 -0.151 -0.151 -0.263 -0.263
192 EN =~ Q6_1 18.5 -0.370 -0.241 -0.282 -0.282
838 Q6_6 ~~ Q6_7 18.2 0.071 0.071 0.291 0.291
159 IN =~ Q5_5 17.6 -0.367 -0.215 -0.203 -0.203
374 Q4_5 ~~ Q7_13 17.0 -0.143 -0.143 -0.244 -0.244
775 Q6_1 ~~ Q6_11 17.0 -0.118 -0.118 -0.248 -0.248
166 IN =~ Q7_7 16.9 -0.492 -0.288 -0.271 -0.271
815 Q6_4 ~~ Q6_7 16.5 -0.081 -0.081 -0.276 -0.276
158 IN =~ Q5_4 15.8 -0.365 -0.214 -0.195 -0.195
205 Q4_1 ~~ Q4_8 15.3 -0.076 -0.076 -0.241 -0.241
194 EN =~ Q6_3 15.1 -0.312 -0.204 -0.220 -0.220
713 Q5_5 ~~ Q7_14 14.8 0.125 0.125 0.246 0.246
647 Q5_2 ~~ Q7_8 14.6 -0.114 -0.114 -0.240 -0.240
277 Q4_3 ~~ Q4_9 14.6 -0.085 -0.085 -0.235 -0.235
887 Q7_4 ~~ Q7_13 14.5 -0.167 -0.167 -0.227 -0.227
607 Q5_1 ~~ Q5_5 14.0 -0.120 -0.120 -0.244 -0.244
376 Q4_8 ~~ Q4_9 13.4 0.094 0.094 0.220 0.220
729 Q5_6 ~~ Q7_8 13.1 0.082 0.082 0.239 0.239
431 Q4_9 ~~ Q7_2 13.0 0.096 0.096 0.221 0.221
886 Q7_4 ~~ Q7_12 12.8 -0.128 -0.128 -0.222 -0.222
91 EL =~ Q5_5 12.7 -0.283 -0.187 -0.177 -0.177
787 Q6_2 ~~ Q6_6 12.6 -0.064 -0.064 -0.235 -0.235
484 Q4_11 ~~ Q6_4 12.4 -0.081 -0.081 -0.218 -0.218
204 Q4_1 ~~ Q4_5 12.4 -0.067 -0.067 -0.219 -0.219
676 Q5_4 ~~ Q5_12 12.0 -0.123 -0.123 -0.225 -0.225
141 SC =~ Q7_14 12.0 0.579 0.317 0.304 0.304
127 SC =~ Q6_3 11.8 -0.275 -0.150 -0.163 -0.163
903 Q7_13 ~~ Q7_14 11.8 0.168 0.168 0.204 0.204
862 Q6_8 ~~ Q7_5 11.8 0.067 0.067 0.224 0.224
696 Q5_5 ~~ Q5_12 11.6 -0.115 -0.115 -0.223 -0.223
163 IN =~ Q7_2 11.5 0.329 0.193 0.217 0.217
526 Q4_16 ~~ Q4_17 11.4 0.103 0.103 0.199 0.199
895 Q7_7 ~~ Q7_12 11.3 0.129 0.129 0.214 0.214
183 EN =~ Q4_18 11.2 -0.228 -0.149 -0.189 -0.189
827 Q6_5 ~~ Q6_7 11.0 0.101 0.101 0.207 0.207
373 Q4_5 ~~ Q7_12 10.9 -0.092 -0.092 -0.201 -0.201
883 Q7_4 ~~ Q7_5 10.7 0.095 0.095 0.211 0.211
535 Q4_16 ~~ Q5_12 10.7 0.109 0.109 0.194 0.194
238 Q4_2 ~~ Q4_3 10.7 0.053 0.053 0.206 0.206
656 Q5_3 ~~ Q6_1 10.5 0.096 0.096 0.196 0.196
97 EL =~ Q6_3 10.5 -0.252 -0.167 -0.181 -0.181
177 EN =~ Q4_9 10.5 0.335 0.218 0.226 0.226
769 Q6_1 ~~ Q6_3 10.4 0.071 0.071 0.206 0.206
747 Q5_8 ~~ Q7_8 10.4 0.085 0.085 0.213 0.213
314 Q4_4 ~~ Q4_15 10.2 -0.061 -0.061 -0.200 -0.200
525 Q4_15 ~~ Q7_14 10.2 0.095 0.095 0.193 0.193
730 Q5_6 ~~ Q7_12 10.2 -0.093 -0.093 -0.203 -0.203
875 Q6_11 ~~ Q7_14 10.2 0.117 0.117 0.191 0.191
689 Q5_4 ~~ Q7_7 10.1 0.105 0.105 0.206 0.206
248 Q4_2 ~~ Q4_18 10.1 0.047 0.047 0.201 0.201
882 Q7_2 ~~ Q7_14 10.0 -0.098 -0.098 -0.203 -0.203

ROPE Probability Approx

# set up data: keep the analysis items and rename them y1, ..., y38
dat2 <- mydata[, use.var]
colnames(dat2) <- paste0("y", 1:38)
fit1 <- lavaan::sem(mod1.2, data = dat2)

# Probability method
source("code/utility_functions.R")

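# ========================================== #
# ========================================== #
# function: get_prior_dens()
# ========================================== #
# use: gets the appropriate (log) prior density for
#   the parameter of interest, matched by name
#
# NOTE: with data.table's %like% (a regex match), 'odphi' also matches
#   'dphi' and 'odpsi' also matches 'dpsi'; the off-diagonal tests must
#   stay after the diagonal ones so that they overwrite `out`.
get_prior_dens <- function(pvalue, pname, ...) {
  out <- NULL
  if (pname %like% 'lambda') {
    out <- dnorm(pvalue, 0, 1, log = TRUE)
  }
  if (pname %like% 'dphi') {
    out <- dgamma(pvalue, 1, 0.5, log = TRUE)   # shape = 1, rate = 0.5
  }
  if (pname %like% 'odphi') {
    out <- dnorm(pvalue, 0, 1, log = TRUE)
  }
  if (pname %like% 'dpsi') {
    out <- dgamma(pvalue, 1, 0.5, log = TRUE)   # shape = 1, rate = 0.5
  }
  if (pname %like% 'odpsi') {
    out <- dnorm(pvalue, 0, 1, log = TRUE)
  }
  if (pname %like% 'eta') {
    out <- dnorm(pvalue, 0, 10, log = TRUE)
  }
  if (pname %like% 'tau') {
    out <- dnorm(pvalue, 0, 32, log = TRUE)
  }
  # fail with an informative message instead of "object 'out' not found"
  if (is.null(out)) stop("no prior defined for parameter '", pname, "'")
  return(out)
}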
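Because `%like%` tests for a substring (assuming data.table's operator), the result of `get_prior_dens()` depends on the order of its `if` blocks. A minimal sketch of an order-independent lookup, assuming parameter names consist of a type prefix followed by an index (for example `lambda1` or `odpsi3`; the real names come from `get_starting_values()`, which is not shown here). `get_prior_dens_exact()` and `log_prior_exact()` are hypothetical helpers that reuse the same prior families and hyperparameters as above:

```r
# Hypothetical alternative to get_prior_dens() (not the project's code):
# dispatch on the exact parameter-type prefix, assuming names such as
# "lambda1", "dphi2", "odpsi3" (type prefix followed by an index).
get_prior_dens_exact <- function(pvalue, pname) {
  type <- sub("[0-9]+$", "", pname)   # e.g. "odphi12" -> "odphi"
  switch(type,
    lambda = dnorm(pvalue, 0, 1, log = TRUE),
    dphi   = dgamma(pvalue, shape = 1, rate = 0.5, log = TRUE),
    odphi  = dnorm(pvalue, 0, 1, log = TRUE),
    dpsi   = dgamma(pvalue, shape = 1, rate = 0.5, log = TRUE),
    odpsi  = dnorm(pvalue, 0, 1, log = TRUE),
    eta    = dnorm(pvalue, 0, 10, log = TRUE),
    tau    = dnorm(pvalue, 0, 32, log = TRUE),
    stop("no prior defined for parameter '", pname, "'")
  )
}

# joint log prior of a named parameter vector (independent priors)
log_prior_exact <- function(p) {
  sum(mapply(get_prior_dens_exact, p, names(p)))
}

p <- c(lambda1 = 0.4, dphi1 = 1.2, odphi1 = 0.1)
log_prior_exact(p)
```

With exact matching, `odphi1` can only reach the normal prior, and an unrecognized name stops immediately rather than depending on which `if` ran last.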

# ========================================== #
# ========================================== #
# function: get_log_post()
# ========================================== #
# use: uses the model, parameters, and data to
#   calculate the log posterior
#
# arguments:
#   p           - named vector of parameters
#   sample.data - data frame of raw data
#   cfa.model   - list of model components
#
# NOTE: use_cfa_model() fixes the mean vector (tau) at zero, so this
#   likelihood assumes sample.data is mean-centered.
get_log_post <- function(p, sample.data, cfa.model, ...) {
  out <- use_cfa_model(p, cov(sample.data), cfa.model)
  # multivariate normal log-likelihood, summed over the rows of the data
  log_lik <- sum(apply(sample.data, 1, dmvnorm,
                       mean = out[['tau']],
                       sigma = out[['Sigma']], log = TRUE))
  # independent priors: sum the log prior of each parameter
  # (the loop also covers the single-parameter case)
  log_prior <- 0
  for (i in seq_along(p)) {
    log_prior <- log_prior + get_prior_dens(p[i], names(p)[i])
  }
  log_post <- log_lik + log_prior
  log_post
}
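`get_log_post()` evaluates `dmvnorm()` once per row through `apply()`. The same summed multivariate normal log-likelihood can be computed for all rows at once from a Cholesky factor of the model-implied covariance. A minimal base-R sketch; `mvn_loglik()` is a hypothetical helper introduced here for illustration, and the small covariance matrix and data are made up:

```r
# Hypothetical helper: sum over the rows of X of the multivariate normal
# log density with mean mu and covariance Sigma, computed in one pass.
mvn_loglik <- function(X, mu, Sigma) {
  X <- as.matrix(X)
  n <- nrow(X)
  p <- ncol(X)
  R <- chol(Sigma)                                # Sigma = t(R) %*% R; errors if not PD
  Z <- backsolve(R, t(X) - mu, transpose = TRUE)  # solves t(R) %*% Z = (x_i - mu)
  log_det <- 2 * sum(log(diag(R)))                # log |Sigma|
  -0.5 * (n * p * log(2 * pi) + n * log_det + sum(Z^2))
}

# usage with a small illustrative covariance matrix
Sigma <- matrix(c(1.0, 0.5,
                  0.5, 2.0), nrow = 2)
mu <- c(0.1, -0.2)
X <- rbind(c(0.0, 0.0), c(1.0, -1.0), c(0.5, 2.0))
mvn_loglik(X, mu, Sigma)
```

If a trial value inside `optim()` makes `Sigma` non-positive-definite, `chol()` stops with an error, so a caller using this helper would need to catch that case (for example by returning `-Inf`).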

# ========================================== #
# ========================================== #
# function: use_cfa_model()
# ========================================== #
# use: takes in parameters, data, and model to
#   obtain the model-implied quantities used in the log-likelihood
#
# arguments:
#   theta      - vector of parameters being optimized, ordered as
#                lambda, then phi, then psi elements
#   sample.cov - sample covariance matrix
#   cfa.model  - list of model matrices (lambda, phi, psi); NA marks a free element
use_cfa_model <- function(theta, sample.cov, cfa.model, ...) {
  # compute sample statistics
  p <- ncol(sample.cov)
  S <- sample.cov
  # unpack model
  lambda <- cfa.model[[1]]
  phi <- cfa.model[[2]]
  psi <- cfa.model[[3]]
  #tau <- cfaModel[[4]]
  #eta <- cfaModel[[5]]

  # number of free factor loadings (filled first from theta)
  lam.num <- sum(is.na(lambda))
  lambda[is.na(lambda)] <- theta[seq_len(lam.num)]
  nF <- ncol(lambda)

  # number of free elements in the factor (co)variance matrix
  phi.num <- sum(is.na(phi))
  dphi.num <- sum(is.na(diag(phi)))
  odphi.num <- sum(is.na(phi[lower.tri(phi)]))
  if (phi.num > 0) {
    # free diagonal elements first (only those that are NA), then the rest
    if (dphi.num > 0) {
      d <- diag(phi)
      d[is.na(d)] <- theta[lam.num + seq_len(dphi.num)]
      diag(phi) <- d
    }
    phi[is.na(phi)] <- theta[lam.num + dphi.num + seq_len(phi.num - dphi.num)]
  }
  phi <- low2full(phi) # map lower to upper

  # number of free elements in the error (co)variance matrix;
  # these come after the lambda and phi elements in theta
  psi.num <- sum(is.na(psi))
  dpsi.num <- sum(is.na(diag(psi)))
  odpsi.num <- sum(is.na(psi[lower.tri(psi)]))
  if (psi.num > 0) {
    offset <- lam.num + phi.num
    if (dpsi.num > 0) {
      d <- diag(psi)
      d[is.na(d)] <- theta[offset + seq_len(dpsi.num)]
      diag(psi) <- d
    }
    psi[is.na(psi)] <- theta[offset + dpsi.num + seq_len(psi.num - dpsi.num)]
  }
  psi <- low2full(psi)

  # number of factor scores
  #eta.num <- length(eta)
  #eta <- matrix(theta[(lam.num+phi.num+psi.num+tau.num+1):(lam.num+phi.num+psi.num+tau.num+eta.num)],
  #              nrow=nF)
  # mean center eta
  #for(i in 1:nF){
  #  eta[i, ] <- eta[i,] - mean(eta[,i])
  #}
  # # number of intercepts
  # tau.num <- length(tau)
  # tau <- matrix(theta[(lam.num+phi.num+psi.num+1):(lam.num+phi.num+psi.num+tau.num)], ncol=1)
  # tau <- repeat_col(tau, ncol(eta))
  # compute model observed outcomes
  #Y <- tau + lambda%*%eta

  # intercepts are fixed at zero
  tau <- numeric(p)
  # compute model-implied (co)variance matrix
  Sigma <- lambda %*% phi %*% t(lambda) + psi
  # return model-implied quantities
  out <- list(Sigma = Sigma, lambda = lambda, phi = phi, psi = psi, tau = tau)
  return(out)
}
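A small worked example of the model-implied covariance that `use_cfa_model()` returns, Sigma = Lambda Phi Lambda' + Psi, for a one-factor model with three indicators. The loadings are illustrative, and the residual variances are chosen so that each indicator has unit variance; each off-diagonal element is then simply the product of two loadings:

```r
# Illustrative one-factor, three-indicator model (values are made up)
lambda <- matrix(c(0.8, 0.7, 0.6), ncol = 1)   # factor loadings
phi    <- matrix(1, nrow = 1, ncol = 1)         # factor variance fixed at 1
psi    <- diag(1 - as.vector(lambda)^2)         # residual variances -> unit item variances

# model-implied covariance, as computed in use_cfa_model()
Sigma <- lambda %*% phi %*% t(lambda) + psi
# diagonal = 1; off-diagonals are 0.8*0.7 = 0.56, 0.8*0.6 = 0.48, 0.7*0.6 = 0.42
round(Sigma, 2)
```

Freeing a single extra element (for example a residual covariance in `psi`) changes only the corresponding cell of `Sigma`, which is why the loop can examine candidate parameters one at a time.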

# ========================================== #
# ========================================== #
# function: laplace_local_fit()
# ========================================== #
# use: uses the fitted lavaan object to run
#   the proposed method
#
# arguments:
#   fit            - fitted lavaan model
#   cut.load       - cutoff for value of loading to care about (default = 0.3)
#   cut.cov        - cutoff for value of covariances to care about (default = 0.1)
#   standardize    - logical for whether to standardize
#   opt            - list of parameters to pass to interior functions
#   all.parameters - logical; if TRUE, every element of lambda, phi, and psi is examined
#   sum.print      - logical indicator of whether to print the summary table upon completion
#   pb             - logical indicator of whether to display a progress bar
#
#laplace_local_fit <- function(fit, cut.load = 0.3, cut.cov = 0.1, standardize=T,
#                              opt=list(scale.cov=1, no.samples=1000),
#                              all.parameters=F,
#                              sum.print=F, pb=T,...){

# argument values used in this run (cut.load and cut.cov differ from the defaults above)
fit <- fit1
cut.load <- 0.6
cut.cov <- 0.25
standardize <- TRUE
opt <- list(scale.cov = 1, no.samples = 1000)
all.parameters <- FALSE
sum.print <- FALSE
pb <- TRUE

# observed data
sampleData <- fit@Data@X[[1]]
# sample covariance matrix
sampleCov <- fit@SampleStats@cov[[1]]
# extract model
extractedLavaan <- lavMatrixRepresentation(partable(fit))
factNames <- unique(extractedLavaan[extractedLavaan[, "mat"] == "lambda", "lhs"])
varNames <- unique(extractedLavaan[extractedLavaan[, "mat"] == "lambda", "rhs"])

# extract factor loading matrix
lambda <- extractedLavaan[extractedLavaan$mat == "lambda", ]
lambda <- convert2matrix(lambda$row, lambda$col, lambda$est)
colnames(lambda) <- factNames
rownames(lambda) <- varNames

# extract factor covariance matrix
phi <- extractedLavaan[extractedLavaan$mat == "psi", ]
phi <- convert2matrix(phi[, 'row'], phi[, 'col'], phi[, 'est'])
phi <- up2full(phi)
colnames(phi) <- rownames(phi) <- factNames

# extract error covariance matrix
psi <- extractedLavaan[extractedLavaan$mat == "theta", ]
psi <- convert2matrix(psi[, 'row'], psi[, 'col'], psi[, 'est'])
psi[upper.tri(psi)] <- 0
colnames(psi) <- rownames(psi) <- varNames

# need to create list of all NA parameters in the above matrices
if (all.parameters) {
  lambdaA <- lambda
  phiA <- phi
  psiA <- psi
  lambdaA[!is.na(lambdaA)] <- NA
  phiA[!is.na(phiA)] <- NA
  psiA[!is.na(psiA)] <- NA
} else {
  lambdaA <- lambda
  phiA <- phi
  psiA <- psi
}
lamList <- as.matrix(which(is.na(lambdaA), arr.ind = TRUE))
il <- nrow(lamList)
phiList <- as.matrix(which(is.na(phiA), arr.ind = TRUE))
ip <- il + nrow(phiList)
psiList <- as.matrix(which(is.na(psiA), arr.ind = TRUE))
it <- ip + nrow(psiList)
modList <- rbind(lamList, phiList, psiList)

# create names for each candidate parameter; the column (and row) indices
# returned by which() index factNames / varNames directly
vnlamList <- lamList
vnlamList[, 2] <- factNames[lamList[, 2]]
vnlamList[, 1] <- rownames(lamList)
vnlamList[, 2] <- paste0(vnlamList[, 2], "=~", vnlamList[, 1])
vnphiList <- phiList
if (nrow(phiList) > 0) {
  vnphiList[, 1] <- factNames[phiList[, 1]]
  vnphiList[, 2] <- paste0(vnphiList[, 1], "~~", factNames[phiList[, 2]])
}
vnpsiList <- psiList
vnpsiList[, 1] <- rownames(psiList)
vnpsiList[, 2] <- paste0(vnpsiList[, 1], "~~", varNames[psiList[, 2]])
nameList <- rbind(vnlamList, vnphiList, vnpsiList)
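The naming step relies on `which(..., arr.ind = TRUE)`, which returns one row per `NA` cell with `row` and `col` indices (carrying the matched row names). A self-contained toy illustration, with made-up factor and item names, of how those indices can index the name vectors directly to build lavaan-style labels such as `F1=~y3`:

```r
# Toy loading matrix (made-up names): NA marks a loading that is fixed
# out of the model, i.e. a candidate cross-loading to examine.
lambda <- matrix(c(0.7, 0.6,  NA,  NA,
                    NA,  NA, 0.8, 0.5),
                 ncol = 2,
                 dimnames = list(paste0("y", 1:4), c("F1", "F2")))

# one row per NA cell, columns "row" and "col", in column-major order
idx <- which(is.na(lambda), arr.ind = TRUE)

# build labels by indexing the dimnames with the returned indices
param_labels <- paste0(colnames(lambda)[idx[, "col"]], "=~",
                       rownames(lambda)[idx[, "row"]])
param_labels
```

Indexing the name vectors directly avoids rebuilding a `factor()` whose levels must line up with the labels, which breaks when some factor has no candidate cells.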

# ========================================================== #
# ========================================================== #
# iterate around this function: free one candidate parameter at a time,
# find the posterior mode, and draw from the normal (Laplace) approximation
fitResults <- matrix(nrow = opt[[2]], ncol = it)
# progress bar
if (pb) progress_bar <- txtProgressBar(min = 0, max = it, style = 3)
iter <- 1
for (iter in 1:it) {
  # extract iteration information from modList
  x <- modList[iter, ]
  # do we need to update lambda? (NA marks the single freed element)
  Q <- lambda
  Q[is.na(Q)] <- 0
  if (iter <= il) Q[x[1], x[2]] <- NA
  lambdaMod <- Q
  # update phi?
  Q <- phi
  Q[is.na(Q)] <- 0
  if (iter > il && iter <= ip) Q[x[1], x[2]] <- NA
  phiMod <- Q
  # update psi?
  Q <- psi
  Q[is.na(Q)] <- 0
  if (iter > ip) Q[x[1], x[2]] <- NA
  psiMod <- Q
  # combine into a single list
  cfaModel <- list(lambdaMod, phiMod, psiMod) #, tauMod, etaMod
  # get starting values
  inits <- get_starting_values(cfaModel)
  # use optim() to find the posterior mode (fnscale = -1 maximizes).
  # With a single free parameter the default Nelder-Mead method triggers
  # the warning shown below. Note that this overwrites `fit` (the lavaan
  # object); sampleData and sampleCov were already extracted above.
  fit <- optim(inits, get_log_post, control = list(fnscale = -1),
               hessian = TRUE,
               sample.data = sampleData, cfa.model = cfaModel)
  param_mean <- fit$par
  # covariance of the approximation: inverse of the negative (numerical)
  # Hessian returned by optim() at param_mean
  #hess <- numDeriv::hessian(model, param_mean, ...)
  #param_cov_mat <- solve(-hess)
  param_cov_mat <- solve(-fit$hessian)
  # scaled covariance matrix (artificially inflate uncertainty);
  # A = scale.cov * I, so the variances are multiplied by scale.cov^2
  scale.cov <- opt[[1]]
  A <- diag(scale.cov, nrow = nrow(param_cov_mat), ncol = ncol(param_cov_mat))
  param_cov_mat <- A %*% param_cov_mat %*% t(A)
  # sample from the approximate posterior
  no.samples <- opt[[2]]
  fitResults[, iter] <- mcmc(rmvnorm(no.samples, param_mean, param_cov_mat))
  if (pb) setTxtProgressBar(progress_bar, iter)
}

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly
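The loop above applies a Laplace approximation to one freed parameter at a time: locate the posterior mode with `optim()`, invert the negative Hessian to get a variance, and draw from the resulting normal distribution. Because each call has a single free parameter, R recommends `optimize()` (or method `"Brent"`) over Nelder-Mead. A toy one-dimensional sketch of the same steps, using a normal-mean model whose exact posterior is known (precision n + 1/100, mean sum(y)/(n + 1/100)). The data, the prior, and treating the ROPE as the interval (-cut, cut) are assumptions made for illustration only:

```r
# Toy model (illustrative only): y_i ~ N(mu, 1), prior mu ~ N(0, 10^2)
y <- c(0.3, 1.1, -0.4, 0.8, 1.5, 0.2)

neg_log_post <- function(mu) {
  -(sum(dnorm(y, mean = mu, sd = 1, log = TRUE)) + dnorm(mu, 0, 10, log = TRUE))
}

# 1) posterior mode with a dedicated 1-D optimizer (no Nelder-Mead warning)
mu_hat <- optimize(neg_log_post, interval = c(-10, 10))$minimum

# 2) Hessian of the NEGATIVE log posterior at the mode; its inverse is the
#    variance of the normal (Laplace) approximation
H <- optimHess(mu_hat, neg_log_post)
post_sd <- sqrt(drop(solve(H)))

# 3) probability that mu lies outside the assumed ROPE (-cut, cut)
cut <- 0.3
p_outside <- pnorm(-cut, mu_hat, post_sd) +
  pnorm(cut, mu_hat, post_sd, lower.tail = FALSE)

# Monte Carlo version, analogous to the rmvnorm() draws in the loop
set.seed(1)
draws <- rnorm(1000, mu_hat, post_sd)
p_outside_mc <- mean(abs(draws) > cut)
c(analytic = p_outside, monte_carlo = p_outside_mc)
```

For this normal posterior the Laplace approximation is exact; for CFA loadings and variances it is only an approximation, and `optimize()` needs a finite search interval, just as `method = "Brent"` in `optim()` needs finite `lower` and `upper` bounds.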


use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

|

|============ | 17%Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

|

|============ | 18%Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

|

|============= | 18%Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

|

|============= | 19%Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

|

|============== | 19%Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

|

|============== | 20%Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

|

|============== | 21%Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

|

|=============== | 21%Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly

Warning in optim(inits, get_log_post, control = list(fnscale = -1), hessian = TRUE, : one-dimensional optimization by Nelder-Mead is unreliable:

use "Brent" or optimize() directly