Netflix Titles NLP

Jim Gruman

April 20, 2021

Last updated: 2021-10-07

Checks: 7 0

Knit directory: myTidyTuesday/

This reproducible R Markdown analysis was created with workflowr (version 1.6.2). The Checks tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

Great! Since the R Markdown file has been committed to the Git repository, you know the exact version of the code that produced these results.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproduciblity it’s best to always run the code in an empty environment.

The command set.seed(20210907) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great job! Using relative paths to the files within your workflowr project makes it easier to run your code on other machines.

Great! You are using Git for version control. Tracking code development and connecting the code version to the results is critical for reproducibility.

The results in this page were generated with repository version 65449f7. See the Past versions tab to see a history of the changes made to the R Markdown and HTML files.

Note that you need to be careful to ensure that all relevant files for the analysis have been committed to Git prior to generating the results (you can use wflow_publish or wflow_git_commit). workflowr only checks the R Markdown file, but you know if there are other scripts or data files that it depends on. Below is the status of the Git repository when the results were generated:

Ignored files:

Ignored: .Rhistory

Ignored: .Rproj.user/

Ignored: catboost_info/

Ignored: data/2021-09-08/

Ignored: data/CNHI_Excel_Chart.xlsx

Ignored: data/CommunityTreemap.jpeg

Ignored: data/Community_Roles.jpeg

Ignored: data/YammerDigitalDataScienceMembership.xlsx

Ignored: data/acs_poverty.rds

Ignored: data/fmhpi.rds

Ignored: data/grainstocks.rds

Ignored: data/hike_data.rds

Ignored: data/nber_rs.rmd

Ignored: data/netflixTitles.rmd

Ignored: data/netflixTitles2.rds

Ignored: data/us_states.rds

Ignored: data/us_states_hexgrid.geojson

Ignored: data/weatherstats_toronto_daily.csv

Untracked files:

Untracked: code/YammerReach.R

Untracked: code/work list batch targets.R

Note that any generated files, e.g. HTML, png, CSS, etc., are not included in this status report because it is ok for generated content to have uncommitted changes.

These are the previous versions of the repository in which changes were made to the R Markdown (analysis/2021_04_20.Rmd) and HTML (docs/2021_04_20.html) files. If you’ve configured a remote Git repository (see ?wflow_git_remote), click on the hyperlinks in the table below to view the files as they were in that past version.

| File | Version | Author | Date | Message |

|---|---|---|---|---|

| Rmd | 65449f7 | opus1993 | 2021-10-07 | add Netflix titles NLP |

knitr::include_graphics("assets/netflix.png", error = FALSE)

The #TidyTuesday data this week is Netflix’s streaming catalog titles with descriptions, genre classifications, actors, directors, dates, and other metadata.

Introduction

First, I want to acknowledge that I benefited from the guidance and work of the following:

David Robinson’s Youtube screencast and code at the repo here.

Julia Silge’s Which #TidyTuesday Netflix titles are movies and which are TV shows?

Mark H White, PhD’s blog post EXPLORING THE STAR WARS “PREQUEL RENAISSANCE” USING TIDYMODELS AND WORKFLOWSETS

Max Kuhn and Julia Silge’s in-progres book Tidy Modeling With R Chapter 15: Screening Many Models

Max Kuhn’s LA R User Group talk on 18May2021

My goal is to explore and classify using the tidymodels framework and workflowsets to predict the genre of the titles on the streaming services. Dr. Silge hinted in her broadcast that a multi-class prediction would be possible with a neural net. Let’s explore that path.

First, let’s load libraries and set a ggplot theme:

suppressPackageStartupMessages({

library(tidyverse)

library(lubridate)

library(hrbrthemes)

library(tidytext)

library(tidymodels)

library(themis)

library(textrecipes)

library(discrim)

library(finetune) # fast hyperparameter selection

library(workflowsets)

library(tidyposterior)

library(stacks)

})

source(here::here("code","_common.R"),

verbose = FALSE,

local = knitr::knit_global())

ggplot2::theme_set(theme_jim(base_size = 12))Let’s load the data and clean up the duration movie field and date_added fields.

netflix_titles <-

tidytuesdayR::tt_load("2021-04-20")$netflix_titles %>%

separate(duration,

c("duration", "duration_units"),

sep = " ",

convert = TRUE

) %>%

mutate(

date_added = mdy(date_added),

year_added = year(date_added)

)

Downloading file 1 of 1: `netflix_titles.csv`Exploration

Skimming

The dataset includes 7,787 titles, both as movies and TV shows. Other summary details of the dataset, as provided by skimr

skimr::skim(netflix_titles)| Name | netflix_titles |

| Number of rows | 7787 |

| Number of columns | 14 |

| _______________________ | |

| Column type frequency: | |

| character | 10 |

| Date | 1 |

| numeric | 3 |

| ________________________ | |

| Group variables | None |

Variable type: character

| skim_variable | n_missing | complete_rate | min | max | empty | n_unique | whitespace |

|---|---|---|---|---|---|---|---|

| show_id | 0 | 1.00 | 2 | 5 | 0 | 7787 | 0 |

| type | 0 | 1.00 | 5 | 7 | 0 | 2 | 0 |

| title | 0 | 1.00 | 1 | 104 | 0 | 7787 | 0 |

| director | 2389 | 0.69 | 2 | 208 | 0 | 4049 | 0 |

| cast | 718 | 0.91 | 3 | 771 | 0 | 6831 | 0 |

| country | 507 | 0.93 | 4 | 123 | 0 | 681 | 0 |

| rating | 7 | 1.00 | 1 | 8 | 0 | 14 | 0 |

| duration_units | 0 | 1.00 | 3 | 7 | 0 | 3 | 0 |

| listed_in | 0 | 1.00 | 6 | 79 | 0 | 492 | 0 |

| description | 0 | 1.00 | 61 | 248 | 0 | 7769 | 0 |

Variable type: Date

| skim_variable | n_missing | complete_rate | min | max | median | n_unique |

|---|---|---|---|---|---|---|

| date_added | 10 | 1 | 2008-01-01 | 2021-01-16 | 2019-03-08 | 1512 |

Variable type: numeric

| skim_variable | n_missing | complete_rate | mean | sd | p0 | p25 | p50 | p75 | p100 | hist |

|---|---|---|---|---|---|---|---|---|---|---|

| release_year | 0 | 1 | 2013.93 | 8.76 | 1925 | 2013 | 2017 | 2018 | 2021 | ▁▁▁▁▇ |

| duration | 0 | 1 | 69.12 | 50.95 | 1 | 2 | 88 | 106 | 312 | ▆▇▂▁▁ |

| year_added | 10 | 1 | 2018.49 | 1.39 | 2008 | 2018 | 2019 | 2020 | 2021 | ▁▁▁▆▇ |

Genres

The listed_in character field associates individual titles with one or more genres. When separated out, the count of titles in each genre includes:

netflix_titles %>%

separate_rows(listed_in, sep = ", ") %>%

count(listed_in, sort = TRUE)Film Duration

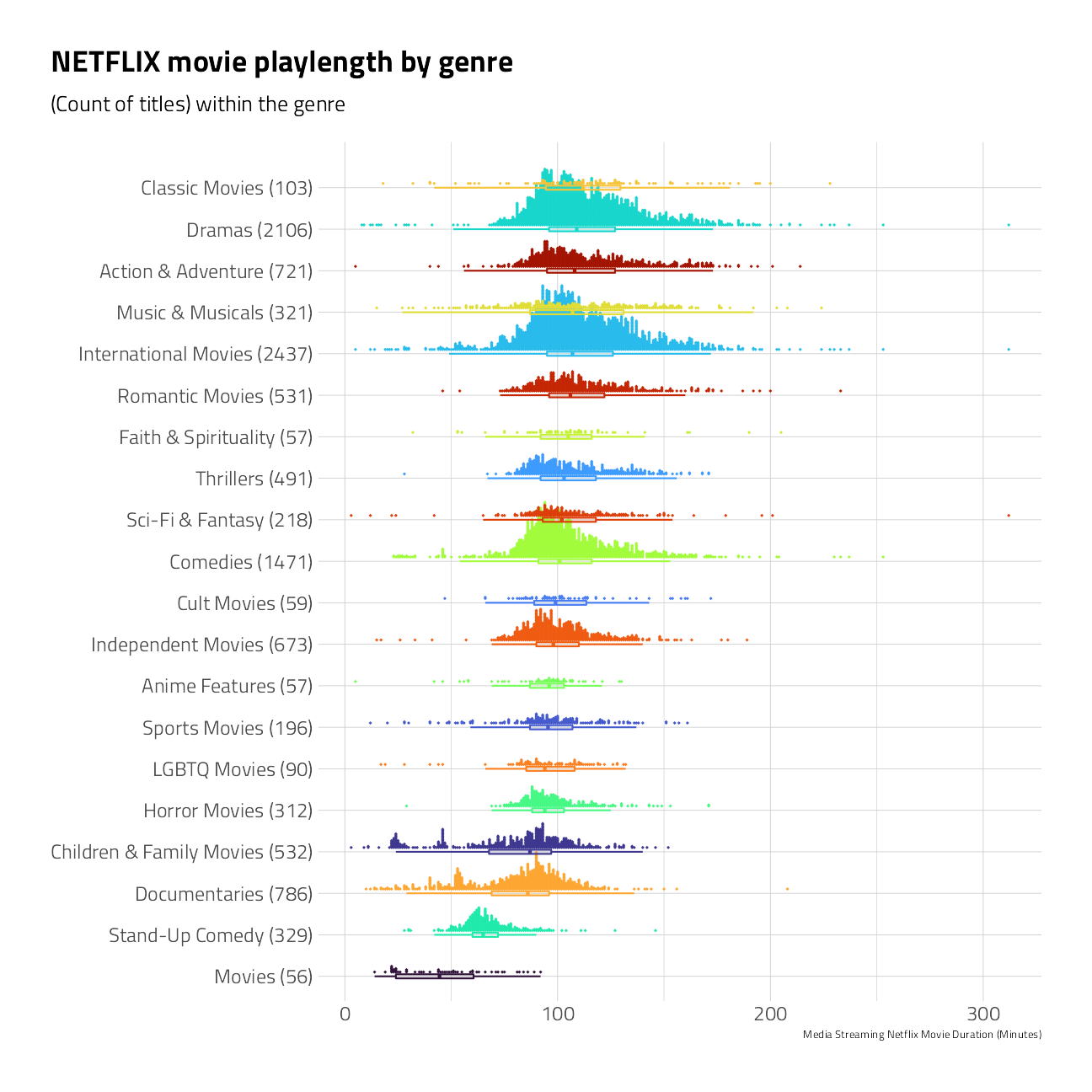

Let’s take a quick peek at the median time duration of only the movies for each genre. Note again that the same title can often be counted in more than one genre grouping.

netflix_titles %>%

separate_rows(listed_in, sep = ", ") %>%

filter(type == "Movie") %>%

group_by(type, genre = listed_in) %>%

mutate(

n = n()

) %>%

ungroup() %>%

mutate(

genre = glue::glue("{ genre } ({ n })"),

genre = fct_reorder(genre, duration)

) %>%

filter(genre != "Movies") %>%

ungroup() %>%

ggplot(aes(duration, genre)) +

ggdist::stat_dots(

aes(color = genre),

side = "top",

justification = -0.1,

binwidth = 0.5,

show.legend = FALSE

) +

geom_boxplot(aes(

color = genre,

fill = stage(genre,

after_scale = ggplot2::alpha(fill, 0.1)

)

),

width = 0.1,

outlier.shape = NA,

show.legend = FALSE

) +

scale_color_manual(values = viridis::viridis_pal(option = "H")(21)[c(1, 8, 15, 2, 9, 16, 3, 10, 17, 4, 11, 18, 5, 12, 19, 6, 13, 20, 7, 14, 21)]) +

scale_fill_manual(values = viridis::viridis_pal(option = "H")(21)[c(1, 8, 15, 2, 9, 16, 3, 10, 17, 4, 11, 18, 5, 12, 19, 6, 13, 20, 7, 14, 21)]) +

labs(

x = "Media Streaming Netflix Movie Duration (Minutes)",

y = NULL,

title = "NETFLIX movie playlength by genre",

subtitle = "(Count of titles) within the genre"

)

The median of Netflix titles labeled as Documentaries and Stand-Up Comedy movies are shorter in duration than Dramas and Classic Movies.

Feature Engineering

Given the description, cast members, director, and release_year, can we build a useful model to predict the genre?

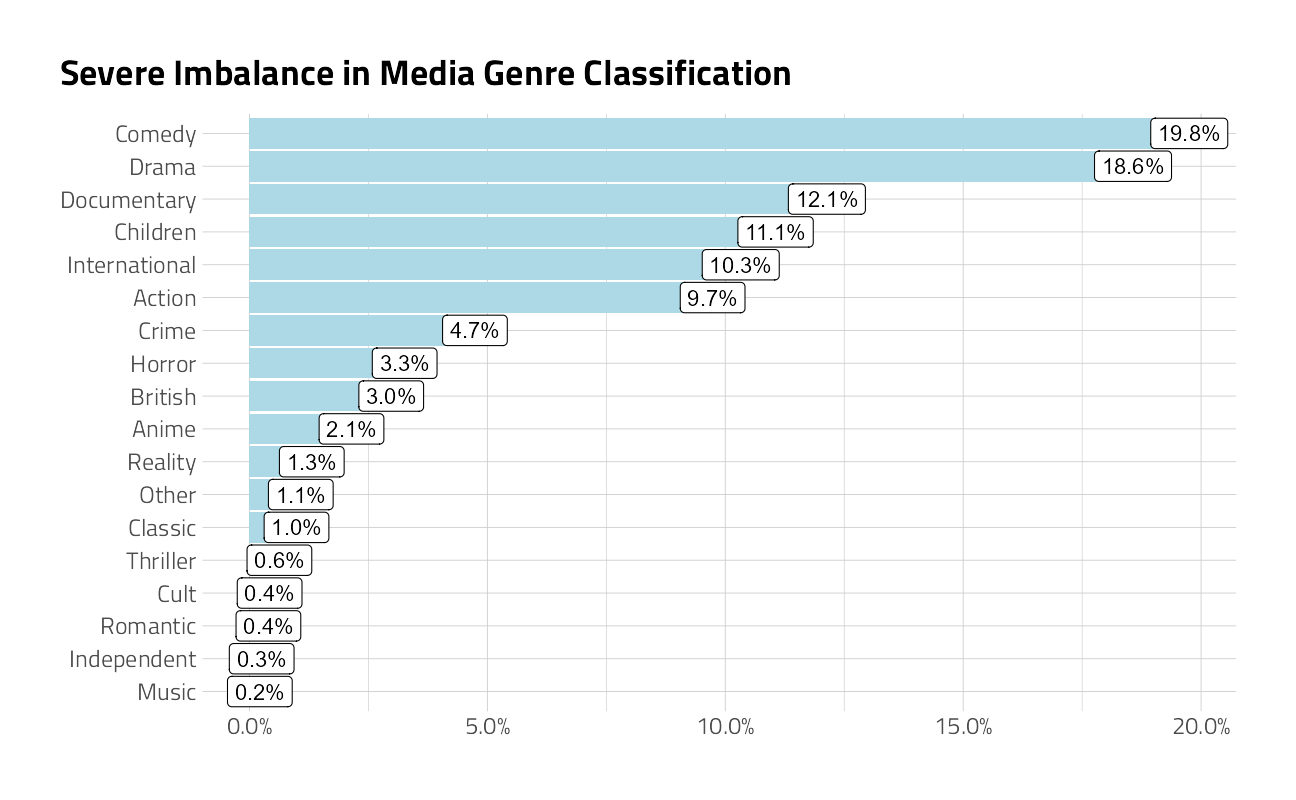

Unbalanced multi-class problems are especially challenging. And in the Netflix dataset, most movies belong to two or three genres at the same time. To make this problem just a little less complex, we will bundle some genre’s into logical groupings. I am also only using the first listed_in genre, as the primary. The result is 20 genres.

netflix_df <- netflix_titles %>%

select(

genre = listed_in,

show_id,

description,

cast,

director,

release_year

) %>%

separate_rows(genre, sep = ", ") %>%

mutate(genre = case_when(

str_detect(genre, "International") ~ "International",

str_detect(genre, "Romantic") ~ "Romantic",

str_detect(genre, "Drama") ~ "Drama",

str_detect(genre, "Action") ~ "Action",

str_detect(genre, "Comed") ~ "Comedy",

str_detect(genre, "Cult") ~ "Cult",

str_detect(genre, "Thrill") ~ "Thriller",

str_detect(genre, "Teen|Children|Kids") ~ "Children",

str_detect(genre, "Science|Docu") ~ "Documentary",

str_detect(genre, "Anime") ~ "Anime",

str_detect(genre, "Horror") ~ "Horror",

str_detect(genre, "Independent") ~ "Independent",

str_detect(genre, "Crime") ~ "Crime",

str_detect(genre, "Music") ~ "Music",

str_detect(genre, "British") ~ "British",

str_detect(genre, "Reality") ~ "Reality",

str_detect(genre, "Classic") ~ "Classic",

str_detect(genre, "Myster") ~ "Mysteries",

str_detect(genre, "Faith") ~ "Faith",

TRUE ~ "Other"

)) %>%

group_by(show_id) %>%

mutate(position = row_number()) %>%

ungroup() %>%

pivot_wider(

names_from = position,

values_from = genre,

names_prefix = "genre"

) %>%

mutate(genre1 = as.factor(genre1))netflix_df %>%

count(genre1) %>%

mutate(pct = n / sum(n)) %>%

ggplot(aes(pct, fct_reorder(genre1, pct))) +

geom_col(fill = "lightblue") +

scale_x_continuous(labels = scales::percent) +

geom_label(aes(label = scales::percent(pct, accuracy = .1))) +

labs(

title = "Severe Imbalance in Media Genre Classification",

y = NULL, x = NULL

)

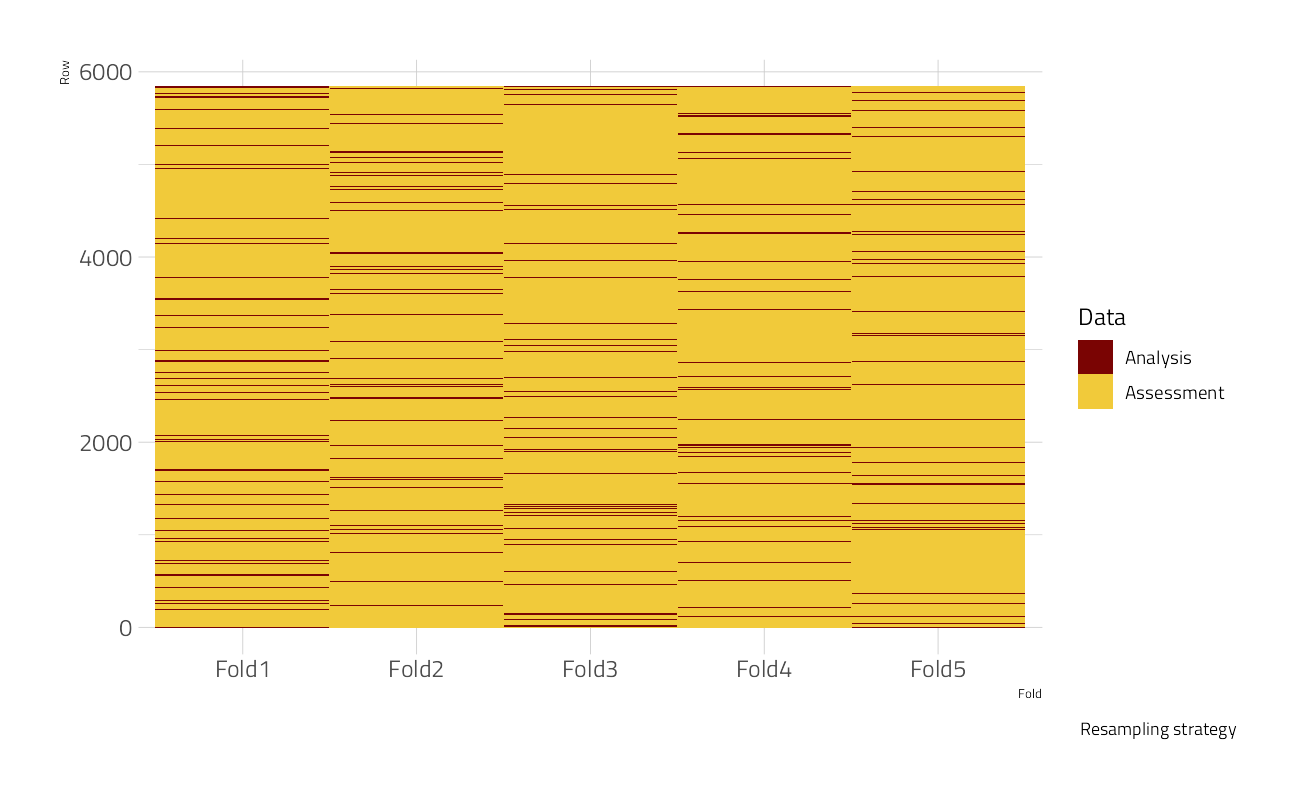

Let’s split the data using the default 3:1 ratio of training-to-test and resample the training set using 5-fold cross-validation. V-fold cross-validation randomly splits the data into V groups (5) of roughly equal size (called “folds”). A resample of the analysis data consists of V-1 (4) of the folds while the assessment set contains the final fold. In basic V-fold cross-validation (i.e. no repeats), the number of resamples is equal to V.

Cross Validation

The strata argument causes the random sampling to be conducted within the stratification variable, in this case genre1.

set.seed(1501)

netflix_split <- initial_split(netflix_df, strata = genre1)

netflix_train <- training(netflix_split)

netflix_test <- testing(netflix_split)

netflix_folds <- vfold_cv(netflix_train,

v = 5, strata = genre1

)ggplot(

netflix_folds %>% tidy(),

aes(Fold, Row, fill = Data)

) +

geom_tile() +

labs(caption = "Resampling strategy")

Our dataset will be pre-processed through several different recipes that engineer text features by tokenizing the description, cast, and the director fields.

The first recipe applies filters to use the words, cast members, and director with the 30 highest term frequencies after removing stop words and stemming.

The second filter the description words to remove stopwords and then builds 25 word embeddings with Glove Twitter 27B. Learn more about word embeddings and pre-trained model transfer learning here. The recipe goes on to hash the cast member and director features into numeric variables with the hashing trick. All models are centered and scaled. There are no missing values.

With a big multi-class challenge, we need to take action to balance the classes. smote upsamples members of the minority classes with nearest neighbors imputation (in the training set only). upsampling replicates rows of the minority classes to match the count of the members of the largest class member.

Pre-processing

(token_rec <- recipe(genre1 ~ description + cast + director,

data = netflix_train

) %>%

# upsample training set so each class has the same number of rows

step_upsample(genre1) %>%

# work with description character field

step_tokenize(description) %>%

step_stopwords(description) %>%

step_stem(description) %>%

step_word_embeddings(description,

embeddings = textdata::embedding_glove27b()

) %>%

# work with the cast members character field

step_tokenize(cast,

token = "regex",

options = list(pattern = ", ")

) %>%

step_tokenfilter(cast, max_tokens = 200) %>%

step_texthash(cast, num_terms = 6) %>%

# work with the director member character field

step_tokenize(director,

token = "regex",

options = list(pattern = ", ")

) %>%

step_tokenfilter(director, max_tokens = 200) %>%

step_texthash(director, num_terms = 10) %>%

# normalize for any engine with regularization

step_normalize(all_numeric())

)Recipe

Inputs:

role #variables

outcome 1

predictor 3

Operations:

Up-sampling based on genre1

Tokenization for description

Stop word removal for description

Stemming for description

Word embeddings aggregated from description

Tokenization for cast

Text filtering for cast

Feature hashing with cast

Tokenization for director

Text filtering for director

Feature hashing with director

Centering and scaling for all_numeric()Text Embeddings Recipe

And a look at the features of the upsampled “embeddings” recipe:

token_rec %>%

prep() %>%

bake(new_data = NULL) %>%

glimpse()Rows: 21,006

Columns: 42

$ director_hash01 <dbl> -0.02893736, -0.02893736, -0.02893736, -0.02893736, -0~

$ director_hash02 <dbl> 0.01096156, 0.01096156, 0.01096156, 0.01096156, 0.0109~

$ director_hash03 <dbl> 0.05915029, 0.05915029, 0.05915029, 0.05915029, 0.0591~

$ director_hash04 <dbl> -0.7685871, -0.7685871, -0.7685871, -0.7685871, -0.768~

$ director_hash05 <dbl> -0.03928938, -0.03928938, -0.03928938, -0.03928938, -0~

$ director_hash06 <dbl> 0.01435543, 0.01435543, 0.01435543, 0.01435543, 0.0143~

$ director_hash07 <dbl> 0.04672439, 0.04672439, 0.04672439, 0.04672439, 0.0467~

$ director_hash08 <dbl> -0.0485395, -0.0485395, -0.0485395, -0.0485395, -0.048~

$ director_hash09 <dbl> -0.03925078, -0.03925078, -0.03925078, -0.03925078, -0~

$ director_hash10 <dbl> -0.009853192, -0.009853192, -0.009853192, -0.009853192~

$ cast_hash1 <dbl> -0.08471693, -0.08471693, -3.38020567, -0.08471693, -0~

$ cast_hash2 <dbl> 0.09249959, 0.09249959, 0.09249959, 0.09249959, 0.0924~

$ cast_hash3 <dbl> -0.05483196, -0.05483196, -0.05483196, -0.05483196, -0~

$ cast_hash4 <dbl> -0.08204554, -0.08204554, -0.08204554, -0.08204554, -0~

$ cast_hash5 <dbl> -0.00775839, -0.00775839, 2.25575196, -0.00775839, -0.~

$ cast_hash6 <dbl> -0.04166601, -0.04166601, -0.04166601, -0.04166601, -0~

$ genre1 <fct> Action, Action, Action, Action, Action, Action, Action~

$ w_embed_sum_d1 <dbl> -0.88769404, -0.42409688, 1.13895629, -1.66692534, 0.1~

$ w_embed_sum_d2 <dbl> 0.8027839, 1.9160799, 0.8872131, 0.3691772, -1.6421994~

$ w_embed_sum_d3 <dbl> -0.45137723, -0.07343944, -0.78374714, -0.98154366, -1~

$ w_embed_sum_d4 <dbl> 0.2481995, 1.0630392, 0.1830963, 1.6405290, 0.7708223,~

$ w_embed_sum_d5 <dbl> 0.100271613, -1.313108020, -0.450255723, 0.053833303, ~

$ w_embed_sum_d6 <dbl> 0.98962476, -0.54912573, 0.38250539, 0.42568428, 0.122~

$ w_embed_sum_d7 <dbl> -0.41984969, -0.26710037, 0.03264045, -0.40406612, -1.~

$ w_embed_sum_d8 <dbl> -1.0401391, -0.2923903, 0.3710757, -0.9874991, -2.0057~

$ w_embed_sum_d9 <dbl> 1.1823848, 0.6338659, -0.2088298, 1.0279676, 0.4180787~

$ w_embed_sum_d10 <dbl> 1.560526621, 0.408680891, 1.597693635, -0.194099924, 0~

$ w_embed_sum_d11 <dbl> 0.06575798, 1.52348731, 1.84824395, -0.69609988, 0.091~

$ w_embed_sum_d12 <dbl> 0.03596779, 0.19906447, 0.70283170, 0.90545887, -0.254~

$ w_embed_sum_d13 <dbl> 0.7670217, 0.2198714, 0.7434887, 0.1240857, 0.4057891,~

$ w_embed_sum_d14 <dbl> 0.23182713, -1.16364635, -0.93447456, 1.27965719, 0.28~

$ w_embed_sum_d15 <dbl> 0.152729431, 1.426433309, -1.104981440, 0.002337636, 0~

$ w_embed_sum_d16 <dbl> 1.42214118, -0.39406305, -1.02111869, -0.41868217, -0.~

$ w_embed_sum_d17 <dbl> -0.16916311, -0.48377838, -0.46560217, 0.60142401, -0.~

$ w_embed_sum_d18 <dbl> -0.09388500, -0.37158055, -0.99407908, -0.35556000, 1.~

$ w_embed_sum_d19 <dbl> 0.32575707, -0.49348854, 0.16692971, 1.07728364, 1.075~

$ w_embed_sum_d20 <dbl> -0.79798799, 0.24579381, -1.62909190, -1.24971495, -0.~

$ w_embed_sum_d21 <dbl> 0.41841392, -0.00691488, -0.46134090, 0.90286682, -0.5~

$ w_embed_sum_d22 <dbl> 1.6369944284, 1.8566314246, -0.4315628413, 1.469026277~

$ w_embed_sum_d23 <dbl> 0.54119547, 2.21096826, -0.29194279, 0.34880442, 0.150~

$ w_embed_sum_d24 <dbl> -0.662200013, 0.862445045, 0.977315898, -1.452283239, ~

$ w_embed_sum_d25 <dbl> 0.59674274, 0.53158162, 0.09021243, 0.31440704, -0.137~Machine Learning

For the models themselves, we use the the parsnip package to create sets of model specifications. All are setup for multi-class classification.

logistic_reg_glmnet_spec <-

multinom_reg(

penalty = tune(),

mixture = 0.01

) %>%

set_engine("glmnet") %>%

set_mode("classification")

nb_spec <- naive_Bayes() %>%

set_mode("classification") %>%

set_engine("naivebayes")

svm_spec <-

svm_linear() %>%

set_mode("classification") %>%

set_engine("LiblineaR")Workflowsets

This is where the cool part comes in: We now define a “workflow set.” We apply cross = TRUE to look at all combinations of recipes and models.

(wfs <-

workflow_set(

preproc = list(embeddings = token_rec),

models = list(

nb_spec,

logistic_reg_glmnet_spec,

svm_spec

)

)

)And we see we have our six different workflows set up.

Tuning and Cross Validation

The engines chosen here require no tuning. We will load register a parallel backend to speed the computations across the cross validation folds.

all_cores <- parallelly::availableCores(omit = 1)

future::plan("multisession", workers = all_cores) # on Windows

ctrl <-

control_grid(

save_pred = FALSE,

parallel_over = "everything",

save_workflow = FALSE

)

system.time(

cv_res <- wfs %>%

workflow_map("tune_grid",

seed = 2021,

resamples = netflix_folds,

control = ctrl,

grid = 20,

metrics = metric_set(

accuracy,

sensitivity,

specificity,

j_index

),

verbose = TRUE

)

)

future::plan(strategy = "sequential")i No tuning parameters. `fit_resamples()` will be attempted

i 1 of 3 resampling: embeddings_naive_Bayes

v 1 of 3 resampling: embeddings_naive_Bayes (30.8s)

i 2 of 3 tuning: embeddings_multinom_reg

v 2 of 3 tuning: embeddings_multinom_reg (2m 29.2s)

i No tuning parameters. `fit_resamples()` will be attempted

i 3 of 3 resampling: embeddings_svm_linear

v 3 of 3 resampling: embeddings_svm_linear (4m 27.1s)

user system elapsed

448.23 0.31 449.03 Note the run times.

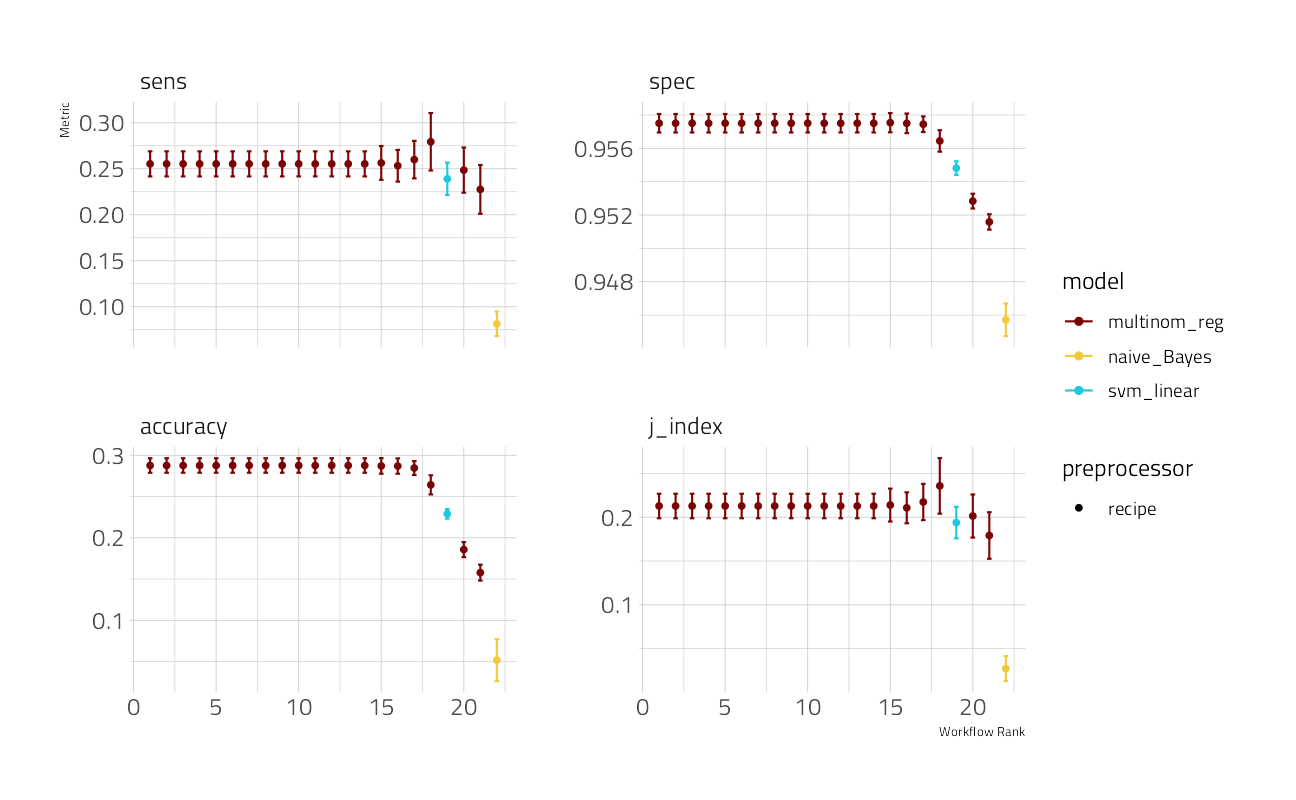

Compare the models

We can then look at the results. What are the top workflows?

autoplot(cv_res)

cv_res %>%

rank_results(select_best = TRUE) %>%

pivot_wider(

id_cols = wflow_id,

names_from = .metric,

values_from = mean

) %>%

select(wflow_id, accuracy, j_index, sens, spec)So the GLMnet runs fast and delivers the best results of the three engines used. Let’s see what we can do to improve it, still using the same resample folds.

logistic_reg_glmnet_spec <-

multinom_reg(

penalty = tune(),

mixture = tune()

) %>%

set_engine("glmnet") %>%

set_mode("classification")

glm_wf <- workflow(token_rec, logistic_reg_glmnet_spec)

ctrl <-

control_grid(

save_pred = TRUE,

parallel_over = "everything",

save_workflow = FALSE

)

all_cores <- parallelly::availableCores(omit = 1)

future::plan("multisession", workers = all_cores) # on Windows

cv_glmnet <- glm_wf %>%

tune_grid(

resamples = netflix_folds,

control = ctrl,

grid = 20,

metrics = metric_set(

roc_auc,

accuracy,

sensitivity,

specificity,

mn_log_loss

)

)

future::plan(strategy = "sequential")

autoplot(cv_glmnet)Not bad. Let’s look more closely at performance across cross validation folds and prediction classes next.

Performance

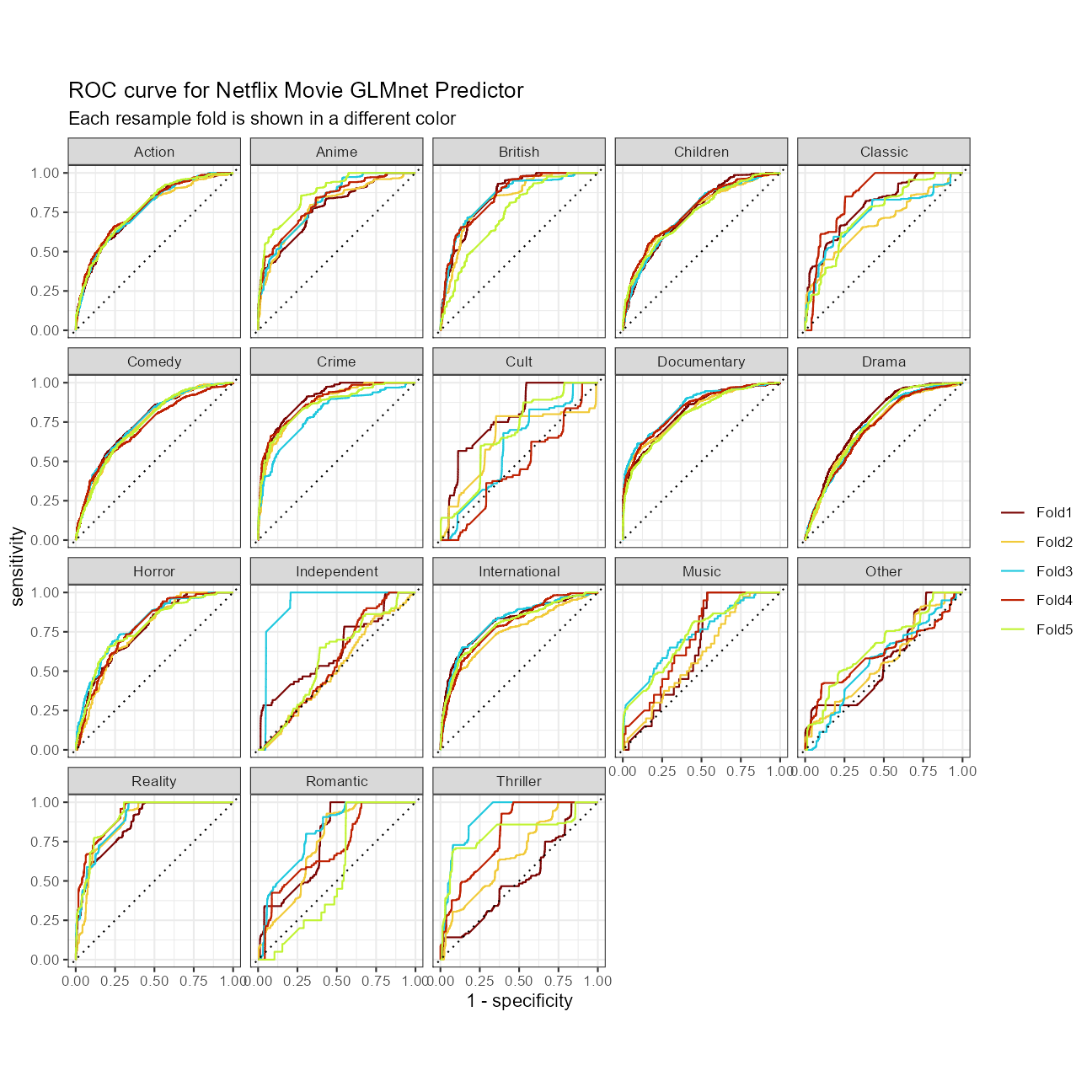

We can look at ROC curves by genre class for the set of 5 cross-validation folds of the best model:

cv_glmnet %>%

collect_predictions() %>%

group_by(id) %>%

roc_curve(truth = genre1, .pred_Action:.pred_Thriller) %>%

autoplot() +

labs(

color = NULL,

title = glue::glue("ROC curve for Netflix Movie GLMnet Predictor"),

subtitle = "Each resample fold is shown in a different color"

)

This model predicts Documentaries and International films very well. On the other extreme, Romantic Movies and “Other” are not predicted well in every cross validation fold.

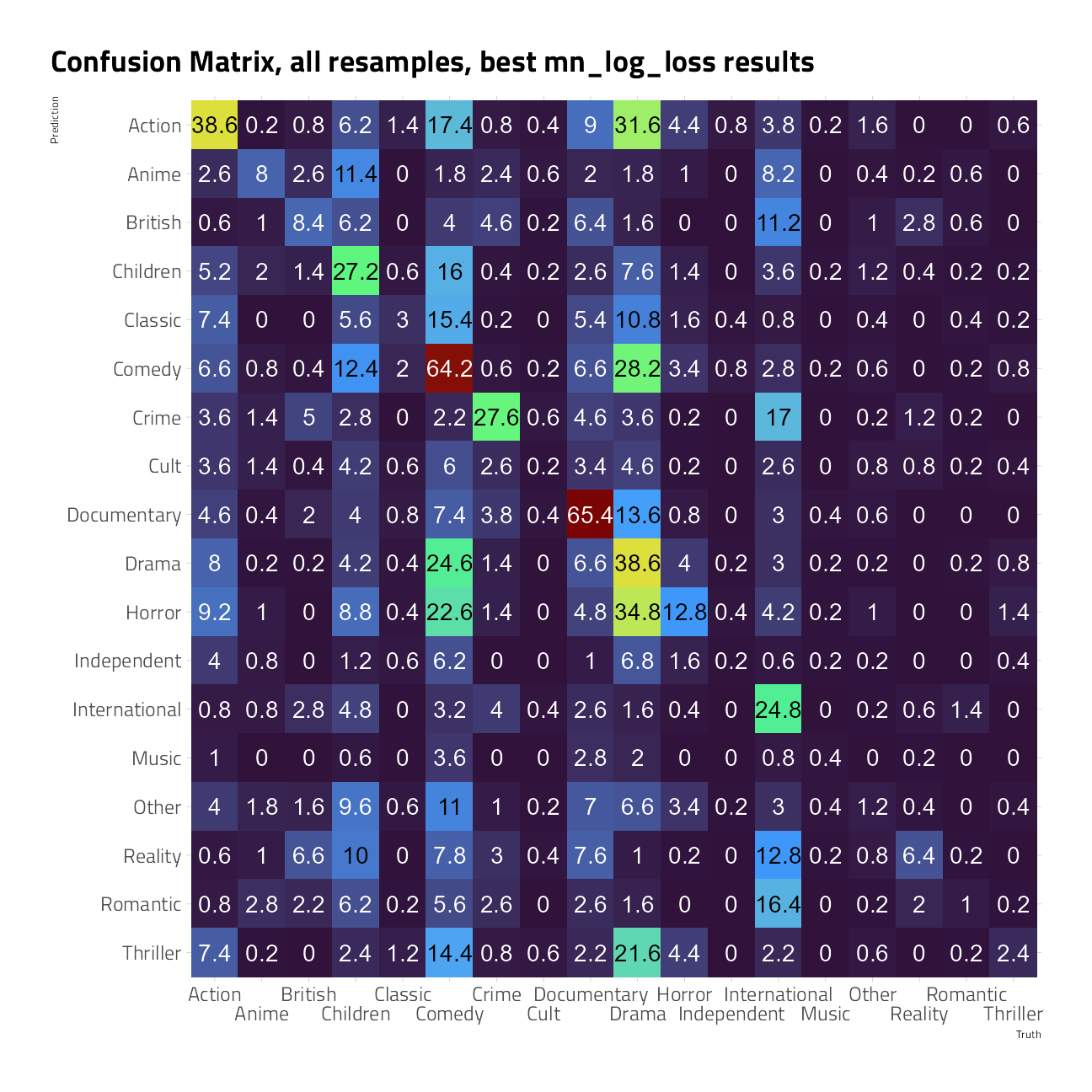

We can also create a confusion matrix from the resamples using conf_mat_resampled(), which computes a separate confusion matrix for each resample and then averages the cell counts.

show_best(cv_glmnet, metric = "mn_log_loss") %>%

select(-.estimator)show_best(cv_glmnet, metric = "roc_auc") %>%

select(-.estimator)cv_glmnet %>%

conf_mat_resampled(

tidy = FALSE,

parameters = select_best(cv_glmnet,

metric = "mn_log_loss"

)

) %>%

autoplot(type = "heatmap") +

labs(title = "Confusion Matrix, all resamples, best mn_log_loss results")

Generally, it is a good idea to evaluate the models over multiple metrics so that different aspects of the model fit are taken into account. Also, it often makes sense to choose a slightly suboptimal parameter combination that is associated with a simpler model. For this model, simplicity corresponds to larger penalty values.

best_results <- cv_glmnet %>%

select_best(metric = "mn_log_loss")

test_results <- glm_wf %>%

finalize_workflow(best_results) %>%

last_fit(split = netflix_split)The test results show:

collect_metrics(test_results)This is a good test result, as the model run on unseen data delivers performance comparable to the training figures, so does not over-fit. Even so, I’d like to explore other machine learning engines, including deep learning, to improve classificaiton accuracy.

sessionInfo()R version 4.1.1 (2021-08-10)

Platform: x86_64-w64-mingw32/x64 (64-bit)

Running under: Windows 10 x64 (build 22000)

Matrix products: default

locale:

[1] LC_COLLATE=English_United States.1252

[2] LC_CTYPE=English_United States.1252

[3] LC_MONETARY=English_United States.1252

[4] LC_NUMERIC=C

[5] LC_TIME=English_United States.1252

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] glmnet_4.1-2 Matrix_1.3-4 vctrs_0.3.8

[4] rlang_0.4.11 text2vec_0.6 SnowballC_0.7.0

[7] stopwords_2.2 stacks_0.2.1 tidyposterior_0.1.0

[10] finetune_0.1.0 discrim_0.1.3 textrecipes_0.4.1

[13] themis_0.1.4 yardstick_0.0.8 workflowsets_0.1.0

[16] workflows_0.2.3 tune_0.1.6 rsample_0.1.0

[19] recipes_0.1.17 parsnip_0.1.7.900 modeldata_0.1.1

[22] infer_1.0.0 dials_0.0.10 scales_1.1.1

[25] broom_0.7.9 tidymodels_0.1.4 tidytext_0.3.2

[28] hrbrthemes_0.8.0 lubridate_1.7.10 forcats_0.5.1

[31] stringr_1.4.0 dplyr_1.0.7 purrr_0.3.4

[34] readr_2.0.2 tidyr_1.1.4 tibble_3.1.4

[37] ggplot2_3.3.5 tidyverse_1.3.1 workflowr_1.6.2

loaded via a namespace (and not attached):

[1] rappdirs_0.3.3 R.methodsS3_1.8.1 ragg_1.1.3

[4] bit64_4.0.5 knitr_1.36 dygraphs_1.1.1.6

[7] R.utils_2.11.0 styler_1.6.2 data.table_1.14.2

[10] rpart_4.1-15 inline_0.3.19 hardhat_0.1.6

[13] doParallel_1.0.16 generics_0.1.0 GPfit_1.0-8

[16] RhpcBLASctl_0.21-247 callr_3.7.0 usethis_2.0.1

[19] RANN_2.6.1 future_1.22.1 conflicted_1.0.4

[22] bit_4.0.4 tzdb_0.1.2 tokenizers_0.2.1

[25] xml2_1.3.2 httpuv_1.6.3 StanHeaders_2.21.0-7

[28] assertthat_0.2.1 viridis_0.6.1 gower_0.2.2

[31] xfun_0.26 ggdist_3.0.0 hms_1.1.1

[34] jquerylib_0.1.4 bayesplot_1.8.1 evaluate_0.14

[37] promises_1.2.0.1 fansi_0.5.0 dbplyr_2.1.1

[40] readxl_1.3.1 igraph_1.2.6 DBI_1.1.1

[43] htmlwidgets_1.5.4 stats4_4.1.1 rsparse_0.4.0

[46] ellipsis_0.3.2 crosstalk_1.1.1 selectr_0.4-2

[49] backports_1.2.1 V8_3.4.2 markdown_1.1

[52] prismatic_1.0.0 textdata_0.4.1 RcppParallel_5.1.4

[55] here_1.0.1 cachem_1.0.6 withr_2.4.2

[58] vroom_1.5.5 checkmate_2.0.0 xts_0.12.1

[61] prettyunits_1.1.1 crayon_1.4.1 labeling_0.4.2

[64] pkgconfig_2.0.3 nlme_3.1-152 nnet_7.3-16

[67] globals_0.14.0 lifecycle_1.0.1 miniUI_0.1.1.1

[70] skimr_2.1.3 colourpicker_1.1.1 extrafontdb_1.0

[73] unbalanced_2.0 modelr_0.1.8 distributional_0.2.2

[76] cellranger_1.1.0 rprojroot_2.0.2 matrixStats_0.61.0

[79] loo_2.4.1 boot_1.3-28 zoo_1.8-9

[82] reprex_2.0.1 base64enc_0.1-3 whisker_0.4

[85] ggridges_0.5.3 processx_3.5.2 viridisLite_0.4.0

[88] float_0.2-6 ROSE_0.0-4 R.oo_1.24.0

[91] pROC_1.18.0 mlr_2.19.0 shape_1.4.6

[94] parallelly_1.28.1 R.cache_0.15.0 shinystan_2.5.0

[97] magrittr_2.0.1 ParamHelpers_1.14 plyr_1.8.6

[100] threejs_0.3.3 compiler_4.1.1 rstantools_2.1.1

[103] lme4_1.1-27.1 cli_3.0.1 DiceDesign_1.9

[106] listenv_0.8.0 janeaustenr_0.1.5 ps_1.6.0

[109] MASS_7.3-54 tidyselect_1.1.1 stringi_1.7.5

[112] textshaping_0.3.5 butcher_0.1.5 highr_0.9

[115] yaml_2.2.1 grid_4.1.1 sass_0.4.0

[118] fastmatch_1.1-3 tools_4.1.1 future.apply_1.8.1

[121] parallel_4.1.1 rstudioapi_0.13 foreach_1.5.1

[124] git2r_0.28.0 gridExtra_2.3 prodlim_2019.11.13

[127] farver_2.1.0 digest_0.6.28 FNN_1.1.3

[130] shiny_1.7.1 lava_1.6.10 Rcpp_1.0.7

[133] later_1.3.0 httr_1.4.2 gdtools_0.2.3

[136] rsconnect_0.8.24 colorspace_2.0-2 rvest_1.0.1

[139] fs_1.5.0 lgr_0.4.3 splines_4.1.1

[142] rematch2_2.1.2 shinythemes_1.2.0 systemfonts_1.0.2

[145] xtable_1.8-4 rstanarm_2.21.1 jsonlite_1.7.2

[148] nloptr_1.2.2.2 BBmisc_1.11 timeDate_3043.102

[151] rstan_2.21.2 ipred_0.9-12 R6_2.5.1

[154] mlapi_0.1.0 lhs_1.1.3 pillar_1.6.3

[157] htmltools_0.5.2 mime_0.12 glue_1.4.2

[160] fastmap_1.1.0 minqa_1.2.4 DT_0.19

[163] class_7.3-19 codetools_0.2-18 pkgbuild_1.2.0

[166] furrr_0.2.3 utf8_1.2.2 lattice_0.20-44

[169] bslib_0.3.0 curl_4.3.2 tidytuesdayR_1.0.1

[172] gtools_3.9.2 shinyjs_2.0.0 Rttf2pt1_1.3.8

[175] survival_3.2-11 parallelMap_1.5.1 repr_1.1.3

[178] rmarkdown_2.11 munsell_0.5.0 iterators_1.0.13

[181] haven_2.4.3 reshape2_1.4.4 gtable_0.3.0

[184] extrafont_0.17