Comparison of linear regression methods in the four scenarios from Zou & Hastie (2005)

Peter Carbonetto, Gao Wang and Matthew Stephens

April 2, 2019

Last updated: 2019-04-09

Checks: 6 passed, 0 failed

Knit directory: dsc-linreg/analysis/

This reproducible R Markdown analysis was created with workflowr (version 1.2.0.9000). The Report tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

Great! Since the R Markdown file has been committed to the Git repository, you know the exact version of the code that produced these results.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproducibility it’s best to always run the code in an empty environment.

The command set.seed(1) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great! You are using Git for version control. Tracking code development and connecting the code version to the results is critical for reproducibility. The version displayed above was the version of the Git repository at the time these results were generated.

Note that you need to be careful to ensure that all relevant files for the analysis have been committed to Git prior to generating the results (you can use wflow_publish or wflow_git_commit). workflowr only checks the R Markdown file, but you know if there are other scripts or data files that it depends on. Below is the status of the Git repository when the results were generated:

Ignored files:

Ignored: .sos/

Ignored: analysis/.sos/

Ignored: dsc/.sos/

Ignored: dsc/linreg/

Note that any generated files, e.g. HTML, png, CSS, etc., are not included in this status report because it is ok for generated content to have uncommitted changes.

These are the previous versions of the R Markdown and HTML files. If you’ve configured a remote Git repository (see ?wflow_git_remote), click on the hyperlinks in the table below to view them.

| File | Version | Author | Date | Message |
|---|---|---|---|---|
| Rmd | b2c80eb | Peter Carbonetto | 2019-04-09 | wflow_publish("results_overview.Rmd") |
| html | 5a34c5e | Peter Carbonetto | 2019-04-09 | Re-built results_overview with new design of DSC; new results |
| Rmd | 2a86b01 | Peter Carbonetto | 2019-04-09 | wflow_publish("results_overview.Rmd") |
| Rmd | ada904c | Peter Carbonetto | 2019-04-09 | Fixed a couple more bugs in the fit modules. |
| Rmd | ba71a22 | Peter Carbonetto | 2019-04-09 | Added score modules to pipeline. |
| Rmd | 5004487 | Peter Carbonetto | 2019-04-09 | Edited preamble in results_overview.Rmd. |
| Rmd | 093d5e6 | Peter Carbonetto | 2019-04-09 | Some revisions to the text in the README and home page. |
| html | 58540be | Peter Carbonetto | 2019-04-09 | Added links and other content to home page. |
| Rmd | d4d0415 | Peter Carbonetto | 2019-04-09 | Re-organized some files, and moved usage instructions to workflowr page. |

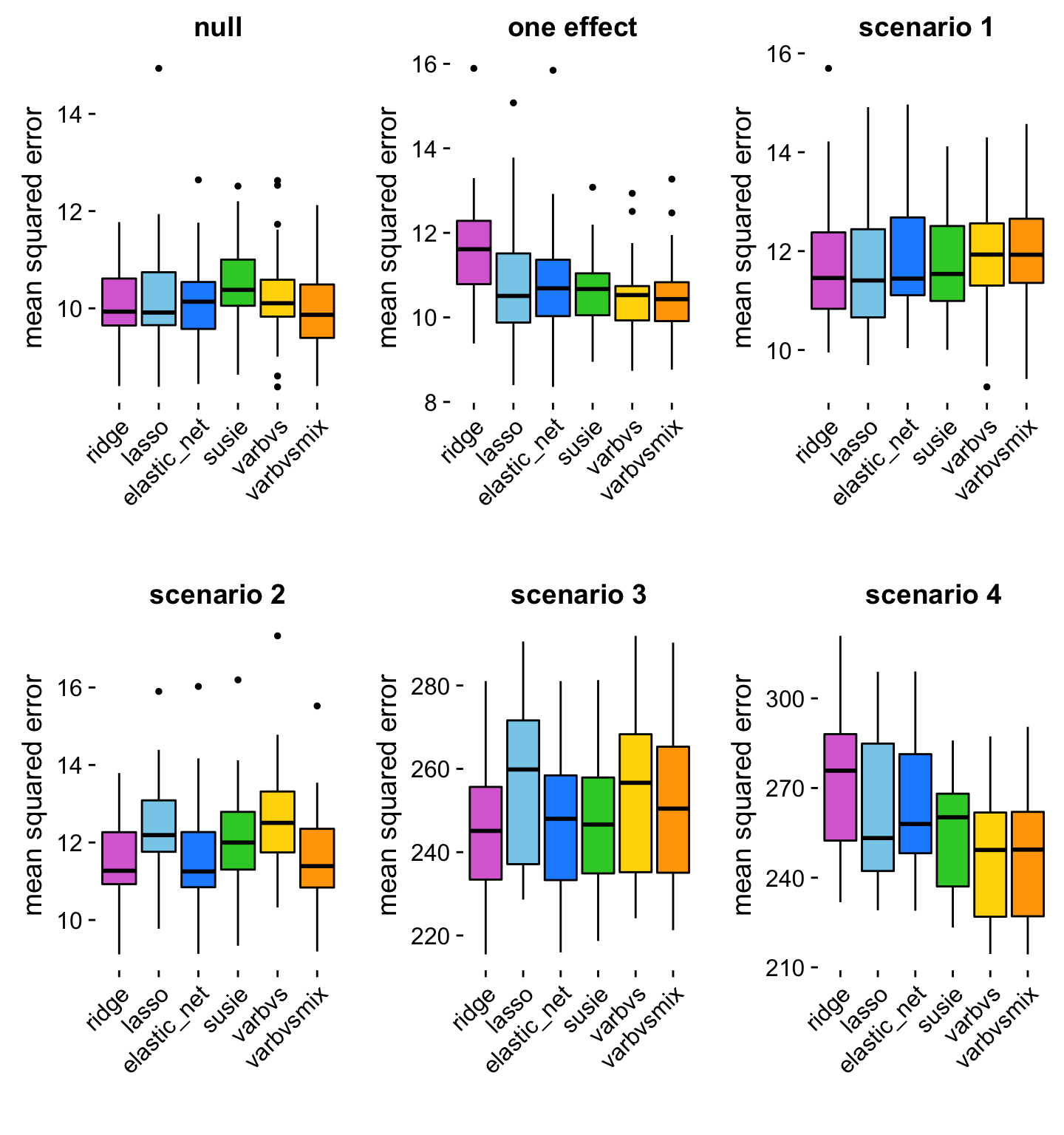

In this short analysis, we compare the prediction accuracy of several linear regression methods in the four simulation examples of Zou & Hastie (2005). We also include two additional scenarios, similar to Examples 1 and 2 from Zou & Hastie (2005): a “null” scenario, in which the predictors have no effect on the outcome; and a “one effect” scenario, in which only one of the predictors affects the outcome.
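To make the simulation designs more concrete, here is a minimal sketch of the data-generating process of Zou & Hastie's Examples 1 and 2, written from the published description: 8 predictors with correlation 0.5^|i−j| between predictors i and j, noise standard deviation σ = 3, 20 training observations, and coefficients β = (3, 1.5, 0, 0, 2, 0, 0, 0) in Example 1 or β_j = 0.85 for all j in Example 2. The helper `sim_zh_ex12` is hypothetical and is not the DSC “simulate” module used in this analysis; it also omits the validation and test sets from the paper.

```r
# Sketch of the Zou & Hastie (2005) Examples 1 and 2 design, written from the
# published description. Hypothetical helper: NOT the DSC "simulate" module.
sim_zh_ex12 <- function (n = 20, beta = c(3,1.5,0,0,2,0,0,0), sigma = 3) {
  p <- length(beta)

  # Correlation between predictors i and j is 0.5^|i - j|.
  S <- 0.5^abs(outer(1:p,1:p,"-"))

  # If Z has i.i.d. N(0,1) entries and S = R'R (R is the upper-triangular
  # Cholesky factor), then each row of Z %*% R is drawn from N(0, S).
  R <- chol(S)
  X <- matrix(rnorm(n*p),n,p) %*% R

  # Linear model with i.i.d. normal noise of standard deviation sigma.
  y <- drop(X %*% beta + sigma*rnorm(n))
  list(X = X,y = y,beta = beta)
}

set.seed(1)
ex1 <- sim_zh_ex12()                     # Example 1: sparse effects
ex2 <- sim_zh_ex12(beta = rep(0.85,8))   # Example 2: all effects equal
dim(ex1$X)
```

Setting `beta = rep(0,8)` would give a null-like setting in the same spirit as the “null” scenario, but this is an assumption about how such a scenario could be built, not a description of the DSC.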

The six methods compared are:

- ridge regression;
- the Lasso;
- the Elastic Net;
- “Sum of Single Effects” (SuSiE) regression, described here;
- variational inference for Bayesian variable selection, or “varbvs”, described here; and
- “varbvsmix”, an elaboration of varbvs that replaces the single normal prior with a mixture-of-normals.
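For reference, the three penalized methods can be written as penalized least-squares problems. The Elastic Net line below is the “naive” criterion from Zou & Hastie (2005); their Elastic Net estimate rescales the naive solution by (1 + λ₂), and software such as glmnet uses a different parameterization with a mixing parameter α. Which implementation and tuning procedure the DSC fit modules use is not described in this section.

```latex
\begin{aligned}
\text{ridge:}\quad & \hat{\beta} = \arg\min_{\beta}\; \|y - X\beta\|_2^2 + \lambda \|\beta\|_2^2 \\
\text{Lasso:}\quad & \hat{\beta} = \arg\min_{\beta}\; \|y - X\beta\|_2^2 + \lambda \|\beta\|_1 \\
\text{Elastic Net (naive):}\quad & \hat{\beta} = \arg\min_{\beta}\; \|y - X\beta\|_2^2 + \lambda_2 \|\beta\|_2^2 + \lambda_1 \|\beta\|_1
\end{aligned}
```

The ℓ₁ penalty sets some coefficients exactly to zero, which is why the Lasso and Elastic Net favour sparse fits, whereas the ℓ₂ penalty alone only shrinks coefficients toward zero.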

Load packages

Load a few packages and custom functions used in the analysis below.

library(dscrutils)
library(ggplot2)
library(cowplot)
source("../code/plots.R")

Import DSC results

Here we use function “dscquery” from the dscrutils package to extract the DSC results we are interested in: the mean squared error in the predictions from each method in each simulation scenario. After this call, the “dsc” data frame should contain results for 720 pipelines (6 methods times 6 scenarios times 20 data sets simulated in each scenario).
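The pipeline count above is just the size of a full factorial design. The sketch below builds a placeholder grid with the same layout (the `methods` vector is taken from the code below; the labels for Scenarios 1 to 4 are hypothetical) and checks that every method/scenario pair appears 20 times. An analogous cross-tabulation on the real “dsc” data frame should show the same pattern, although there Scenarios 1 to 4 are distinguished by the `simulate.scenario` column.

```r
# Placeholder grid with the same layout as the DSC results; contains no results.
methods   <- c("ridge","lasso","elastic_net","susie","varbvs","varbvsmix")
scenarios <- c("null_effects","one_effect",paste0("scenario_",1:4))  # hypothetical labels
grid <- expand.grid(fit       = factor(methods,methods),
                    scenario  = factor(scenarios,scenarios),
                    replicate = 1:20)

# 6 methods x 6 scenarios x 20 simulated data sets per scenario.
nrow(grid)
# [1] 720

# Each method/scenario cell should contain 20 pipelines.
with(grid,table(fit,scenario))
```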

library(dscrutils)
methods <- c("ridge","lasso","elastic_net","susie","varbvs","varbvsmix")
dsc <- dscquery("../dsc/linreg",c("fit","mse.err"),"simulate.scenario",
                verbose = FALSE)
# Convert to factors; ordering the "fit" levels by the methods vector keeps
# the methods in a consistent order in the plots below.
dsc <- transform(dsc,
                 simulate = factor(simulate),
                 fit      = factor(fit,methods))
nrow(dsc)
# [1] 720

Note that you will need to run the DSC before querying the results; see here for instructions on running the DSC. If you did not run the DSC to generate these results, you can replace the dscquery call above with this line to load the pre-extracted results stored in a CSV file:

dsc <- read.csv("../output/linreg_mse.csv")

This is how the CSV file was created:

write.csv(dsc,"../output/linreg_mse.csv",row.names = FALSE,quote = FALSE)

Summarize and discuss simulation results

The boxplots below summarize the prediction errors in each of the simulations.

p1 <- mse.boxplot(subset(dsc,simulate == "null_effects")) + ggtitle("null")
p2 <- mse.boxplot(subset(dsc,simulate == "one_effect")) + ggtitle("one effect")
p3 <- mse.boxplot(subset(dsc,simulate.scenario == 1)) + ggtitle("scenario 1")
p4 <- mse.boxplot(subset(dsc,simulate.scenario == 2)) + ggtitle("scenario 2")
p5 <- mse.boxplot(subset(dsc,simulate.scenario == 3)) + ggtitle("scenario 3")
p6 <- mse.boxplot(subset(dsc,simulate.scenario == 4)) + ggtitle("scenario 4")
p <- plot_grid(p1,p2,p3,p4,p5,p6)
print(p)

Past versions of this figure:

| Version | Author | Date |
|---|---|---|
| 5a34c5e | Peter Carbonetto | 2019-04-09 |
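The boxplots are drawn by mse.boxplot, which is defined in ../code/plots.R and not reproduced here. For readers without that file, the following is a hypothetical stand-in built with ggplot2; the name `mse_boxplot_sketch` and its styling are assumptions, and it only relies on the `fit` and `mse.err` columns that the dscquery call extracts. It is demonstrated on random placeholder values, not on results from this analysis.

```r
library(ggplot2)

# Hypothetical stand-in for mse.boxplot() from ../code/plots.R; the real
# function may differ. Assumes a data frame with a factor column "fit"
# (the method) and a numeric column "mse.err" (the prediction error).
mse_boxplot_sketch <- function (dat) {
  ggplot(dat,aes(x = fit,y = mse.err)) +
    geom_boxplot(outlier.size = 1) +
    labs(x = "",y = "mean squared error") +
    theme_bw() +
    theme(axis.text.x = element_text(angle = 45,hjust = 1))
}

# Usage on placeholder data (random values, NOT results from this analysis).
set.seed(1)
methods <- c("ridge","lasso","elastic_net","susie","varbvs","varbvsmix")
mock    <- data.frame(fit     = factor(rep(methods,each = 20),methods),
                      mse.err = rexp(120))
p_mock  <- mse_boxplot_sketch(mock) + ggtitle("placeholder data")
print(p_mock)
```

Because `fit` is a factor whose levels follow the `methods` vector, the boxes appear in the same left-to-right order in every panel.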

Here are a few initial (i.e., imprecise) impressions from these plots.

- In most cases, the Elastic Net does as well as or better than the Lasso, which is what we would expect.
- Ridge regression actually achieves excellent accuracy in all cases except Scenario 4. Ridge regression is expected to do less well in Scenario 4 because the majority of the true coefficients are zero, so a sparse model would be favoured.
- In Scenario 4, where the predictors are correlated in a structured way and the effects are sparse, varbvs and varbvsmix perform better than the other methods.
- varbvsmix yields competitive predictions in all four scenarios.
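One way to make impressions like these more precise is to tabulate the median prediction error of each method within each scenario, relative to the best method in that scenario. The sketch below does this with base R's `aggregate`, `xtabs` and `sweep` on a placeholder data frame whose MSE values are random draws, not results; applying it to the real “dsc” data frame would require substituting the appropriate scenario column(s), which is an assumption here.

```r
# Placeholder stand-in for "dsc": the MSE values are random draws, NOT results.
set.seed(1)
methods <- c("ridge","lasso","elastic_net","susie","varbvs","varbvsmix")
mock <- expand.grid(fit       = factor(methods,methods),
                    scenario  = c("null_effects","one_effect"),
                    replicate = 1:20)
mock$mse.err <- rexp(nrow(mock))

# Median MSE of each method within each scenario (one row per combination).
med <- aggregate(mse.err ~ fit + scenario,data = mock,FUN = median)

# Arrange the medians as a methods x scenarios table.
tab <- xtabs(mse.err ~ fit + scenario,data = med)

# Divide each column by its smallest entry, so the best method in each
# scenario scores 1 and the others show their relative excess error.
rel <- sweep(tab,2,apply(tab,2,min),"/")
round(rel,2)
```

Using the median rather than the mean keeps a few badly fitted data sets from dominating the comparison, in the same way the boxplots emphasize the middle of each distribution.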

Session information

This is the version of R and the packages that were used to generate these results.

sessionInfo()
# R version 3.4.3 (2017-11-30)
# Platform: x86_64-apple-darwin15.6.0 (64-bit)
# Running under: macOS High Sierra 10.13.6
#
# Matrix products: default
# BLAS: /Library/Frameworks/R.framework/Versions/3.4/Resources/lib/libRblas.0.dylib
# LAPACK: /Library/Frameworks/R.framework/Versions/3.4/Resources/lib/libRlapack.dylib
#
# locale:
# [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8
#
# attached base packages:
# [1] stats graphics grDevices utils datasets methods base
#
# other attached packages:
# [1] cowplot_0.9.4 ggplot2_3.1.0 dscrutils_0.3.5
#
# loaded via a namespace (and not attached):
# [1] Rcpp_1.0.0 knitr_1.20 whisker_0.3-2
# [4] magrittr_1.5 workflowr_1.2.0.9000 tidyselect_0.2.5
# [7] munsell_0.4.3 colorspace_1.4-0 R6_2.2.2
# [10] rlang_0.3.1 dplyr_0.8.0.1 stringr_1.3.1
# [13] plyr_1.8.4 tools_3.4.3 grid_3.4.3
# [16] gtable_0.2.0 withr_2.1.2 git2r_0.23.3
# [19] htmltools_0.3.6 assertthat_0.2.0 yaml_2.2.0
# [22] lazyeval_0.2.1 rprojroot_1.3-2 digest_0.6.17
# [25] tibble_2.1.1 crayon_1.3.4 purrr_0.2.5
# [28] fs_1.2.6 glue_1.3.0 evaluate_0.11
# [31] rmarkdown_1.10 labeling_0.3 stringi_1.2.4
# [34] pillar_1.3.1 compiler_3.4.3 scales_0.5.0
# [37] backports_1.1.2 pkgconfig_2.0.2
