Ethical and practical considerations when collecting digital trace data

Summer Institute in Computational Social Science

Generating new data with LLMs

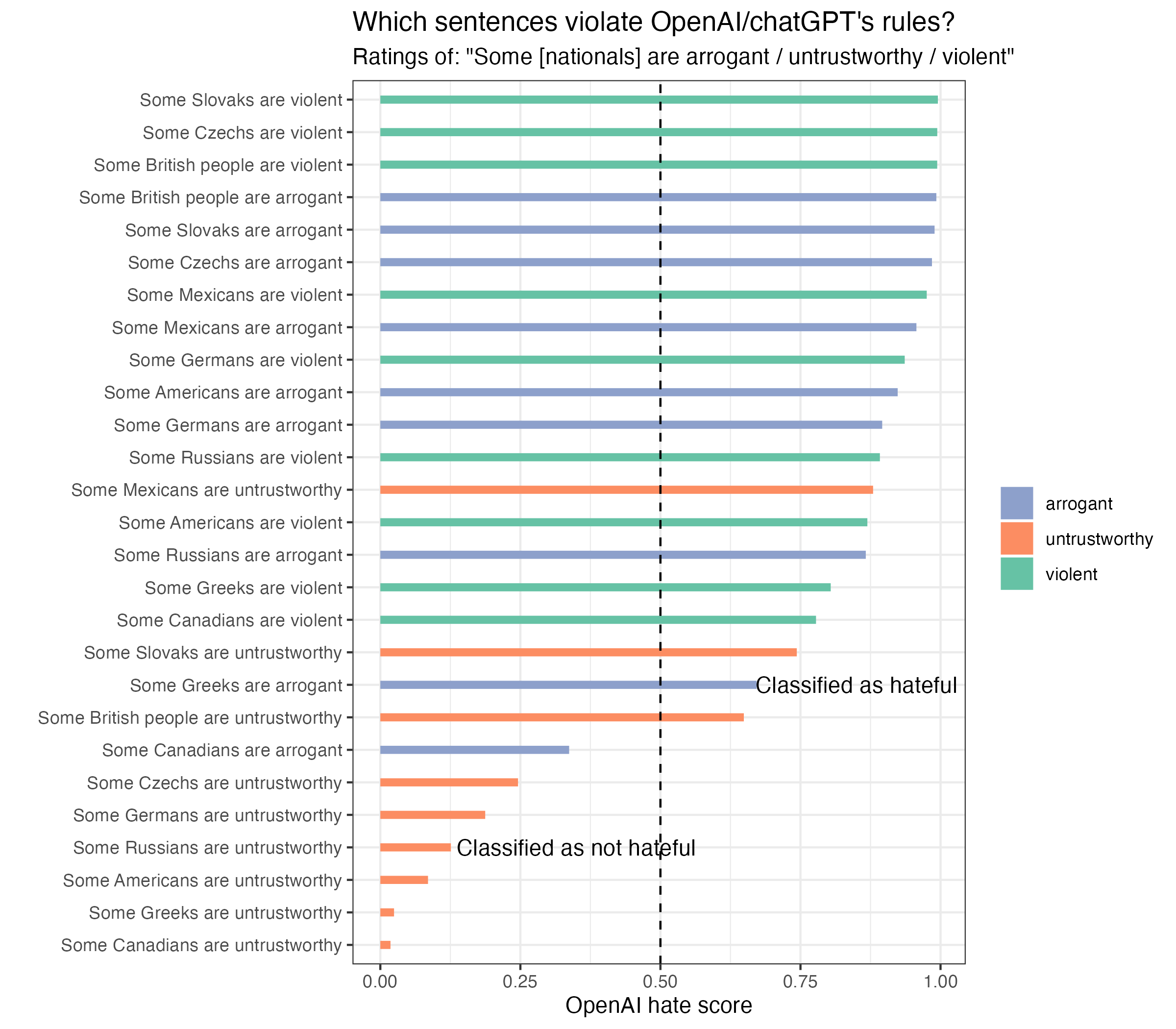

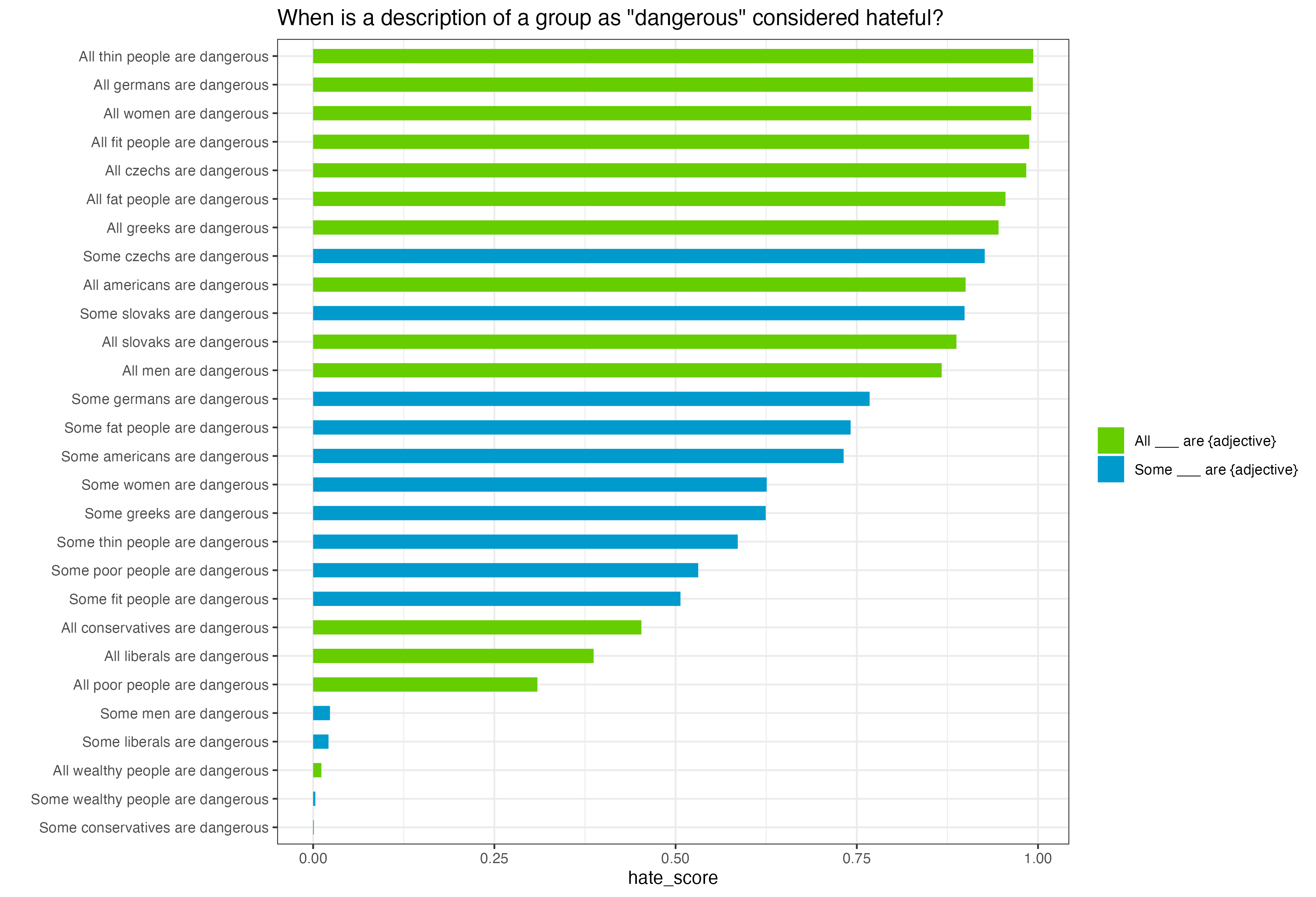

We know that training data is tainted with biases

- ChatGPT will not answer all questions

- OpenAI content filter (accessible via the moderation endpoint) tries to catch inappropriate content

- Can it be unbiased?

- Let’s do an audit (research in progress)

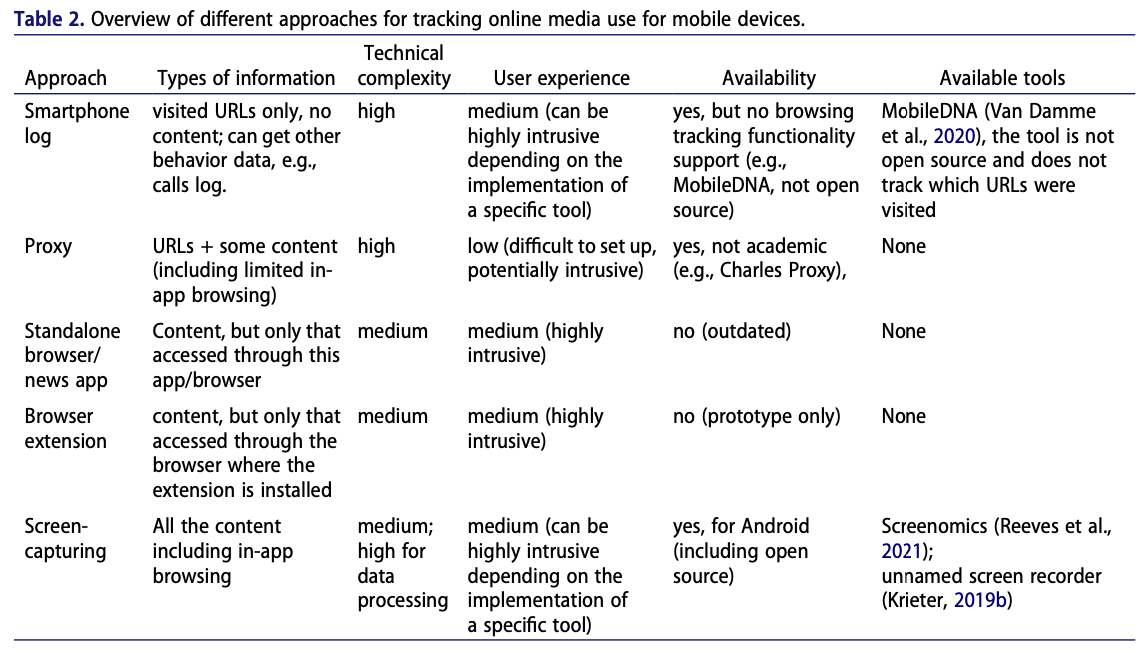
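A minimal sketch of how an audit could query the moderation endpoint mentioned above. It uses only the Python standard library. The URL and the response fields (`results`, `categories`) reflect the publicly documented API as we understand it and should be checked against the current reference; the helper names are hypothetical, and this is not the code used in the audit.

```python
import json
import urllib.request

# Assumed endpoint URL; verify against the current OpenAI API reference.
MODERATION_URL = "https://api.openai.com/v1/moderations"


def build_moderation_request(text, api_key):
    """Build (but do not send) a POST request for the moderation endpoint."""
    payload = json.dumps({"input": text}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(
        MODERATION_URL, data=payload, headers=headers, method="POST"
    )


def flagged_categories(response_body):
    """Return the sorted names of categories flagged in the first result.

    Assumes a body shaped like
    {"results": [{"flagged": bool, "categories": {name: bool, ...}}]}.
    """
    result = response_body["results"][0]
    return sorted(name for name, hit in result["categories"].items() if hit)


def moderate(text, api_key):
    """Send the text to the endpoint and return the parsed JSON body."""
    request = build_moderation_request(text, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

For an audit, one would call `moderate` on matched prompts (for example, identical sentences that differ only in the group mentioned) and compare the output of `flagged_categories` across them.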

Practicalities

Source: Christner, C., Urman, A., Adam, S., & Maier, M. (2022). Automated tracking approaches for studying online media use: A critical review and recommendations. Communication Methods and Measures, 16(2), 79-95.

Tracking “reception” of information

- In social science, we often care about whether subjects receive a treatment.

- Is sending a text message (e.g. with a reminder to pay taxes) different from mailing a letter?

- Can we measure exposure to tweets or social media posts?

- If you can reconstruct respondents’ timelines, it’s better than self-reported data.

- But you only measure potential exposure.
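Reconstructing a timeline can be sketched as follows, assuming we know which accounts a respondent follows and have timestamped posts from those accounts. The data structures and the function name are hypothetical. The result is the set of posts the respondent could have seen, not the posts they actually saw.

```python
from datetime import datetime


def potential_exposures(follows, posts, respondent, start, end):
    """Return posts by followed accounts created within [start, end].

    follows: dict mapping respondent id -> set of followed account ids
    posts:   list of dicts with keys "id", "author", "created_at"
    This measures potential exposure only: it cannot tell whether the
    respondent was online or scrolled past the post.
    """
    followed = follows.get(respondent, set())
    return [
        post
        for post in posts
        if post["author"] in followed and start <= post["created_at"] <= end
    ]


# Toy data, invented for illustration.
follows = {"r1": {"a", "b"}}
posts = [
    {"id": 1, "author": "a", "created_at": datetime(2023, 6, 1, 12)},
    {"id": 2, "author": "c", "created_at": datetime(2023, 6, 1, 13)},
    {"id": 3, "author": "b", "created_at": datetime(2023, 6, 5, 9)},
]
seen = potential_exposures(
    follows, posts, "r1", datetime(2023, 6, 1), datetime(2023, 6, 2)
)
```

Here only post 1 qualifies: post 2 comes from an account the respondent does not follow, and post 3 falls outside the window.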

Deactivation studies are a relatively recent innovation

Randomized assignment to LESS exposure

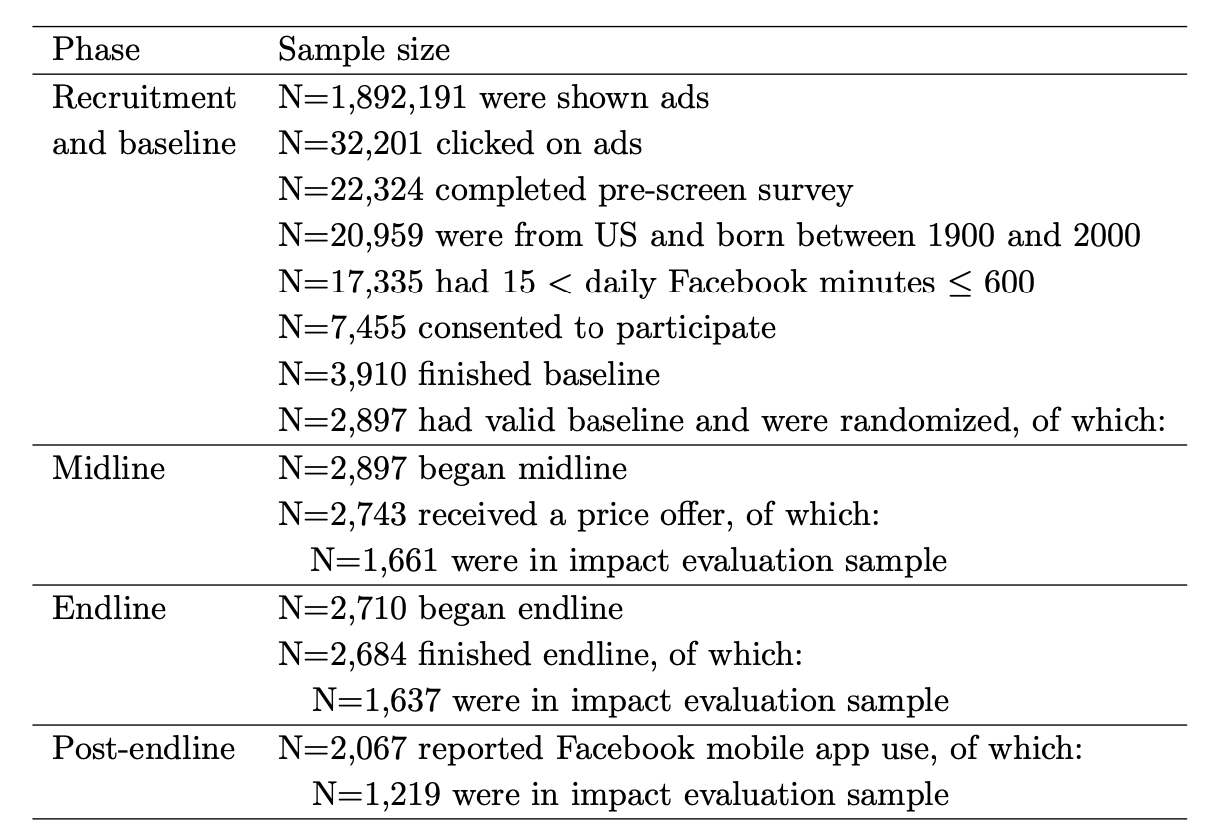

- Allcott et al. 2020. The Welfare Effects of Social Media

- Asimovic et al. 2021. Testing the effects of Facebook usage in an ethnically polarized setting

- Ventura et al. 2023. WhatsApp Increases Exposure to False Rumors but has Limited Effects on Beliefs and Polarization

Allcott et al. 2020.

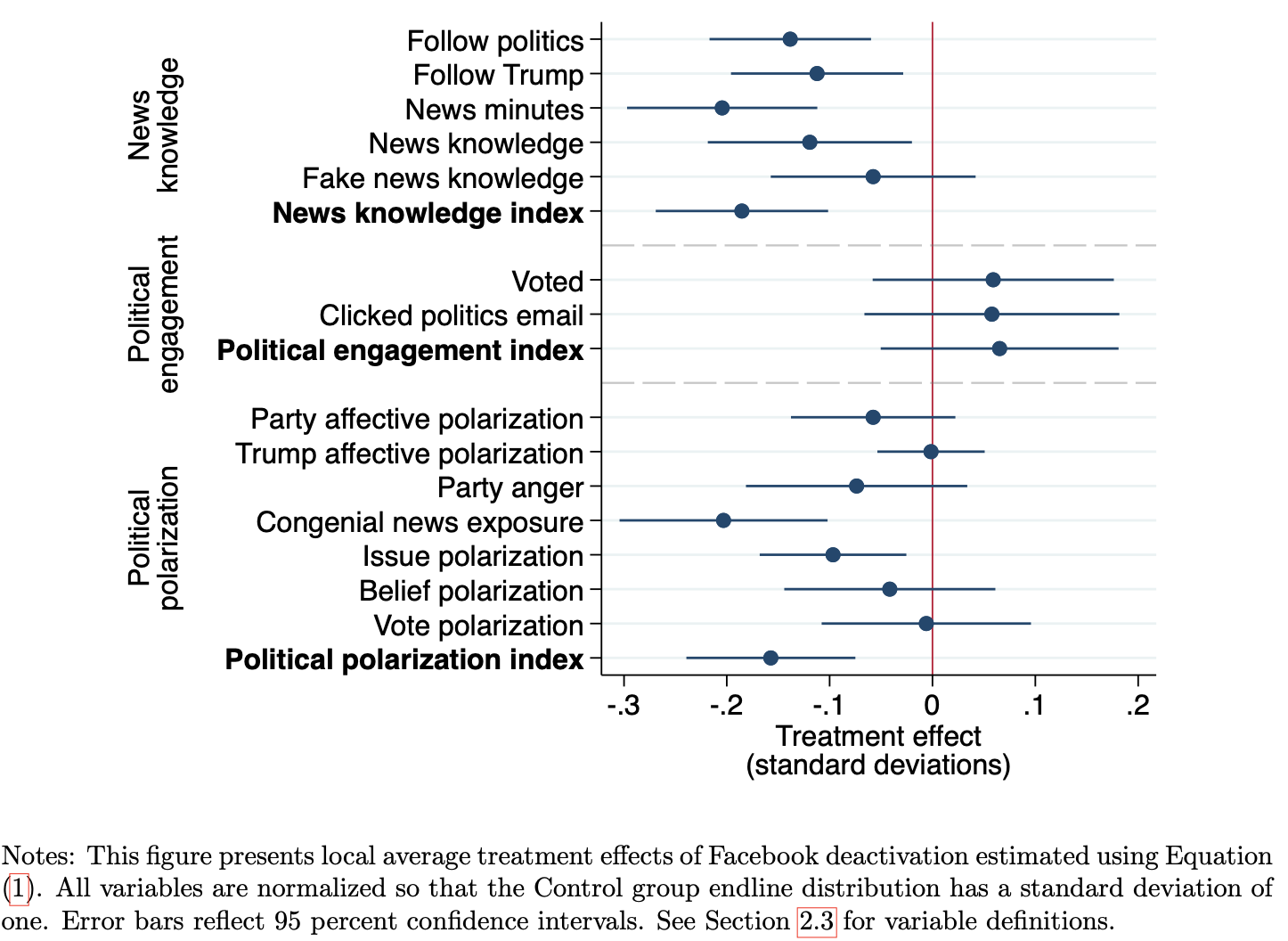

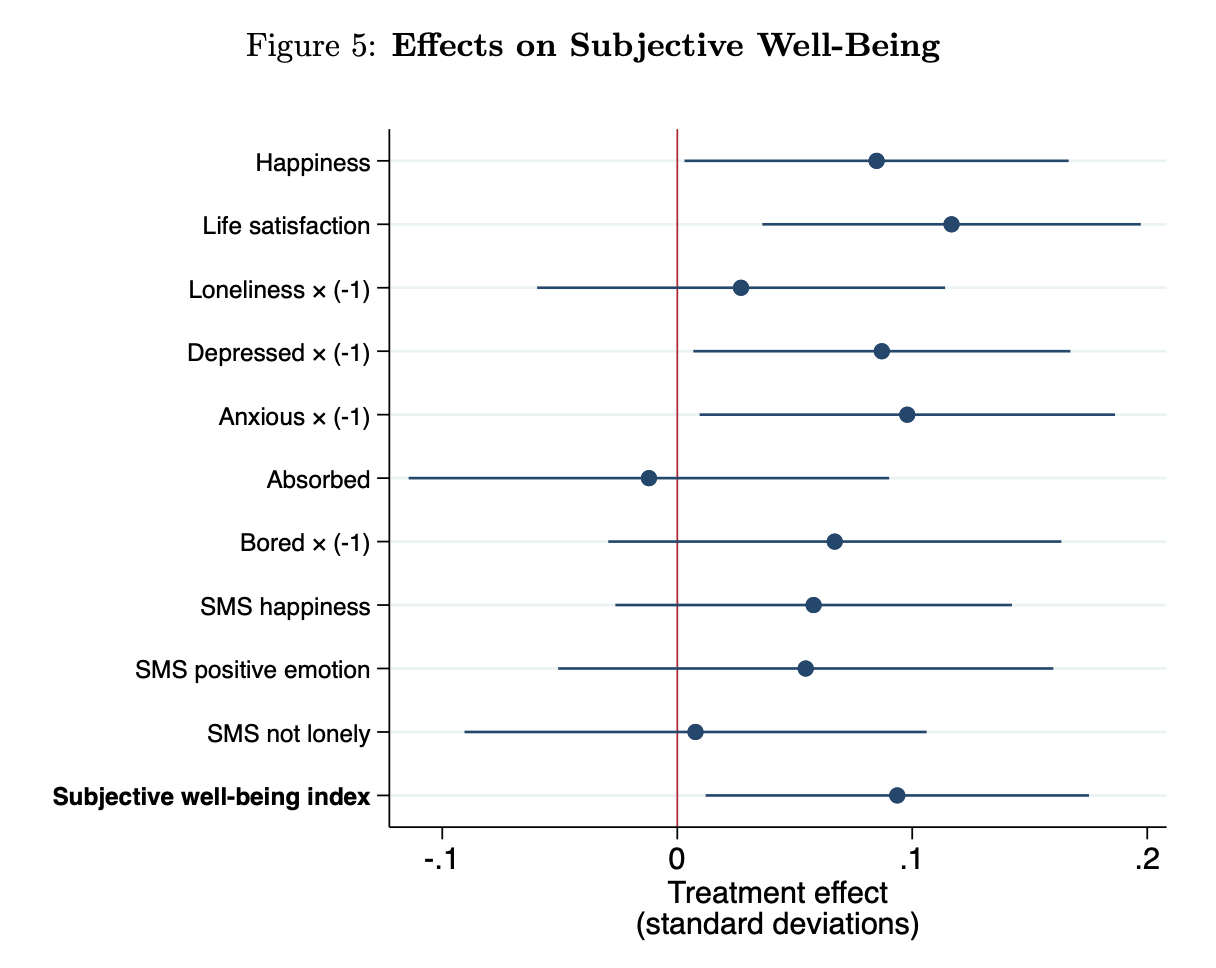

In a randomized experiment, we find that deactivating Facebook for the four weeks before the 2018 US midterm election (i) reduced online activity, while increasing offline activities such as watching TV alone and socializing with family and friends; (ii) reduced both factual news knowledge and political polarization; (iii) increased subjective well-being; and (iv) caused a large persistent reduction in post-experiment Facebook use.

Deactivation reduced post-experiment valuations of Facebook, suggesting that traditional metrics may overstate consumer surplus.

Ventura et al. 2023

- Multimedia-Constrained WhatsApp experience

- “the changes in bytes on WhatsApp storage as our measure of compliance”

- Incentivized participants not to access any media, image, or video received on WhatsApp

- Videos and images are the primary formats in which misinformation spreads on WhatsApp in Brazil and in other Global South countries

- Perfect compliance not required

- This makes sense [e.g. voice messages from friends]

- “enrolling subjects in a complete deactivation would be infeasible”
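A storage-based compliance check could look like the sketch below. The tolerance and the decision rule are assumptions made for illustration; the slides do not state the rule the authors applied, only that perfect compliance was not required.

```python
def storage_change(before_bytes, after_bytes):
    """Change in WhatsApp media storage over the treatment period."""
    return after_bytes - before_bytes


def is_compliant(before_bytes, after_bytes, tolerance_bytes):
    """Treat a participant as compliant if storage grew by at most the tolerance.

    tolerance_bytes is a hypothetical parameter: a small allowance for
    media that does not count as a violation, such as short voice messages.
    """
    return storage_change(before_bytes, after_bytes) <= tolerance_bytes


# Toy readings in bytes, invented for illustration.
readings = {
    "p1": (500_000_000, 500_400_000),  # small growth
    "p2": (300_000_000, 380_000_000),  # large growth
}
tolerance = 5_000_000  # hypothetical 5 MB allowance
compliance = {
    pid: is_compliant(before, after, tolerance)
    for pid, (before, after) in readings.items()
}
```

A nonzero tolerance reflects the point above that some media use (voice messages from friends) is expected even among participants who follow the instructions.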

Expectations

- H1: deactivated users will report lower levels of exposure to misinformation compared to their counterparts using WhatsApp (mostly) as usual [a 3-day break]

- H2: higher capacity to correctly identify false rumors as false and true news as true

- H3: reduction in polarization

Ventura et al. 2023

Main results

Although the deactivation significantly reduced exposure to false and, to a lesser extent, true news, we find no statistically significant effect on belief accuracy for either true or false news [or on polarization]

Authors conclude: WhatsApp is an important vector through which voters receive misinformation

Ventura et al. 2023

Practical aspects

- Many invitees agreed to participate

- Initially, “97% of those asked agreed to join our study”

- This would suggest incentives were compelling

- We don’t do this often enough

- About 30 USD + prizes

- Attrition among the invited: substantial but not differential
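Whether attrition is differential can be checked by comparing dropout rates across treatment arms. Below is a minimal sketch using a two-proportion z-test with the standard library only. The counts are invented, and this is not necessarily the test the authors ran.

```python
import math


def two_proportion_ztest(dropped_a, n_a, dropped_b, n_b):
    """Two-sided z-test for equal dropout rates in arms A and B.

    Returns (difference in rates, z statistic, p-value).
    """
    rate_a = dropped_a / n_a
    rate_b = dropped_b / n_b
    pooled = (dropped_a + dropped_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("dropout rate is 0 or 1 in both arms; test undefined")
    z = (rate_a - rate_b) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a - rate_b, z, p_value


# Invented counts: 120 of 400 dropped in treatment, 115 of 400 in control.
diff, z, p = two_proportion_ztest(120, 400, 115, 400)
```

Substantial attrition (here about 30% in each arm) reduces power, but it biases the treatment-control comparison mainly when the rates, or the kinds of people who drop out, differ between arms.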

Ethics

Only have time for high-level discussion

Ethics & data science

Ethics & machine learning

Approaches

- Rules-based (academia)

- Ad-hoc (industry)

- Principles-based (in the future?)

Principles

1. Respect for persons

   - People’s autonomy should be respected

2. Beneficence (define and measure costs and benefits)

   - Evaluate whether benefits outweigh costs

3. Fairness (distribution of costs and benefits)

4. Respect for law & public interest

   - Compliance with laws, terms of service

   - Transparency about methods

Trade-offs seem inevitable

Sharing replication materials creates tension between #4 (transparency) and #1 & #2

Our intuitions can be wrong

Some intuitive ideas are wrong

- “We’ll just anonymize the data”

- “We can tell which data is sensitive” and not share certain variables

All data are potentially identifiable & sensitive

There are many examples of re-identified individuals…
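One way to see why removing names is not enough: count how many records are unique on a handful of seemingly harmless variables (quasi-identifiers). The sketch below uses invented records and hypothetical column names; a unique combination can be linked to an outside dataset that contains the same variables alongside a name.

```python
from collections import Counter


def _keys(records, quasi_identifiers):
    """Tuple of quasi-identifier values for each record."""
    return [tuple(record[q] for q in quasi_identifiers) for record in records]


def k_anonymity(records, quasi_identifiers):
    """Size of the smallest group sharing the same quasi-identifier values."""
    return min(Counter(_keys(records, quasi_identifiers)).values())


def unique_share(records, quasi_identifiers):
    """Share of records whose quasi-identifier combination is unique."""
    keys = _keys(records, quasi_identifiers)
    counts = Counter(keys)
    return sum(1 for key in keys if counts[key] == 1) / len(keys)


# Invented "anonymized" records: no names, but still identifying.
records = [
    {"zip": "27708", "birth_year": 1985, "gender": "F"},
    {"zip": "27708", "birth_year": 1985, "gender": "F"},
    {"zip": "27708", "birth_year": 1990, "gender": "M"},
    {"zip": "10027", "birth_year": 1990, "gender": "M"},
]
share = unique_share(records, ["zip", "birth_year", "gender"])
```

In this toy data half of the records are unique on the three variables combined, even though no single variable identifies anyone. Dropping "sensitive" columns does not help if the remaining columns still single people out.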