Simulation of STR Pairs and Calculation of Likelihood Ratios

Tina Lasisi

2024-03-03 20:02:58

Last updated: 2024-03-03


Knit directory: PODFRIDGE/

This reproducible R Markdown analysis was created with workflowr (version 1.7.1).


The command set.seed(20230302) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.


The results on this page were generated with repository version 2596546.


These are the previous versions of the repository in which changes were made to the R Markdown (analysis/STR-simulation.Rmd) and HTML (docs/STR-simulation.html) files.

| File | Version | Author | Date | Message |
|---|---|---|---|---|
| Rmd | 2596546 | Tina Lasisi | 2024-03-03 | wflow_publish("analysis/*", republish = TRUE, all = TRUE, verbose = TRUE) |

Introduction

This document describes how to simulate Short Tandem Repeat (STR) pairs and calculate shared alleles, relatedness scores, and likelihood ratios across different populations. It aims to clarify the methods used in forensic genetics to estimate the degree of relationship between individuals from genetic markers.

Background

The following is adapted from the book Weight-of-Evidence for Forensic DNA Profiles.

The likelihood ratio for a single locus is:

\[ R=\kappa_0+\kappa_1 / R_X^p+\kappa_2 / R_X^u \]

where \(\kappa_0\), \(\kappa_1\), and \(\kappa_2\) are the probabilities of having 0, 1, or 2 alleles identical by descent (IBD) for a given relationship.

The \(R_X\) terms quantify the “surprisingness” of a particular pattern of allele sharing.

The \(R_X^p\) terms attached to \(\kappa_1\) are defined in the following table:

\[ \begin{aligned} &\text { Table 7.2 Single-locus LRs for paternity when } \mathcal{C}_M \text { is unavailable. }\\ &\begin{array}{llc} \hline c & Q & R_X \times\left(1+2 F_{S T}\right) \\ \hline \mathrm{AA} & \mathrm{AA} & 3 F_{S T}+\left(1-F_{S T}\right) p_A \\ \mathrm{AA} & \mathrm{AB} & 2\left(2 F_{S T}+\left(1-F_{S T}\right) p_A\right) \\ \mathrm{AB} & \mathrm{AA} & 2\left(2 F_{S T}+\left(1-F_{S T}\right) p_A\right) \\ \mathrm{AB} & \mathrm{AC} & 4\left(F_{S T}+\left(1-F_{S T}\right) p_A\right) \\ \mathrm{AB} & \mathrm{AB} & 4\left(F_{S T}+\left(1-F_{S T}\right) p_A\right)\left(F_{S T}+\left(1-F_{S T}\right) p_B\right) /\left(2 F_{S T}+\left(1-F_{S T}\right)\left(p_A+p_B\right)\right) \\ \hline \end{array} \end{aligned} \]

For our purposes we set \(F_{S T} = 0\), so the table reduces to:

\[ \begin{aligned} &\begin{array}{llc} \hline c & Q & R_X \\ \hline \mathrm{AA} & \mathrm{AA} & p_A \\ \mathrm{AA} & \mathrm{AB} & 2 p_A \\ \mathrm{AB} & \mathrm{AA} & 2p_A \\ \mathrm{AB} & \mathrm{AC} & 4p_A \\ \mathrm{AB} & \mathrm{AB} & 4 p_A p_B/(p_A+p_B) \\ \hline \end{array} \end{aligned} \]
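As a quick check of the last row, here is a worked example with illustrative frequencies \(p_A = 0.1\) and \(p_B = 0.2\) (assumed values, not taken from the CODIS data):

\[ R_X = \frac{4 p_A p_B}{p_A + p_B} = \frac{4 \times 0.1 \times 0.2}{0.1 + 0.2} = \frac{0.08}{0.3} \approx 0.267, \qquad \frac{\kappa_1}{R_X} = 3.75\,\kappa_1 \]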

If none of the alleles match, then \(\kappa_1 / R_X^p = 0\).

The \(R_X^u\) terms attached to \(\kappa_2\) are defined as follows.

If both alleles match and the genotype is homozygous, the single-locus match probability is given by equation 6.4 (p. 85), with \(\mathrm{CSP}=\mathcal{G}_Q=\mathrm{AA}\):

\[ \frac{\left(2 F_{S T}+\left(1-F_{S T}\right) p_A\right)\left(3 F_{S T}+\left(1-F_{S T}\right) p_A\right)}{\left(1+F_{S T}\right)\left(1+2 F_{S T}\right)} \]

With \(F_{S T} = 0\), this simplifies to:

\[ p_A^2 \]

If both alleles match and the genotype is heterozygous, the single-locus match probability is given by equation 6.5 (p. 85), with \(\mathrm{CSP}=\mathcal{G}_Q=\mathrm{AB}\):

\[ 2 \frac{\left(F_{S T}+\left(1-F_{S T}\right) p_A\right)\left(F_{S T}+\left(1-F_{S T}\right) p_B\right)}{\left(1+F_{S T}\right)\left(1+2 F_{S T}\right)} \]

With \(F_{S T} = 0\), this simplifies to:

\[ 2 p_A p_B \]

If the two genotypes do not match at both alleles, then \(\kappa_2 / R_X^u = 0\).
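The definitions above can be combined into a single function. The following is a minimal base R sketch, assuming \(F_{S T} = 0\); the function name single_locus_lr, its arguments, and the example allele frequencies are hypothetical and are not taken from the simulation script.

```r
# Sketch of the single-locus likelihood ratio R = k0 + k1 / R_X^p + k2 / R_X^u
# with F_ST = 0. Function and argument names are illustrative assumptions.
# g1, g2: character vectors of length 2 (the two alleles of each genotype)
# freqs:  named numeric vector of allele frequencies
# kappa:  numeric vector c(k0, k1, k2) for the tested relationship
single_locus_lr <- function(g1, g2, freqs, kappa) {
  shared <- intersect(g1, g2)  # distinct alleles present in both genotypes
  hom1 <- g1[1] == g1[2]
  hom2 <- g2[1] == g2[2]

  # 1 / R_X^p, following the F_ST = 0 table
  if (length(shared) == 0) {
    inv_rxp <- 0                           # no allele matches
  } else if (length(shared) == 2) {
    p <- freqs[shared]                     # AB vs AB
    inv_rxp <- sum(p) / (4 * prod(p))
  } else {
    p_a <- freqs[[shared]]
    if (hom1 && hom2) {
      inv_rxp <- 1 / p_a                   # AA vs AA
    } else if (hom1 || hom2) {
      inv_rxp <- 1 / (2 * p_a)             # AA vs AB, AB vs AA
    } else {
      inv_rxp <- 1 / (4 * p_a)             # AB vs AC
    }
  }

  # 1 / R_X^u: non-zero only when both alleles match
  if (identical(sort(g1), sort(g2))) {
    p <- freqs[unique(g1)]
    inv_rxu <- if (hom1) 1 / p[[1]]^2 else 1 / (2 * prod(p))
  } else {
    inv_rxu <- 0
  }

  kappa[[1]] + kappa[[2]] * inv_rxp + kappa[[3]] * inv_rxu
}

# Hypothetical allele frequencies for one locus (not real CODIS values)
freqs <- c("12" = 0.2, "13" = 0.3, "14" = 0.5)
full_sibs <- c(0.25, 0.5, 0.25)  # k0, k1, k2 for full siblings
single_locus_lr(c("12", "13"), c("13", "12"), freqs, full_sibs)
```

Working with the reciprocals \(1 / R_X^p\) and \(1 / R_X^u\) lets the non-matching cases be represented as zero, which matches the convention that the corresponding \(\kappa\) term vanishes.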

R script for simulation

The R script “code/known-vs-tested_simulation_script.R” simulates STR pairs and calculates likelihood ratios, shared alleles, and relatedness scores across different populations. The script is divided into the following sections:

Initialization: Load required R packages and set the working directory.

Parameter Configuration: Input parameters for the number of simulations for unrelated and related pairs are specified, allowing adjustable sample sizes.

Data Preparation: Aggregate CODIS allele frequency data from CSV files representing different populations into a single dataset.

IBD Probability Definitions: Construct a table detailing the probabilities of sharing zero, one, or two alleles identical by descent (IBD) for various relationship types.

Allele Frequency Calculation: Calculate allele frequencies within the population for each simulated pair to determine the likelihood of observing specific allele combinations.

Likelihood Ratio Computation: Calculate likelihood ratios for each pair using allele frequencies and IBD probabilities, focusing on \(R_X^p\) and \(R_X^u\) values.

Simulation of Individual Pairs: Generate alleles for individuals based on population-specific allele frequencies to simulate STR pairs.

Shared Allele Analysis: Evaluate the number of alleles shared between pairs, categorizing them by their genetic relationship.

Aggregate Analysis and Visualization: Summarize the results and generate visual representations, such as box plots and heatmaps, to illustrate the relationship between known and tested relationships across populations.

Result Exportation: Export aggregated results as CSV files for further analysis.
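The IBD table, pair simulation, and shared-allele steps listed above could be sketched in base R as follows. This is an illustration under stated assumptions, not the script itself: the \(\kappa\) values are the standard ones for outbred pedigrees, the allele frequencies are made up, and the helper names simulate_pair and count_shared (and the way shared alleles are counted) are hypothetical.

```r
# Standard IBD probabilities (k0, k1, k2) for outbred pedigrees; the table
# used by the actual script may differ.
kappas <- data.frame(
  relationship_type = c("parent_child", "full_siblings", "half_siblings",
                        "cousins", "second_cousins", "unrelated"),
  k0 = c(0, 1/4, 1/2, 3/4, 15/16, 1),
  k1 = c(1, 1/2, 1/2, 1/4, 1/16, 0),
  k2 = c(0, 1/4, 0, 0, 0, 0)
)

# Hypothetical allele frequencies for one locus (not real CODIS values)
freqs <- c("11" = 0.1, "12" = 0.3, "13" = 0.4, "14" = 0.2)

# Simulate one pair of genotypes at a locus for a given kappa = c(k0, k1, k2)
simulate_pair <- function(freqs, kappa) {
  draw <- function(n) sample(names(freqs), n, replace = TRUE, prob = freqs)
  ind1 <- draw(2)
  n_ibd <- sample(0:2, 1, prob = kappa)      # number of alleles shared IBD
  ind2 <- switch(as.character(n_ibd),
    "0" = draw(2),                           # both alleles drawn independently
    "1" = c(sample(ind1, 1), draw(1)),       # one allele copied from ind1
    "2" = ind1                               # both alleles copied from ind1
  )
  list(ind1 = ind1, ind2 = ind2)
}

# Count alleles shared by state, respecting multiplicity (AA vs AB shares 1)
count_shared <- function(g1, g2) {
  alleles <- union(g1, g2)
  sum(vapply(alleles, function(a) min(sum(g1 == a), sum(g2 == a)), integer(1)))
}

set.seed(20230302)
pc <- unlist(kappas[kappas$relationship_type == "parent_child",
                    c("k0", "k1", "k2")])
shared <- replicate(1000, {
  pair <- simulate_pair(freqs, pc)
  count_shared(pair$ind1, pair$ind2)
})
table(shared)
```

Because parent-child pairs always have exactly one allele IBD, every simulated pair in this example shares at least one allele; two shared alleles arise when the second allele happens to match by chance.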

Due to the computational cost of simulating large numbers of STR pairs, the script has been run separately and we visualize the results below.

Simulation results

The comma-delimited simulation results contain 360,000 rows and 6 columns: three character columns (population, known_relationship_type, tested_relationship_type) and three numeric columns (replicate_id, num_shared_alleles_sum, log_R_sum).

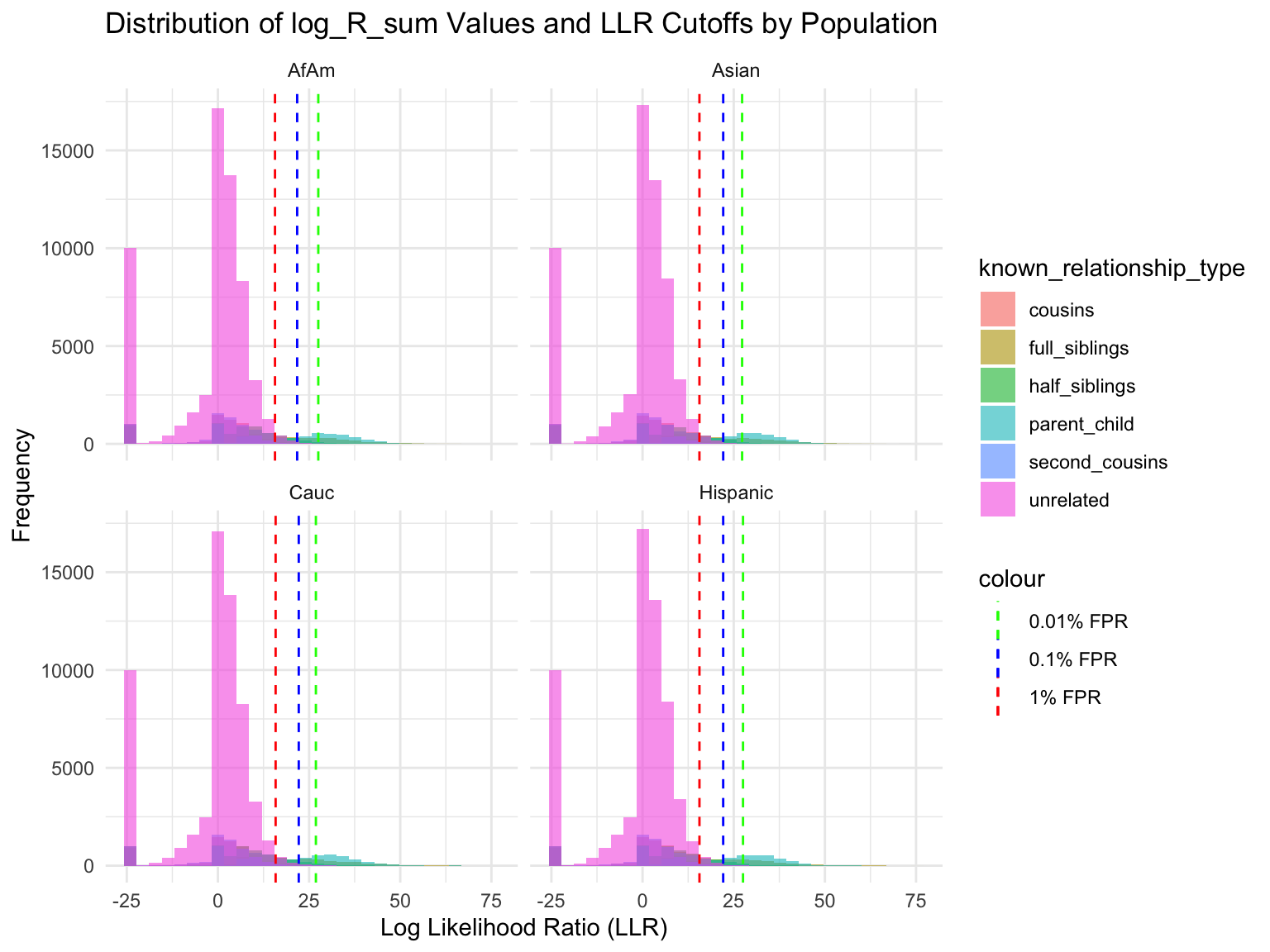

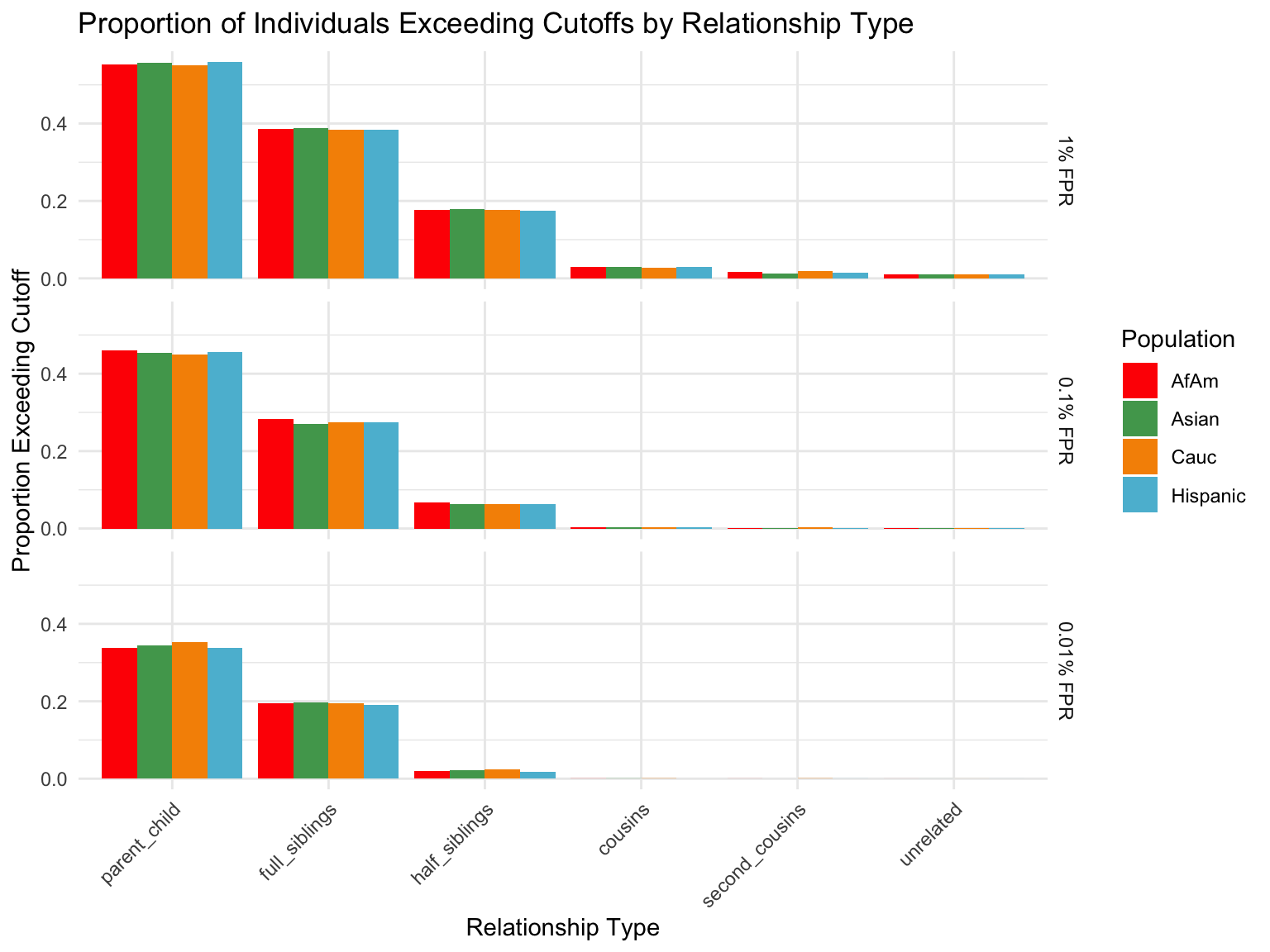

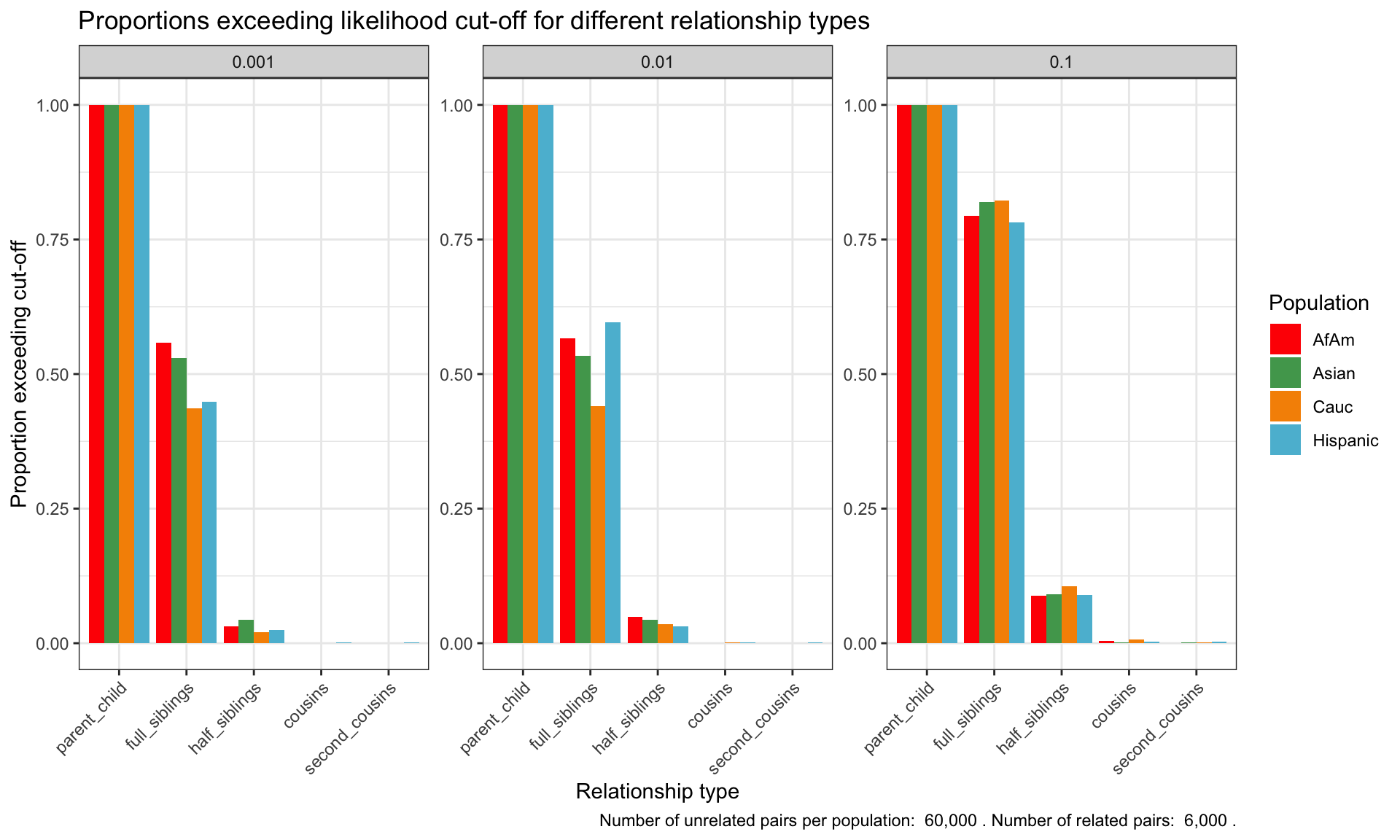

Proportion of individuals of known relationship type exceeding likelihood cut-off

| population | relationship_type | fp_rate | prop_exceeding |
|---|---|---|---|
| AfAm | parent_child | 0.1 | 1.000 |
| Asian | parent_child | 0.1 | 1.000 |
| Cauc | parent_child | 0.1 | 1.000 |
| Hispanic | parent_child | 0.1 | 1.000 |
| AfAm | full_siblings | 0.1 | 0.794 |
| Asian | full_siblings | 0.1 | 0.820 |
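One way the fp_rate and prop_exceeding columns could be related is sketched below. It assumes that the cut-off for a given false positive rate is the (1 - fp_rate) quantile of log_R_sum among unrelated pairs, and that prop_exceeding is the share of truly related pairs above that cut-off. Both the definition and the data are assumptions: the values are synthetic toy draws, not simulation output.

```r
# Toy data only: synthetic log_R_sum values, not results from the simulation.
set.seed(1)
toy <- data.frame(
  known_relationship_type = rep(c("unrelated", "full_siblings"), each = 1000),
  log_R_sum = c(rnorm(1000, mean = -2, sd = 3),
                rnorm(1000, mean = 10, sd = 4))
)

fp_rate <- 0.1

# Assumed definition: cut-off exceeded by a fraction fp_rate of unrelated pairs
unrel <- toy$log_R_sum[toy$known_relationship_type == "unrelated"]
cutoff <- unname(quantile(unrel, probs = 1 - fp_rate))

# Proportion of truly related pairs whose log_R_sum exceeds the cut-off
related <- toy$log_R_sum[toy$known_relationship_type == "full_siblings"]
prop_exceeding <- mean(related > cutoff)

c(cutoff = cutoff, prop_exceeding = prop_exceeding)
```

In the real analysis this calculation would presumably be repeated per population and per tested relationship type, which is why the table carries a population column.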

Cut-offs for each FPR

A tibble: 24 × 7 (first 10 rows shown; 14 more rows and 3 more variables, min_log_R_sum, max_log_R_sum, and count, are not shown)

| population | known_relationship_type | mean_log_R_sum | median_log_R_sum |
|---|---|---|---|
| AfAm | cousins | -0.533 | 2.42 |
| AfAm | full_siblings | 10.5 | 11.5 |
| AfAm | half_siblings | 3.85 | 6.80 |
| AfAm | parent_child | 19.6 | 19.0 |
| AfAm | second_cousins | -1.23 | 1.78 |
| AfAm | unrelated | -2.00 | 0.914 |
| Asian | cousins | -0.538 | 2.42 |
| Asian | full_siblings | 10.5 | 11.6 |
| Asian | half_siblings | 3.82 | 6.78 |
| Asian | parent_child | 19.5 | 18.9 |