Results

2020-August-02

Last updated: 2021-01-03

Checks: 7 0

Knit directory: PredictOutbredCrossVar/

This reproducible R Markdown analysis was created with workflowr (version 1.6.2). The Checks tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

Great! Since the R Markdown file has been committed to the Git repository, you know the exact version of the code that produced these results.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproduciblity it’s best to always run the code in an empty environment.

The command set.seed(20191123) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great job! Using relative paths to the files within your workflowr project makes it easier to run your code on other machines.

Great! You are using Git for version control. Tracking code development and connecting the code version to the results is critical for reproducibility.

The results in this page were generated with repository version e7306d3. See the Past versions tab to see a history of the changes made to the R Markdown and HTML files.

Note that you need to be careful to ensure that all relevant files for the analysis have been committed to Git prior to generating the results (you can use wflow_publish or wflow_git_commit). workflowr only checks the R Markdown file, but you know if there are other scripts or data files that it depends on. Below is the status of the Git repository when the results were generated:

Ignored files:

Ignored: .DS_Store

Ignored: .Rhistory

Ignored: .Rproj.user/

Ignored: analysis/.DS_Store

Ignored: code/.DS_Store

Ignored: data/.DS_Store

Ignored: manuscript/.DS_Store

Ignored: output/.DS_Store

Ignored: output/crossPredictions/.DS_Store

Ignored: output/crossPredictions/.gitignore

Ignored: output/crossPredictions/Icon

Ignored: output/crossPredictions/July2020/

Ignored: output/crossPredictions/defunctDirectionalDomResults/

Ignored: output/crossPredictions/mt_Repeat1_Fold1_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat1_Fold2_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat1_Fold3_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat1_Fold4_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat1_Fold5_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat2_Fold1_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat2_Fold2_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat2_Fold3_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat2_Fold4_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat2_Fold5_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat3_Fold1_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat3_Fold2_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat3_Fold3_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat3_Fold4_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat3_Fold5_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat4_Fold1_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat4_Fold2_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat4_Fold3_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat4_Fold4_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat4_Fold5_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat5_Fold1_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat5_Fold2_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat5_Fold3_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat5_Fold4_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/mt_Repeat5_Fold5_trainset_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/otherRetiredEarlyResults/

Ignored: output/crossPredictions/predUntestedCrossBVs_ReDoSelfs_A_predVarAndCovarBVs.rds

Ignored: output/crossPredictions/predUntestedCrossTGVs_ReDoSelfs_AD_predVarsAndCovars.rds

Ignored: output/crossPredictions/predictedCrossVars_chunk1_2Dec2020.rds

Ignored: output/crossPredictions/predictedCrossVars_chunk2_2Dec2020.rds

Ignored: output/crossPredictions/predictedCrossVars_chunk3_2Dec2020.rds

Ignored: output/crossPredictions/predictedCrossVars_chunk4_2Dec2020.rds

Ignored: output/crossPredictions/predictedCrossVars_chunk5_2Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk1_15Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk1_2Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk2_15Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk2_2Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk3_15Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk3_2Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk4_15Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk4_2Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk5_2Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarTGVs_chunk1_15Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarTGVs_chunk1_2Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarTGVs_chunk2_15Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarTGVs_chunk2_2Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarTGVs_chunk3_15Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarTGVs_chunk3_2Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarTGVs_chunk4_15Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarTGVs_chunk4_2Dec2020.rds

Ignored: output/crossPredictions/predictedDirectionalDomCrossVarTGVs_chunk5_2Dec2020.rds

Ignored: output/crossPredictions/retired_ReDoSelfs/

Ignored: output/crossRealizations/.DS_Store

Ignored: output/mtMarkerEffects/Icon

Untracked files:

Untracked: Abstract_EdingburghCompGenomicsTalk_2020June02.gdoc

Untracked: Icon

Untracked: ScratchSlidesAndNotes.gslides

Untracked: analysis/ICQG6.Rmd

Untracked: analysis/Icon

Untracked: archive/

Untracked: code/Icon

Untracked: data/Icon

Untracked: manuscript/Figures.gslides

Untracked: manuscript/SupplementaryTable06.csv

Untracked: manuscript/SupplementaryTable07.csv

Untracked: manuscript/SupplementaryTable08.csv

Untracked: manuscript/SupplementaryTable09.csv

Untracked: manuscript/SupplementaryTable17.csv

Untracked: manuscript/SupplementaryTable18.csv

Untracked: output/Figures/

Untracked: output/Icon

Untracked: output/Tables/

Untracked: output/crossRealizations/Icon

Untracked: predCrossVar/

Untracked: rsyncs.R

Untracked: rsyncs2.R

Untracked: setupOnServer.R

Unstaged changes:

Modified: analysis/NGCleadersCall.Rmd

Modified: data/Madd_awc.rds

Modified: data/Mdom_awc.rds

Modified: data/blups_forawcdata.rds

Modified: data/dosages_awc.rds

Modified: data/genmap_awc_May2020.rds

Modified: data/haps_awc.rds

Modified: data/iita_blupsForCrossVal_72619.rds

Modified: data/parentwise_crossVal_folds.rds

Modified: data/ped_awc.rds

Modified: data/recombFreqMat_1minus2c_awcmap_May2020.rds

Modified: manuscript/SupplementaryTables.xlsx

Modified: output/accuraciesMeans.rds

Modified: output/accuraciesUC.rds

Modified: output/accuraciesVars.rds

Modified: output/crossPredictions/TableS7_predictedCrossVars.rds

Modified: output/crossPredictions/mt_Repeat1_Fold1_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat1_Fold1_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat1_Fold1_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat1_Fold1_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat1_Fold2_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat1_Fold2_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat1_Fold2_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat1_Fold2_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat1_Fold3_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat1_Fold3_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat1_Fold3_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat1_Fold3_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat1_Fold4_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat1_Fold4_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat1_Fold4_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat1_Fold4_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat1_Fold5_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat1_Fold5_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat1_Fold5_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat1_Fold5_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat2_Fold1_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat2_Fold1_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat2_Fold1_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat2_Fold1_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat2_Fold2_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat2_Fold2_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat2_Fold2_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat2_Fold2_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat2_Fold3_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat2_Fold3_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat2_Fold3_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat2_Fold3_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat2_Fold4_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat2_Fold4_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat2_Fold4_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat2_Fold4_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat2_Fold5_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat2_Fold5_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat2_Fold5_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat2_Fold5_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat3_Fold1_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat3_Fold1_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat3_Fold1_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat3_Fold1_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat3_Fold2_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat3_Fold2_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat3_Fold2_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat3_Fold2_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat3_Fold3_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat3_Fold3_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat3_Fold3_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat3_Fold3_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat3_Fold4_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat3_Fold4_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat3_Fold4_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat3_Fold4_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat3_Fold5_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat3_Fold5_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat3_Fold5_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat3_Fold5_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat4_Fold1_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat4_Fold1_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat4_Fold1_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat4_Fold1_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat4_Fold2_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat4_Fold2_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat4_Fold2_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat4_Fold2_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat4_Fold3_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat4_Fold3_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat4_Fold3_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat4_Fold3_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat4_Fold4_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat4_Fold4_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat4_Fold4_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat4_Fold4_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat4_Fold5_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat4_Fold5_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat4_Fold5_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat4_Fold5_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat5_Fold1_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat5_Fold1_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat5_Fold1_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat5_Fold1_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat5_Fold2_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat5_Fold2_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat5_Fold2_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat5_Fold2_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat5_Fold3_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat5_Fold3_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat5_Fold3_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat5_Fold3_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat5_Fold4_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat5_Fold4_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat5_Fold4_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat5_Fold4_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat5_Fold5_trainset_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/mt_Repeat5_Fold5_trainset_A_predVarsAndCovars.rds

Modified: output/crossPredictions/mt_Repeat5_Fold5_trainset_DirectionalDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/mt_Repeat5_Fold5_trainset_DirectionalDom_predVarsAndCovars.rds

Modified: output/crossPredictions/predUntestedCrossBVs_chunk1_A_predVarAndCovarBVs.rds

Modified: output/crossPredictions/predUntestedCrossBVs_chunk1_DirDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/predUntestedCrossBVs_chunk2_A_predVarAndCovarBVs.rds

Modified: output/crossPredictions/predUntestedCrossBVs_chunk2_DirDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/predUntestedCrossBVs_chunk3_A_predVarAndCovarBVs.rds

Modified: output/crossPredictions/predUntestedCrossBVs_chunk3_DirDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/predUntestedCrossBVs_chunk4_A_predVarAndCovarBVs.rds

Modified: output/crossPredictions/predUntestedCrossBVs_chunk4_DirDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/predUntestedCrossBVs_chunk5_A_predVarAndCovarBVs.rds

Deleted: output/crossPredictions/predUntestedCrossBVs_chunk5_DirDom_predVarAndCovarBVs.rds

Modified: output/crossPredictions/predUntestedCrossTGVs_chunk1_AD_predVarsAndCovars.rds

Modified: output/crossPredictions/predUntestedCrossTGVs_chunk1_DirDom_predVarsAndCovars.rds

Modified: output/crossPredictions/predUntestedCrossTGVs_chunk2_AD_predVarsAndCovars.rds

Modified: output/crossPredictions/predUntestedCrossTGVs_chunk2_DirDom_predVarsAndCovars.rds

Modified: output/crossPredictions/predUntestedCrossTGVs_chunk3_AD_predVarsAndCovars.rds

Modified: output/crossPredictions/predUntestedCrossTGVs_chunk3_DirDom_predVarsAndCovars.rds

Modified: output/crossPredictions/predUntestedCrossTGVs_chunk4_AD_predVarsAndCovars.rds

Modified: output/crossPredictions/predUntestedCrossTGVs_chunk4_DirDom_predVarsAndCovars.rds

Modified: output/crossPredictions/predUntestedCrossTGVs_chunk5_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrossTGVs_chunk5_DirDom_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_209parents_chunk1_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_209parents_chunk1_A_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_209parents_chunk2_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_209parents_chunk2_A_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_209parents_chunk3_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_209parents_chunk3_A_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_209parents_chunk4_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_209parents_chunk4_A_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_209parents_chunk5_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_209parents_chunk5_A_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_top100stdSI_chunk1_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_top100stdSI_chunk1_A_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_top100stdSI_chunk2_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_top100stdSI_chunk2_A_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_top100stdSI_chunk3_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_top100stdSI_chunk3_A_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_top100stdSI_chunk4_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_top100stdSI_chunk4_A_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_top100stdSI_chunk5_AD_predVarsAndCovars.rds

Deleted: output/crossPredictions/predUntestedCrosses_top100stdSI_chunk5_A_predVarsAndCovars.rds

Modified: output/crossPredictions/predictedCrossMeans.rds

Modified: output/crossPredictions/predictedCrossMeans_DirectionalDom_tidy_withSelIndices.rds

Deleted: output/crossPredictions/predictedCrossMeans_GCA_SCA.rds

Modified: output/crossPredictions/predictedCrossMeans_tidy_withSelIndices.rds

Modified: output/crossPredictions/predictedCrossVars_DirectionalDom_tidy_withSelIndices.rds

Deleted: output/crossPredictions/predictedCrossVars_GCA_SCA.rds

Deleted: output/crossPredictions/predictedCrossVars_chunk1.rds

Deleted: output/crossPredictions/predictedCrossVars_chunk2.rds

Deleted: output/crossPredictions/predictedCrossVars_chunk3.rds

Deleted: output/crossPredictions/predictedCrossVars_chunk4.rds

Deleted: output/crossPredictions/predictedCrossVars_chunk5.rds

Modified: output/crossPredictions/predictedCrossVars_tidy_withSelIndices.rds

Modified: output/crossPredictions/predictedDirectionalDomCrossMeans.rds

Deleted: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk1.rds

Deleted: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk2.rds

Deleted: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk3.rds

Deleted: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk4.rds

Deleted: output/crossPredictions/predictedDirectionalDomCrossVarBVs_chunk5.rds

Deleted: output/crossPredictions/predictedDirectionalDomCrossVars_chunk1.rds

Deleted: output/crossPredictions/predictedDirectionalDomCrossVars_chunk2.rds

Deleted: output/crossPredictions/predictedDirectionalDomCrossVars_chunk3.rds

Deleted: output/crossPredictions/predictedDirectionalDomCrossVars_chunk4.rds

Deleted: output/crossPredictions/predictedDirectionalDomCrossVars_chunk5.rds

Modified: output/crossPredictions/predictedUntestedCrossMeansBV.rds

Modified: output/crossPredictions/predictedUntestedCrossMeansDirDom.rds

Modified: output/crossPredictions/predictedUntestedCrossMeansTGV.rds

Modified: output/crossPredictions/predictedUntestedCrossMeans_SelIndices.rds

Modified: output/crossPredictions/predictedUntestedCrossMeans_tidy_traits.rds

Modified: output/crossPredictions/predictedUntestedCrossVars_SelIndices.rds

Modified: output/crossPredictions/predictedUntestedCrossVars_tidy_traits.rds

Modified: output/crossRealizations/realizedCrossMeans.rds

Modified: output/crossRealizations/realizedCrossMeans_BLUPs.rds

Modified: output/crossRealizations/realizedCrossMetrics.rds

Modified: output/crossRealizations/realizedCrossVars.rds

Modified: output/crossRealizations/realizedCrossVars_BLUPs.rds

Modified: output/crossRealizations/realized_cross_means_and_covs_traits.rds

Modified: output/crossRealizations/realized_cross_means_and_vars_selindices.rds

Modified: output/gblups_DirectionalDom_parentwise_crossVal_folds.rds

Modified: output/gblups_geneticgroups.rds

Modified: output/gblups_parentwise_crossVal_folds.rds

Modified: output/gebvs_ModelA_GroupAll_stdSI.rds

Modified: output/mtMarkerEffects/mt_All_A.rds

Modified: output/mtMarkerEffects/mt_All_AD.rds

Modified: output/mtMarkerEffects/mt_All_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_GG_A.rds

Modified: output/mtMarkerEffects/mt_GG_AD.rds

Modified: output/mtMarkerEffects/mt_GG_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold1_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold1_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold1_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold1_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold1_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold1_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold2_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold2_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold2_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold2_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold2_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold2_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold3_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold3_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold3_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold3_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold3_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold3_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold4_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold4_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold4_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold4_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold4_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold4_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold5_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold5_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold5_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold5_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold5_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat1_Fold5_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold1_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold1_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold1_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold1_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold1_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold1_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold2_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold2_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold2_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold2_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold2_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold2_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold3_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold3_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold3_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold3_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold3_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold3_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold4_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold4_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold4_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold4_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold4_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold4_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold5_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold5_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold5_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold5_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold5_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat2_Fold5_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold1_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold1_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold1_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold1_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold1_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold1_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold2_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold2_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold2_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold2_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold2_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold2_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold3_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold3_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold3_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold3_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold3_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold3_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold4_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold4_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold4_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold4_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold4_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold4_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold5_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold5_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold5_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold5_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold5_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat3_Fold5_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold1_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold1_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold1_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold1_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold1_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold1_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold2_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold2_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold2_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold2_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold2_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold2_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold3_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold3_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold3_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold3_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold3_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold3_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold4_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold4_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold4_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold4_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold4_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold4_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold5_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold5_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold5_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold5_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold5_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat4_Fold5_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold1_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold1_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold1_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold1_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold1_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold1_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold2_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold2_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold2_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold2_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold2_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold2_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold3_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold3_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold3_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold3_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold3_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold3_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold4_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold4_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold4_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold4_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold4_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold4_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold5_testset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold5_testset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold5_testset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold5_trainset_A.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold5_trainset_AD.rds

Modified: output/mtMarkerEffects/mt_Repeat5_Fold5_trainset_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_TMS13_A.rds

Modified: output/mtMarkerEffects/mt_TMS13_AD.rds

Modified: output/mtMarkerEffects/mt_TMS13_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_TMS14_A.rds

Modified: output/mtMarkerEffects/mt_TMS14_AD.rds

Modified: output/mtMarkerEffects/mt_TMS14_DirectionalDom.rds

Modified: output/mtMarkerEffects/mt_TMS15_A.rds

Modified: output/mtMarkerEffects/mt_TMS15_AD.rds

Modified: output/mtMarkerEffects/mt_TMS15_DirectionalDom.rds

Modified: output/obsVSpredMeans.rds

Modified: output/obsVSpredUC.rds

Modified: output/obsVSpredVars.rds

Modified: output/pmv_DirectionalDom_varcomps_geneticgroups.rds

Modified: output/pmv_varcomps_geneticgroups.rds

Modified: output/pmv_varcomps_geneticgroups_tidy_includingSIvars.rds

Modified: workflowr_log.R

Note that any generated files, e.g. HTML, png, CSS, etc., are not included in this status report because it is ok for generated content to have uncommitted changes.

These are the previous versions of the repository in which changes were made to the R Markdown (analysis/Results.Rmd) and HTML (docs/Results.html) files. If you’ve configured a remote Git repository (see ?wflow_git_remote), click on the hyperlinks in the table below to view the files as they were in that past version.

| File | Version | Author | Date | Message |

|---|---|---|---|---|

| html | 22e6c87 | wolfemd | 2021-01-03 | Build site. |

| Rmd | ef3f7b3 | wolfemd | 2021-01-02 | Compile submission version of all Rmds with outstanding, uncommitted |

| Rmd | 2228d20 | wolfemd | 2020-12-15 | Work in progress. Finished re-doing predictions with both self-cross handling and PMV VarD bugs fixed. Discovered NEW bug in DirDom results. |

| html | 34c84f3 | wolfemd | 2020-10-27 | Build site. |

| Rmd | 57325b7 | wolfemd | 2020-10-27 | Figures and results for second COMPLETE draft. Publish final changes |

| Rmd | b4edd2c | wolfemd | 2020-10-27 | Start workflowr project. |

| Rmd | 7cb77ef | wolfemd | 2020-10-27 | Revised and improved the “exploration of untested crosses”. Includes a network analysis of parents and matings selected. Returns the selfs to all analyses and actually highlights them now. |

| html | 3dbb1e8 | wolfemd | 2020-10-08 | Site built for first COMPLETE draft, shared with co-authors. |

| html | 9714c34 | wolfemd | 2020-09-05 | Build site. |

| Rmd | c2fd60b | wolfemd | 2020-09-05 | Supress warnings and messages. Add (some) Figure legends. |

| html | b06eee7 | wolfemd | 2020-08-31 | Build site. |

| Rmd | 849d7c1 | wolfemd | 2020-08-31 | Track manuscript draft, figures and sup. tables. All results drafted with references to sup. figures and tables in order committed here. |

| Rmd | d4f0da1 | wolfemd | 2020-08-27 | Old version of Sup. Figures (just misc old code for plots), commmiting for posterity before assembling proper draft from figures in Results.Rmd. |

library(tidyverse); library(magrittr)Initial summaries

Summary of the pedigree and germplasm

ped<-readRDS(here::here("data","ped_awc.rds"))

ped %>%

count(sireID,damID) %$% summary(n) Min. 1st Qu. Median Mean 3rd Qu. Max.

1.000 2.000 4.000 6.924 10.000 72.000 ped %>%

pivot_longer(cols=c(sireID,damID),names_to = "MaleOrFemale", values_to = "Parent") %>%

group_by(Parent) %>%

summarize(Ncontributions=n()) %$% summary(Ncontributions) Min. 1st Qu. Median Mean 3rd Qu. Max.

1.00 4.00 16.00 30.61 36.00 256.00 There were 3199 comprising 462 families, derived from 209 parents in our pedigree. Parents were used an average of 31 (median 16, range 1-256) times as sire and/or dam in the pedigree. The mean family size was 7 (median 4, range 1-72).

propHom<-readxl::read_xlsx(here::here("manuscript","SupplementaryTables.xlsx"),sheet = "TableS14")

summary(propHom$PropSNP_homozygous) Min. 1st Qu. Median Mean 3rd Qu. Max.

0.7639 0.8254 0.8364 0.8363 0.8476 0.9273 The average proportion homozygous was 0.84 (range 0.76-0.93) across the 3199 pedigree members (computed over 33370 variable SNP; Table S14).

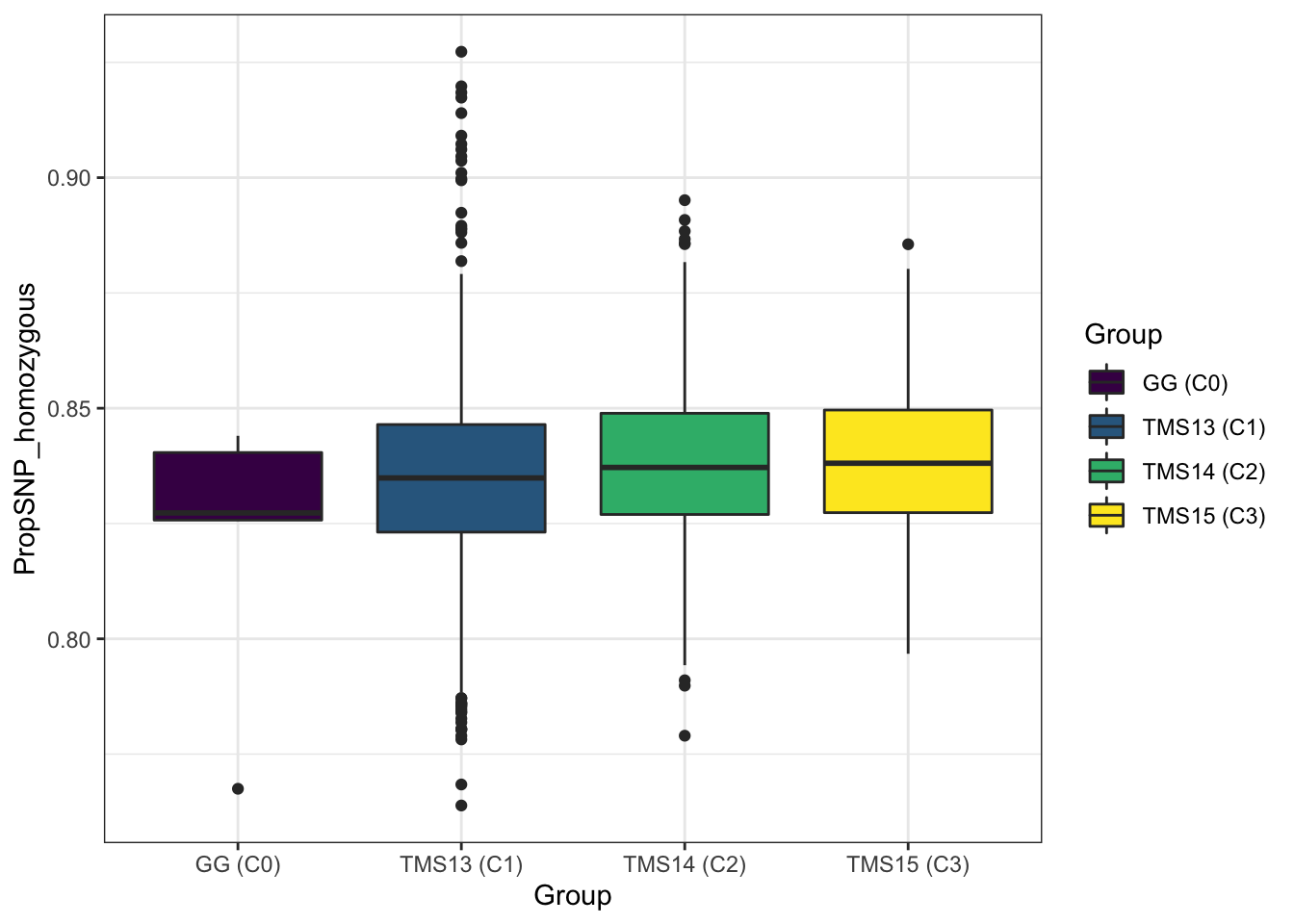

As expected for a population under recurrent selection, the homozygosity rate increases (though only fractionally) from the C0 (mean 0.826), C1 (0.835), C2 (0.838), C3 (0.839) (Figure S01).

propHom %>%

mutate(Group=ifelse(!grepl("TMS13|TMS14|TMS15", GID),"GG (C0)",NA),

Group=ifelse(grepl("TMS13", GID),"TMS13 (C1)",Group),

Group=ifelse(grepl("TMS14", GID),"TMS14 (C2)",Group),

Group=ifelse(grepl("TMS15", GID),"TMS15 (C3)",Group)) %>%

group_by(Group) %>%

summarize(meanPropHom=round(mean(PropSNP_homozygous),3))# A tibble: 4 x 2

Group meanPropHom

<chr> <dbl>

1 GG (C0) 0.826

2 TMS13 (C1) 0.835

3 TMS14 (C2) 0.838

4 TMS15 (C3) 0.839propHom %>%

mutate(Group=ifelse(!grepl("TMS13|TMS14|TMS15", GID),"GG (C0)",NA),

Group=ifelse(grepl("TMS13", GID),"TMS13 (C1)",Group),

Group=ifelse(grepl("TMS14", GID),"TMS14 (C2)",Group),

Group=ifelse(grepl("TMS15", GID),"TMS15 (C3)",Group)) %>%

ggplot(.,aes(x=Group,y=PropSNP_homozygous,fill=Group)) + geom_boxplot() +

theme_bw() +

scale_fill_viridis_d()

Summary of the cross-validation scheme

## Table S2: Summary of cross-validation scheme

parentfold_summary<-readxl::read_xlsx(here::here("manuscript","SupplementaryTables.xlsx"),sheet = "TableS02")

parentfold_summary %>%

summarize_if(is.numeric,~ceiling(mean(.)))# A tibble: 1 x 4

Ntestparents Ntrainset Ntestset NcrossesToPredict

<dbl> <dbl> <dbl> <dbl>

1 42 1833 1494 167parentfold_summary %>%

summarize_if(is.numeric,~ceiling(min(.))) %>% mutate(Value="Min") %>%

bind_rows(parentfold_summary %>%

summarize_if(is.numeric,~ceiling(max(.))) %>% mutate(Value="Max"))# A tibble: 2 x 5

Ntestparents Ntrainset Ntestset NcrossesToPredict Value

<dbl> <dbl> <dbl> <dbl> <chr>

1 41 1245 1003 143 Min

2 42 2323 2081 204 Max Across the 5 replications of 5-fold cross-validation the average number of samples was 1833 (range 1245-2323) for training sets and 1494 (range 1003-2081) for testing sets. The 25 training-testing pairs set-up an average of 167 (range 143-204) crosses-to-predict (Table S02).

Summary of the BLUPs and sel. index

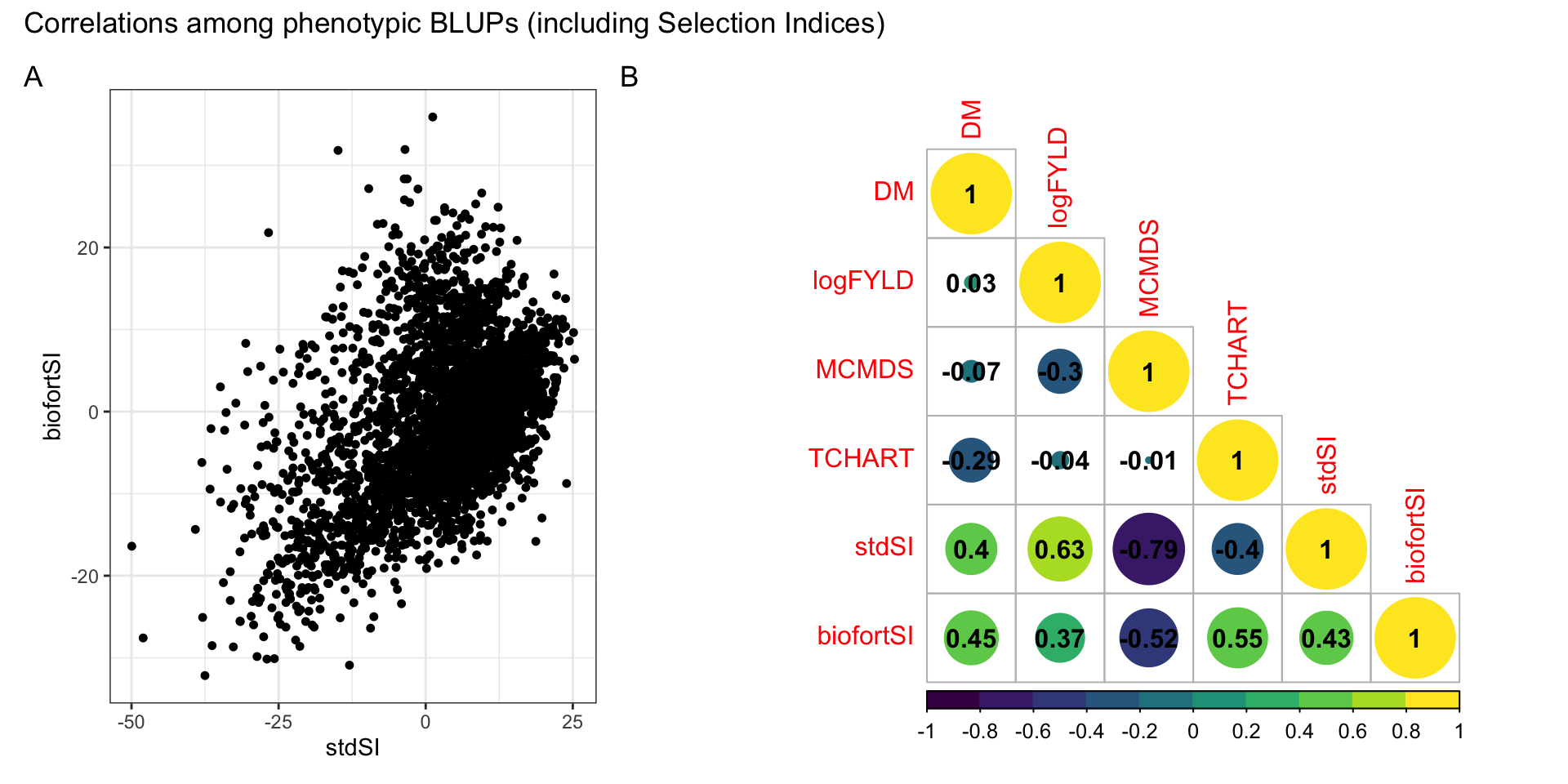

The correlation between phenotypic BLUPs for the two SI (stdSI and biofortSI; Table S01) was 0.43 (Figure S02). The correlation between DM and TCHART BLUPs, for which we had a priori expectations, was -0.29.

library(tidyverse); library(magrittr);

# Selection weights -----------

indices<-readxl::read_xlsx(here::here("manuscript","SupplementaryTables.xlsx"),sheet = "TableS01")

# BLUPs -----------

blups<-readRDS(here::here("data","blups_forawcdata.rds")) %>%

select(Trait,blups) %>%

unnest(blups) %>%

select(Trait,germplasmName,BLUP) %>%

spread(Trait,BLUP) %>%

select(germplasmName,all_of(c("DM","logFYLD","MCMDS","TCHART")))

blups %<>%

select(germplasmName,all_of(indices$Trait)) %>%

mutate(stdSI=blups %>%

select(all_of(indices$Trait)) %>%

as.data.frame(.) %>%

as.matrix(.)%*%indices$stdSI,

biofortSI=blups %>%

select(all_of(indices$Trait)) %>%

as.data.frame(.) %>%

as.matrix(.)%*%indices$biofortSI)Correlations among phenotypic BLUPs (including Selection Indices)

#```{r, fig.show="hold", out.width="50%"}

library(patchwork)

p1<-ggplot(blups,aes(x=stdSI,y=biofortSI)) + geom_point(size=1.25) + theme_bw()

corMat<-cor(blups[,-1],use = 'pairwise.complete.obs')

(p1 | ~corrplot::corrplot(corMat, type = 'lower', col = viridis::viridis(n = 10), diag = T,addCoef.col = "black")) +

plot_layout(nrow=1, widths = c(0.35,0.65)) +

plot_annotation(tag_levels = 'A',

title = 'Correlations among phenotypic BLUPs (including Selection Indices)')

Predictions of means

library(tidyverse); library(magrittr);

# Table S6: Predicted and observed cross means

# obsVSpredMeans<-readxl::read_xlsx(here::here("manuscript","SupplementaryTables.xlsx"),sheet = "TableS06")

# Table S10: Accuracies predicting the mean

accMeans<-readxl::read_xlsx(here::here("manuscript","SupplementaryTables.xlsx"),sheet = "TableS10")Compare validation data types

accMeans %>% #count(ValidationData,Model,VarComp)

spread(ValidationData,Accuracy) %>%

ggplot(.,aes(x=iidBLUPs,y=GBLUPs,color=predOf,shape=Trait)) +

geom_point() +

geom_abline(slope=1,color='darkred') +

facet_wrap(~predOf+Model,scales = 'free') +

theme_bw() + scale_color_viridis_d()

accMeans %>%

spread(ValidationData,Accuracy) %>%

mutate(diffAcc=GBLUPs-iidBLUPs) %$% summary(diffAcc) Min. 1st Qu. Median Mean 3rd Qu. Max.

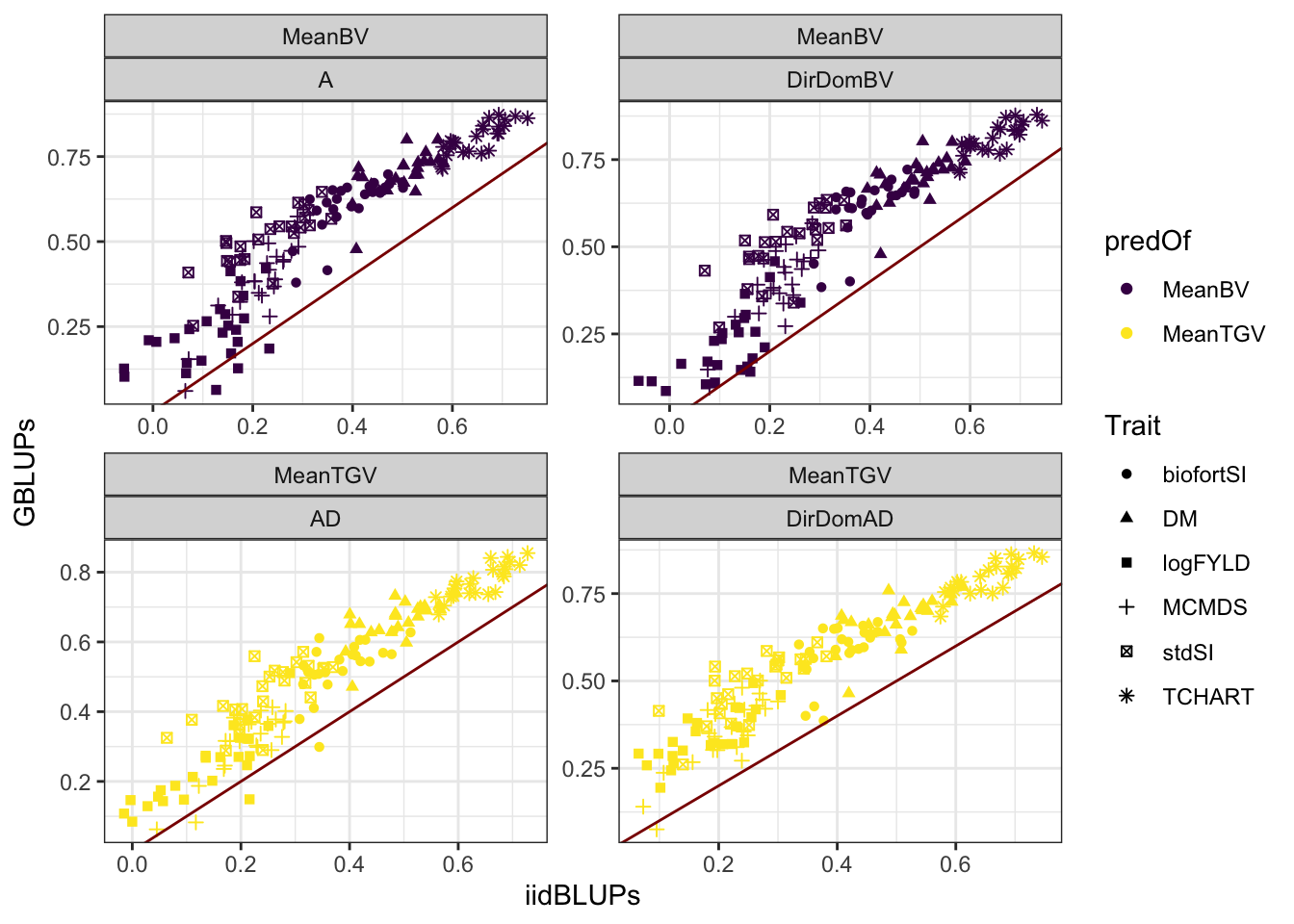

-0.06718 0.12992 0.17184 0.17368 0.21438 0.38530 Prediction accuracy using GBLUPs as validation give a nearly uniform higher correlation (mean 0.17 higher).

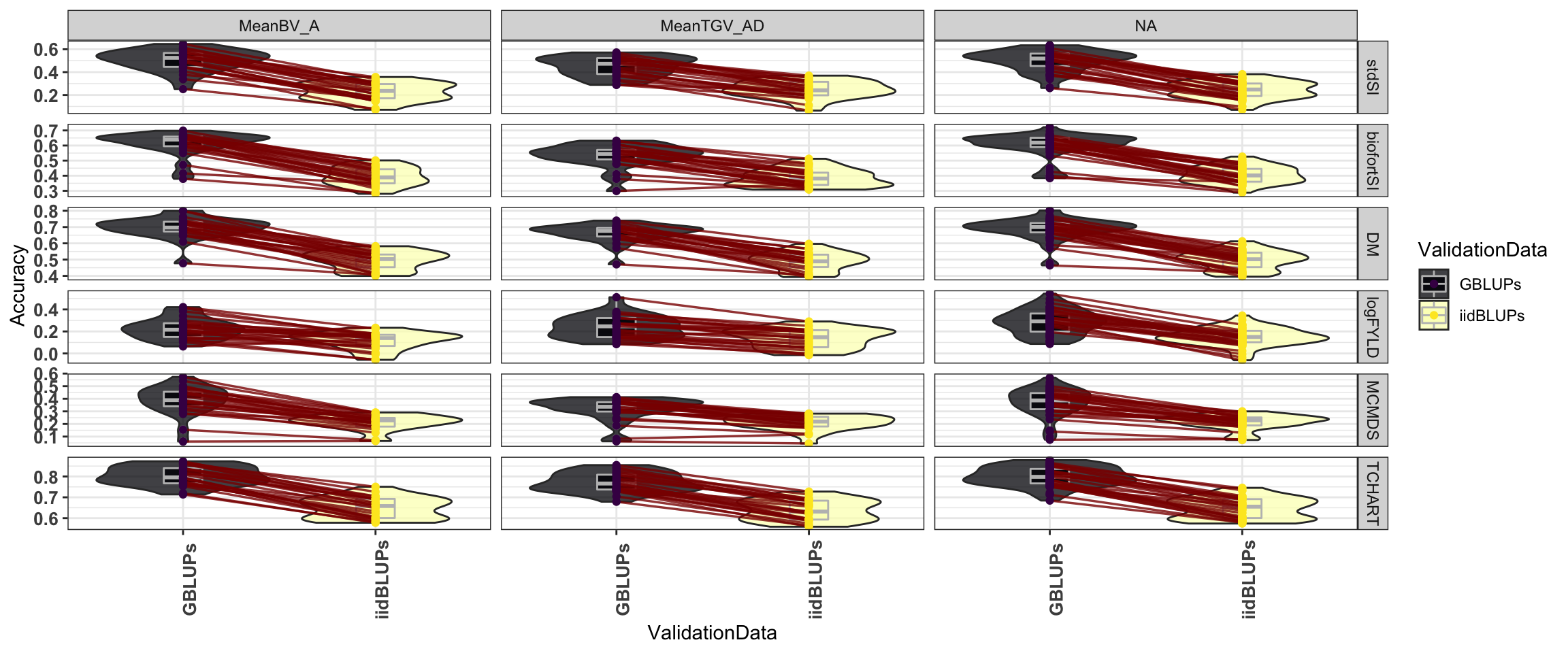

The figure below tries to show that accuracies per trait-fold-rep-Model do not re-rank much from iid-to-GBLUP validation data.

forplot<-accMeans %>%

mutate(Pred=paste0(predOf,"_",Model),

Pred=factor(Pred,levels=c("MeanBV_A","MeanBV_DirDom","MeanTGV_AD","MeanTGV_DirDom")),

Trait=factor(Trait,levels=c("stdSI","biofortSI","DM","logFYLD","MCMDS","TCHART")),

predOf=factor(predOf,levels=c("MeanBV","MeanTGV")),

Model=factor(Model,levels=c("A","AD","DirDom")),

RepFold=paste0(Repeat,"_",Fold,"_",Trait))

forplot %>%

ggplot(aes(x=ValidationData,y=Accuracy)) +

geom_violin(data=forplot,aes(fill=ValidationData), alpha=0.75) +

geom_boxplot(data=forplot,aes(fill=ValidationData), alpha=0.85, color='gray',width=0.2) +

geom_line(data=forplot,aes(group=RepFold),color='darkred',size=0.6,alpha=0.8) +

geom_point(data=forplot,aes(color=ValidationData, group=RepFold),size=1.5) +

theme_bw() +

scale_fill_viridis_d(option = "A") +

scale_color_viridis_d() +

theme(axis.text.x = element_text(face='bold', size=10, angle=90),

axis.text.y = element_text(face='bold', size=10)) +

facet_grid(Trait~Pred, scales='free_y')

labs(title = "Accuracies per trait-fold-rep-Model do not re-rank much from iid-to-GBLUP validation data")$title

[1] "Accuracies per trait-fold-rep-Model do not re-rank much from iid-to-GBLUP validation data"

attr(,"class")

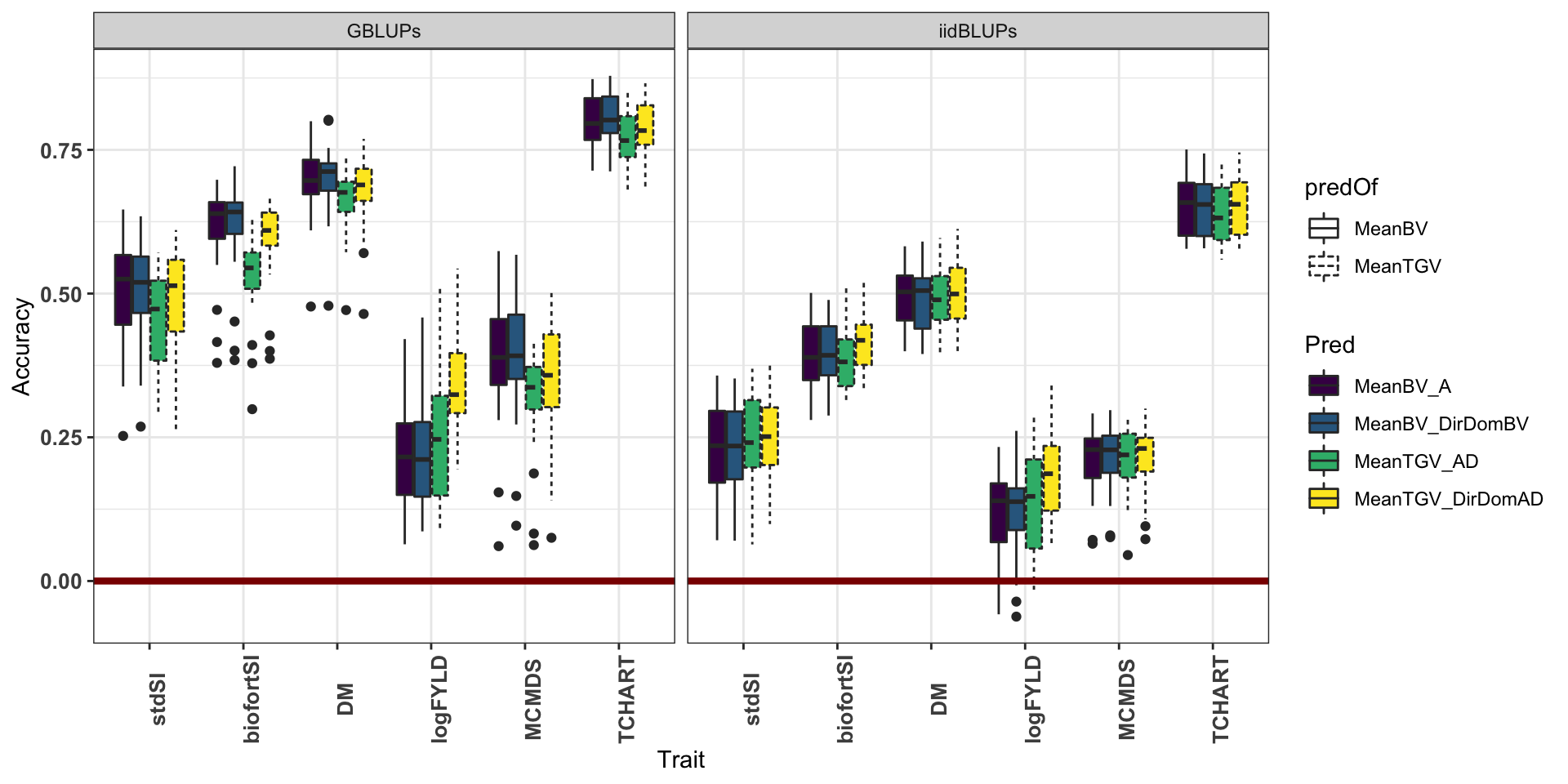

[1] "labels"accMeans %>%

mutate(Pred=paste0(predOf,"_",Model),

Pred=factor(Pred,levels=c("MeanBV_A","MeanBV_DirDomBV","MeanTGV_AD","MeanTGV_DirDomAD")),

Trait=factor(Trait,levels=c("stdSI","biofortSI","DM","logFYLD","MCMDS","TCHART")),

predOf=factor(predOf,levels=c("MeanBV","MeanTGV")),

Model=factor(Model,levels=c("A","AD","DirDom"))) %>%

ggplot(.,aes(x=Trait,y=Accuracy,fill=Pred,linetype=predOf)) +

geom_boxplot() + theme_bw() + scale_fill_viridis_d() +

geom_hline(yintercept = 0, color='darkred', size=1.5) +

theme(axis.text.x = element_text(face='bold', size=10, angle=90),

axis.text.y = element_text(face='bold', size=10)) +

facet_grid(.~ValidationData)

From hereon, for means, only considering GBLUP validation data.

Compare models

accMeans %>%

filter(ValidationData=="GBLUPs") %>%

group_by(Model,predOf) %>%

summarize(meanAcc=mean(Accuracy)) %>%

mutate_if(is.numeric,~round(.,3)) %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom")) %>%

spread(predOf,meanAcc) %>%

mutate(diffAcc=MeanTGV-MeanBV)# A tibble: 2 x 4

# Groups: Model [2]

Model MeanBV MeanTGV diffAcc

<chr> <dbl> <dbl> <dbl>

1 ClassicAD 0.538 0.495 -0.043

2 DirDom 0.541 0.543 0.002On average, across traits, the accuracy of predicting family-mean TGV were lower by -0.043 (0.002) for the ClassicAD (and DirDom) models.

accMeans %>%

filter(ValidationData=="GBLUPs") %>%

group_by(Model,predOf,Trait) %>%

summarize(meanAcc=mean(Accuracy)) %>%

mutate_if(is.numeric,~round(.,3)) %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom")) %>%

spread(predOf,meanAcc) %>%

mutate(diffAcc=MeanTGV-MeanBV)# A tibble: 12 x 5

# Groups: Model [2]

Model Trait MeanBV MeanTGV diffAcc

<chr> <chr> <dbl> <dbl> <dbl>

1 ClassicAD biofortSI 0.609 0.531 -0.0780

2 ClassicAD DM 0.697 0.663 -0.0340

3 ClassicAD logFYLD 0.227 0.241 0.0140

4 ClassicAD MCMDS 0.389 0.316 -0.073

5 ClassicAD stdSI 0.503 0.449 -0.0540

6 ClassicAD TCHART 0.803 0.773 -0.03

7 DirDom biofortSI 0.612 0.589 -0.023

8 DirDom DM 0.698 0.679 -0.0190

9 DirDom logFYLD 0.222 0.349 0.127

10 DirDom MCMDS 0.393 0.355 -0.038

11 DirDom stdSI 0.51 0.491 -0.019

12 DirDom TCHART 0.81 0.792 -0.018 But on a per-trait basis, for yield MeanTGV>MeanBV by 0.01 in the ClassicAD model, and was even higher (by 0.13) for the DirDom model.

accMeans %>%

filter(ValidationData=="GBLUPs") %>%

group_by(Model,predOf) %>%

summarize(meanAcc=mean(Accuracy)) %>%

mutate_if(is.numeric,~round(.,3)) %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom")) %>%

spread(Model,meanAcc) %>%

mutate(diffAcc=DirDom-ClassicAD)# A tibble: 2 x 4

predOf ClassicAD DirDom diffAcc

<chr> <dbl> <dbl> <dbl>

1 MeanBV 0.538 0.541 0.003

2 MeanTGV 0.495 0.543 0.048For both BV (0.003) and TGV (0.05), the DirDom model was on average more accurate.

accMeans %>%

filter(ValidationData=="GBLUPs") %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom")) %>%

group_by(Model,predOf,Trait) %>%

summarize(meanAcc=mean(Accuracy)) %>%

mutate_if(is.numeric,~round(.,3)) %>%

spread(Model,meanAcc) %>%

mutate(diffAcc=DirDom-ClassicAD) %>%

select(predOf,Trait,diffAcc) %>%

spread(predOf,diffAcc)# A tibble: 6 x 3

Trait MeanBV MeanTGV

<chr> <dbl> <dbl>

1 biofortSI 0.003 0.0580

2 DM 0.001 0.016

3 logFYLD -0.005 0.108

4 MCMDS 0.004 0.0390

5 stdSI 0.007 0.0420

6 TCHART 0.007 0.019 # ggplot(.,aes(x=MeanBV,y=MeanTGV,label=Trait)) + geom_label() + geom_point() + theme_bw() +

# labs(title="Compare diff Acc (DirDom-ClassicAD)") + geom_abline(slope=1)The accuracy for yield was higher for TGV than BV by 0.11had the highest increase (0.231 for BVs and 0.181 for TGVs) when using the DirDom vs. the ClassicAD model. DM and the StdSI were both more poorly predicted.

Predictions of variances and covariances

## Table S7: Predicted cross variances

predVars<-read.csv(here::here("manuscript","SupplementaryTable07.csv"),stringsAsFactors = F)PMV vs. VPM

First thing is to compare the PMV and VPM results. Ideally, they will be the same in accuracy and provide similar rankings. VPM is much faster and we would prefer to use it, e.g. for the predictions of untested crosses.

predVars %>%

group_by(Model,VarComp) %>%

summarize(corPMV_VPM=cor(VPM,PMV),

pctIncreasePMV_over_VPM=mean((PMV-VPM)/VPM))# A tibble: 6 x 4

# Groups: Model [4]

Model VarComp corPMV_VPM pctIncreasePMV_over_VPM

<chr> <chr> <dbl> <dbl>

1 A VarA 0.987 9.29

2 AD VarA 0.986 16.0

3 AD VarD 0.974 12.5

4 DirDomAD VarA 0.983 10.4

5 DirDomAD VarD 0.970 16.9

6 DirDomBV VarA 0.986 11.7 Across all predictions and models, the correlation between the PMV and VPM was very high.

predVars %>%

group_by(Model,VarComp) %>%

summarize(corPMV_VPM=cor(VPM,PMV),

pctIncreasePMV_over_VPM=mean((PMV-VPM)/VPM)) %$% mean(corPMV_VPM)[1] 0.9809162However, there is a difference in scale between predictions by VPM and PMV as seen in the figure below.

predVars %>%

mutate(VarCovar=paste0(Trait1,"_",Trait2),

Pred=paste0(Model,"_",VarComp),

diffPredVar=PMV-VPM) %>%

ggplot(.,aes(x=Pred,y=diffPredVar,fill=Pred,linetype=VarComp)) +

geom_boxplot() + facet_wrap(~VarCovar,scales='free',nrow=2) +

geom_hline(yintercept = 0) + theme_bw() +

theme(axis.text.x = element_text(angle=90)) +

scale_fill_viridis_d() +

labs(title="The difference between PMV and VPM for variance and covariance predictions",

y="diffPredVar = PMV minus VPM ")

PMV gave consistently higher variance predictions and larger covariance (\(|\sigma|\)) (either more negative i.e. DM-TCHART or more positive i.e. MCMDS_TCHART).

What about prediction accuracy according to PMV vs. VPM?

## Table S11: Accuracies predicting the variances

accVars<-readxl::read_xlsx(here::here("manuscript","SupplementaryTables.xlsx"),sheet = "TableS11")

accVars %>%

select(-AccuracyCor) %>%

spread(VarMethod,AccuracyWtCor) %>%

mutate(diffAcc=PMV-VPM) %$% summary(diffAcc) Min. 1st Qu. Median Mean 3rd Qu. Max.

-0.60769 -0.14047 -0.05226 -0.07392 0.01550 0.62149 PMV-based estimates of prediction accuracy were nearly uniformly lower (mean decrease in acc. -0.07).

accVars %>%

select(-AccuracyCor) %>%

spread(VarMethod,AccuracyWtCor) %>%

mutate(VarCovar=paste0(Trait1,"_",Trait2),

Pred=paste0(Model,"_",predOf),

diffAcc=PMV-VPM) %>%

ggplot(.,aes(x=Pred,y=diffAcc,fill=Pred,linetype=predOf)) +

geom_boxplot() + facet_wrap(~VarCovar,scales='free',nrow=2) +

geom_hline(yintercept = 0) + theme_bw() +

theme(axis.text.x = element_text(angle=90)) +

scale_fill_viridis_d() +

labs(title="The difference between PMV and VPM in terms of prediction accuracy",

y="diffPredAcc = predAccPMV minus predAccVPM ")

We will proceed with PMV results, except for the exploratory analyses, where we will save time/computation and use the VPM.

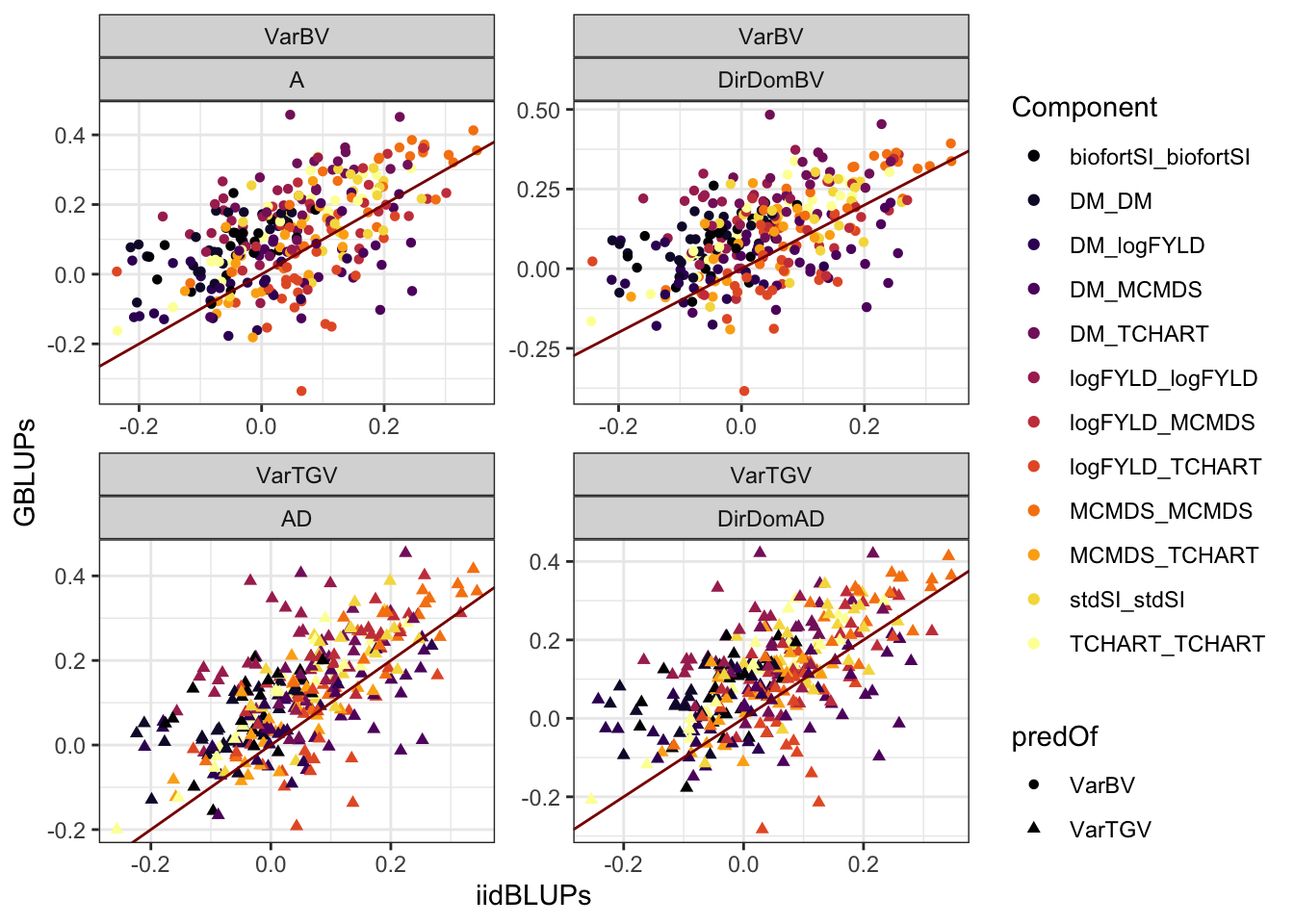

Compare validation data types

accVars %>%

filter(VarMethod=="PMV") %>%

select(-AccuracyCor) %>% #count(ValidationData,Model,VarComp)

spread(ValidationData,AccuracyWtCor) %>%

mutate(Component=paste0(Trait1,"_",Trait2)) %>%

ggplot(.,aes(x=iidBLUPs,y=GBLUPs,shape=predOf,color=Component)) +

geom_point() +

geom_abline(slope=1,color='darkred') +

facet_wrap(~predOf+Model,scales = 'free') +

theme_bw() + scale_color_viridis_d(option = "B")

accVars %>%

filter(VarMethod=="PMV") %>%

select(-AccuracyCor) %>%

spread(ValidationData,AccuracyWtCor) %>%

mutate(diffAcc=GBLUPs-iidBLUPs) %$% summary(diffAcc) Min. 1st Qu. Median Mean 3rd Qu. Max.

-0.400391 0.002691 0.073790 0.067121 0.136685 0.437231 Similar to the means, GBLUPs as validation data for variances was higher on average (mean 0.073).

Below I make several plots of the data to explore the consequences of using GBLUPs vs. iidBLUPs as validation data. The first one shows (for simplicity just for the variances on the SI’s) that there is re-ranking of accuracies b/t validation data-types. The boxplots that follow try to determine if similar conclusions would be reached from either validation data.

forplot<-accVars %>%

filter(VarMethod=="PMV") %>%

filter(Trait1==Trait2,grepl("SI",Trait1)) %>%

mutate(Pred=paste0(predOf,"_",Model),

Pred=factor(Pred,levels=c("VarBV_A","VarBV_DirDomBV","VarTGV_AD","VarTGV_DirDomAD")),

Trait1=factor(Trait1,levels=c("stdSI","biofortSI")),#,"DM","logFYLD","MCMDS","TCHART")),

Trait2=factor(Trait2,levels=c("stdSI","biofortSI")),#,"DM","logFYLD","MCMDS","TCHART")),

Component=paste0(Trait1,"_",Trait2),

predOf=factor(predOf,levels=c("VarBV","VarTGV")),

Model=factor(Model,levels=c("A","AD","DirDomBV","DirDomAD")),

RepFold=paste0(Repeat,"_",Fold,"_",Component))

forplot %>%

ggplot(aes(x=ValidationData,y=AccuracyWtCor)) +

geom_violin(data=forplot,aes(fill=ValidationData), alpha=0.75) +

geom_boxplot(data=forplot,aes(fill=ValidationData), alpha=0.85, color='gray',width=0.2) +

geom_line(data=forplot,aes(group=RepFold),color='darkred',size=0.6,alpha=0.8) +

geom_point(data=forplot,aes(color=ValidationData, group=RepFold),size=1.5) +

theme_bw() +

scale_fill_viridis_d(option = "A") +

scale_color_viridis_d() +

theme(axis.text.x = element_text(face='bold', size=10, angle=90),

axis.text.y = element_text(face='bold', size=10)) +

facet_grid(Component~Pred, scales='free_y') +

labs(title="Plot of variance-prediction accuracy: Re-ranking according choice of validation?")

| Version | Author | Date |

|---|---|---|

| 22e6c87 | wolfemd | 2021-01-03 |

forplot<-accVars %>%

filter(VarMethod=="PMV") %>%

mutate(Pred=paste0(predOf,"_",Model),

Pred=factor(Pred,levels=c("VarBV_A","VarTGV_AD","VarBV_DirDomBV","VarTGV_DirDomAD")),

Trait1=factor(Trait1,levels=c("stdSI","biofortSI","DM","logFYLD","MCMDS","TCHART")),

Trait2=factor(Trait2,levels=c("stdSI","biofortSI","DM","logFYLD","MCMDS","TCHART")),

Component=paste0(Trait1,"_",Trait2),

predOf=factor(predOf,levels=c("VarBV","VarTGV")),

Model=factor(Model,levels=c("A","AD","DirDomBV","DirDomAD")),

RepFold=paste0(Repeat,"_",Fold,"_",Component))

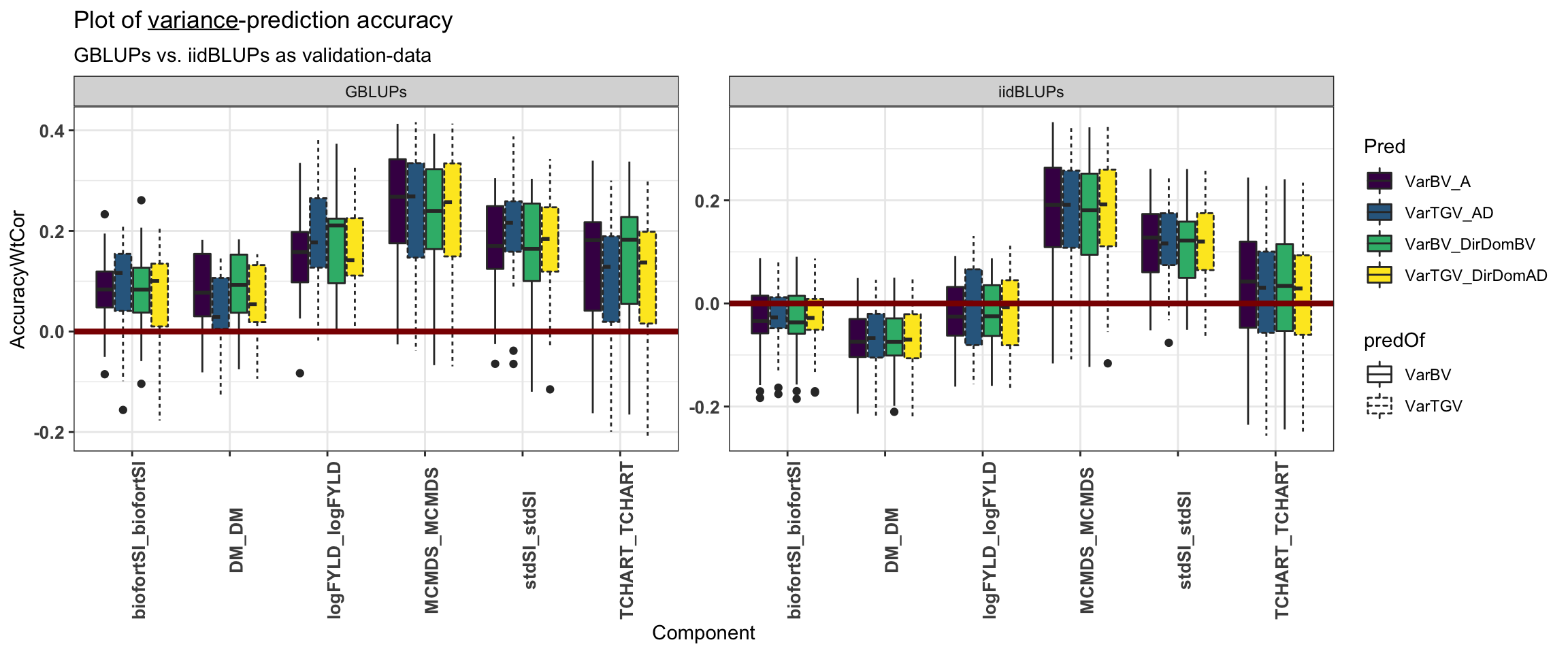

forplot %>%

filter(Trait1==Trait2) %>%

ggplot(.,aes(x=Component,y=AccuracyWtCor,fill=Pred,linetype=predOf)) +

geom_boxplot() + theme_bw() + scale_fill_viridis_d() +

geom_hline(yintercept = 0, color='darkred', size=1.5) +

theme(axis.text.x = element_text(face='bold', size=10, angle=90),

axis.text.y = element_text(face='bold', size=10)) +

facet_wrap(~ValidationData,scales='free') +

ggtitle(expression(paste("Plot of ", underline(variance), "-prediction accuracy"))) +

labs(subtitle="GBLUPs vs. iidBLUPs as validation-data")

forplot %>%

filter(VarMethod=="PMV") %>%

filter(Trait1!=Trait2) %>%

ggplot(.,aes(x=Component,y=AccuracyWtCor,fill=Pred,linetype=predOf)) +

geom_boxplot() + theme_bw() + scale_fill_viridis_d() +

geom_hline(yintercept = 0, color='darkred', size=1.5) +

theme(axis.text.x = element_text(face='bold', size=10, angle=90),

axis.text.y = element_text(face='bold', size=10)) +

facet_wrap(~ValidationData,scales='free') +

ggtitle(expression(paste("Plot of ", underline(co), "variance-prediction accuracy"))) +

labs(subtitle="GBLUPs vs. iidBLUPs as validation-data")

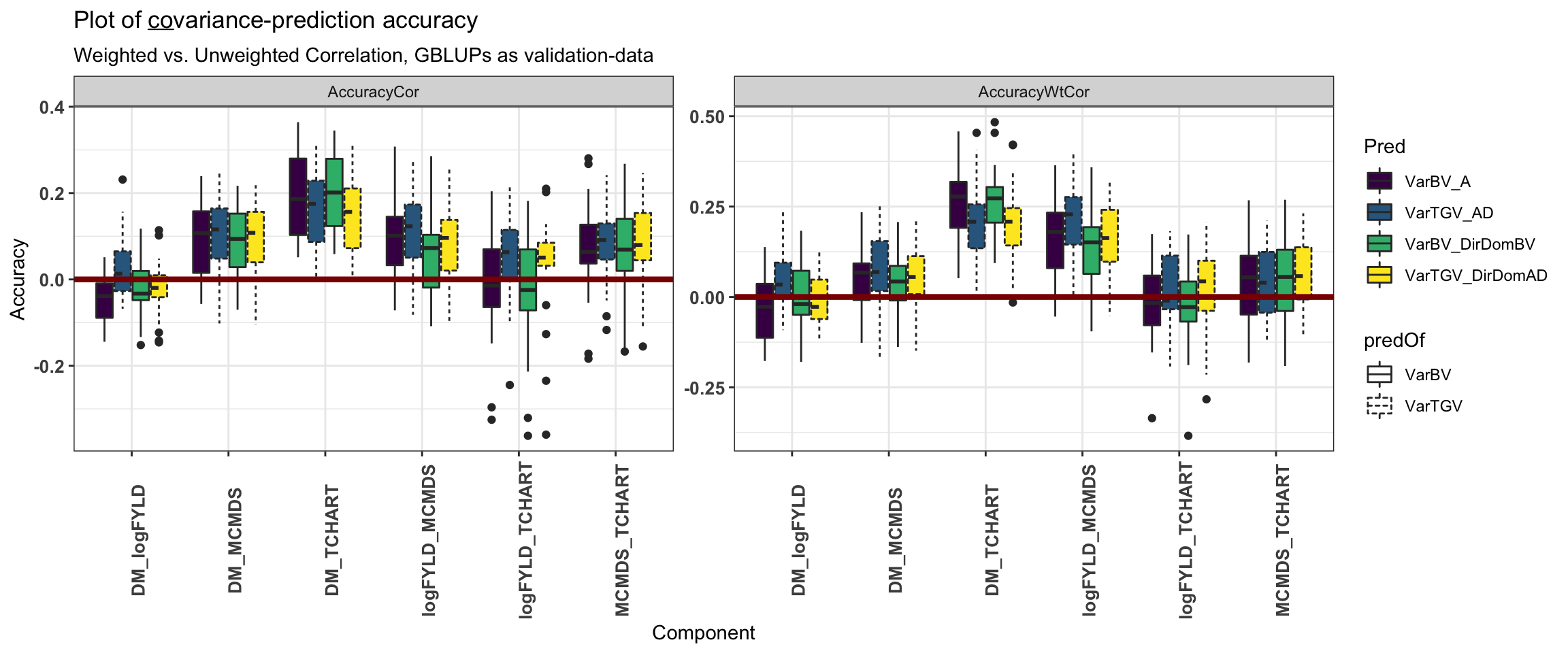

The two sets of boxplots (variances and covariances) above suggest that we would reach similar but less strong conclusions above the difference between prediction models and variance components. Moreover, we would reach similar conclusions about which trait variances and trait-trait covariances are best or worst predicted. We consider for our primary conclusions, the prediction accuracy with GBLUP-derived validation data.

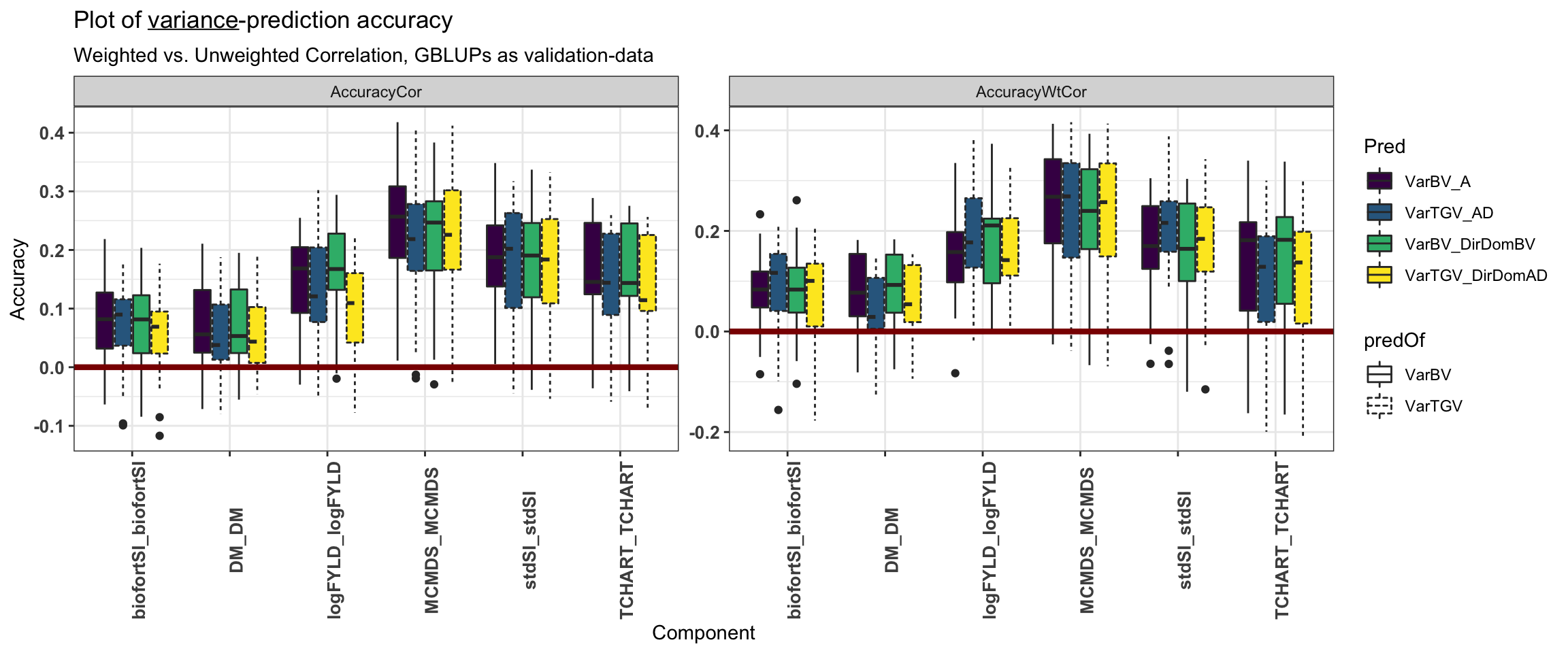

What difference does the weighted correlation make?

For variances (but not means), we chose to weight prediction-observation pairs according to the number of family members (GBLUPs-as-validation) or the number of observed non-missing iid-BLUPs per family per trait (iidBLUPs-as-validation) when computing prediction accuracies. The weighted correlations should be justified because large families (or heavily phenotyped ones) should have better-estimated variances than small ones. Below, we consider briefly the effect weighted correlations have on results.

accVars %>%

group_by(VarMethod,ValidationData,predOf,Model) %>%

summarize(corWT_vs_noWT=cor(AccuracyWtCor,AccuracyCor)) %$% summary(corWT_vs_noWT) Min. 1st Qu. Median Mean 3rd Qu. Max.

0.7844 0.8261 0.8727 0.8674 0.9039 0.9396 accVars %>%

group_by(VarMethod,ValidationData,predOf,Model,Trait1,Trait2) %>%

summarize(corWT_vs_noWT=cor(AccuracyWtCor,AccuracyCor)) %$% summary(corWT_vs_noWT) Min. 1st Qu. Median Mean 3rd Qu. Max.

0.5549 0.7381 0.8107 0.7935 0.8627 0.9188 We found that the weighted-vs-unweighted accuracies are themselves similar highly correlated (mean cor. 0.87) across traits, varcomps, models and validation-data types.

accVars %>%

mutate(diffAcc=AccuracyWtCor-AccuracyCor) %$% summary(diffAcc) Min. 1st Qu. Median Mean 3rd Qu. Max.

-0.21630 -0.03242 0.01519 0.01500 0.06172 0.22821 Definitely not a consistent increase or decrease in accuracy according to weighting.

accVars %>%

mutate(diffAcc=AccuracyWtCor-AccuracyCor) %>%

group_by(VarMethod,ValidationData,predOf,Model) %>%

summarize(meanDiffAcc_WT_vs_noWT=mean(diffAcc))# A tibble: 16 x 5

# Groups: VarMethod, ValidationData, predOf [8]

VarMethod ValidationData predOf Model meanDiffAcc_WT_vs_noWT

<chr> <chr> <chr> <chr> <dbl>

1 PMV GBLUPs VarBV A 0.00656

2 PMV GBLUPs VarBV DirDomBV 0.00817

3 PMV GBLUPs VarTGV AD 0.0135

4 PMV GBLUPs VarTGV DirDomAD 0.0121

5 PMV iidBLUPs VarBV A 0.00567

6 PMV iidBLUPs VarBV DirDomBV 0.00610

7 PMV iidBLUPs VarTGV AD 0.00880

8 PMV iidBLUPs VarTGV DirDomAD 0.00716

9 VPM GBLUPs VarBV A 0.0287

10 VPM GBLUPs VarBV DirDomBV 0.0260

11 VPM GBLUPs VarTGV AD 0.0334

12 VPM GBLUPs VarTGV DirDomAD 0.0350

13 VPM iidBLUPs VarBV A 0.0111

14 VPM iidBLUPs VarBV DirDomBV 0.00975

15 VPM iidBLUPs VarTGV AD 0.0140

16 VPM iidBLUPs VarTGV DirDomAD 0.0140 Across varcomps, models, validation-data and var. methods (PMV vs. VPM), very close to mean 0 diff. b/t WT and no WT, but generally WT>noWT.

accVars %>%

filter(VarMethod=="PMV",ValidationData=="GBLUPs") %>%

mutate(Pred=paste0(predOf,"_",Model),

Pred=factor(Pred,levels=c("VarBV_A","VarTGV_AD","VarBV_DirDomBV","VarTGV_DirDomAD")),

diffAcc=AccuracyWtCor-AccuracyCor,

Component=paste0(Trait1,"_",Trait2)) %>%

group_by(Pred,Component) %>%

summarize(meanDiffAcc_WT_vs_noWT=round(mean(diffAcc),3)) %>%

spread(Pred,meanDiffAcc_WT_vs_noWT)# A tibble: 12 x 5

Component VarBV_A VarTGV_AD VarBV_DirDomBV VarTGV_DirDomAD

<chr> <dbl> <dbl> <dbl> <dbl>

1 biofortSI_biofortSI -0.005 0.023 0.004 0.014

2 DM_DM 0.014 -0.016 0.018 -0.004

3 DM_logFYLD 0.014 0.019 0.013 0.015

4 DM_MCMDS -0.031 -0.024 -0.043 -0.029

5 DM_TCHART 0.065 0.049 0.062 0.057

6 logFYLD_logFYLD 0.002 0.056 0.006 0.052

7 logFYLD_MCMDS 0.065 0.101 0.066 0.078

8 logFYLD_TCHART -0.007 -0.034 0.006 -0.012

9 MCMDS_MCMDS 0.007 0.033 0.01 0.02

10 MCMDS_TCHART -0.028 -0.041 -0.027 -0.032

11 stdSI_stdSI -0.011 0.02 -0.008 0

12 TCHART_TCHART -0.009 -0.023 -0.007 -0.015Considering the boxplots below, conclusions appear to be at least qualitatively similar whether or not weighted correlations are considered as measures of accuracy.

forplot<-accVars %>%

filter(VarMethod=="PMV",ValidationData=="GBLUPs") %>%

pivot_longer(cols=contains("Cor"),names_to = "WT_or_NoWT", values_to = "Accuracy") %>%

mutate(Pred=paste0(predOf,"_",Model),

Pred=factor(Pred,levels=c("VarBV_A","VarTGV_AD","VarBV_DirDomBV","VarTGV_DirDomAD")),

Trait1=factor(Trait1,levels=c("stdSI","biofortSI","DM","logFYLD","MCMDS","TCHART")),

Trait2=factor(Trait2,levels=c("stdSI","biofortSI","DM","logFYLD","MCMDS","TCHART")),

Component=paste0(Trait1,"_",Trait2),

predOf=factor(predOf,levels=c("VarBV","VarTGV")),

Model=factor(Model,levels=c("A","AD","DirDomBV","DirDomAD")),

RepFold=paste0(Repeat,"_",Fold,"_",Component))

forplot %>%

filter(Trait1==Trait2) %>%

ggplot(.,aes(x=Component,y=Accuracy,fill=Pred,linetype=predOf)) +

geom_boxplot() + theme_bw() + scale_fill_viridis_d() +

geom_hline(yintercept = 0, color='darkred', size=1.5) +

theme(axis.text.x = element_text(face='bold', size=10, angle=90),

axis.text.y = element_text(face='bold', size=10)) +

facet_wrap(~WT_or_NoWT,scales='free') +

ggtitle(expression(paste("Plot of ", underline(variance), "-prediction accuracy"))) +

labs(subtitle="Weighted vs. Unweighted Correlation, GBLUPs as validation-data")

| Version | Author | Date |

|---|---|---|

| 22e6c87 | wolfemd | 2021-01-03 |

forplot %>%

filter(Trait1!=Trait2) %>%

ggplot(.,aes(x=Component,y=Accuracy,fill=Pred,linetype=predOf)) +

geom_boxplot() + theme_bw() + scale_fill_viridis_d() +

geom_hline(yintercept = 0, color='darkred', size=1.5) +

theme(axis.text.x = element_text(face='bold', size=10, angle=90),

axis.text.y = element_text(face='bold', size=10)) +

facet_wrap(~WT_or_NoWT,scales='free') +

ggtitle(expression(paste("Plot of ", underline(co), "variance-prediction accuracy"))) +

labs(subtitle="Weighted vs. Unweighted Correlation, GBLUPs as validation-data")

Compare models

We consider the accuracy of predicting variances (and subsequently also usefulness criteria) using the “PMV” variance method, GBLUPs as validation-data and family-size-weighted correlations.

library(tidyverse); library(magrittr);

## Table S11: Accuracies predicting the variances

accVars<-readxl::read_xlsx(here::here("manuscript","SupplementaryTables.xlsx"),sheet = "TableS11")accVars %>%

filter(ValidationData=="GBLUPs",VarMethod=="PMV") %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom"),

Component=ifelse(Trait1==Trait2,"Variance","Covariance"),

TraitType=ifelse(grepl("SI",Trait1),"SI","ComponentTrait")) %>%

group_by(Component) %>%

summarize(meanAcc=round(mean(AccuracyWtCor),3),

sdAcc=round(sd(AccuracyWtCor),3))# A tibble: 2 x 3

Component meanAcc sdAcc

<chr> <dbl> <dbl>

1 Covariance 0.085 0.135

2 Variance 0.143 0.119Most variance prediction accuracy estimates were positive, with a mean weighted correlation of 0.14. Mean accuracy for covariance prediction was less (0.09).

accVars %>%

filter(ValidationData=="GBLUPs",VarMethod=="PMV") %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom"),

Component=ifelse(Trait1==Trait2,"Variance","Covariance"),

TraitType=ifelse(grepl("SI",Trait1),"SI","ComponentTrait")) %>%

group_by(Component,TraitType,Trait1,Trait2) %>%

summarize(meanAcc=round(mean(AccuracyWtCor),3)) %>% arrange(desc(meanAcc))# A tibble: 12 x 5

# Groups: Component, TraitType, Trait1 [9]

Component TraitType Trait1 Trait2 meanAcc

<chr> <chr> <chr> <chr> <dbl>

1 Covariance ComponentTrait DM TCHART 0.236

2 Variance ComponentTrait MCMDS MCMDS 0.235

3 Variance SI stdSI stdSI 0.177

4 Covariance ComponentTrait logFYLD MCMDS 0.168

5 Variance ComponentTrait logFYLD logFYLD 0.167

6 Variance ComponentTrait TCHART TCHART 0.131

7 Variance SI biofortSI biofortSI 0.079

8 Variance ComponentTrait DM DM 0.066

9 Covariance ComponentTrait DM MCMDS 0.059

10 Covariance ComponentTrait MCMDS TCHART 0.047

11 Covariance ComponentTrait logFYLD TCHART 0.002

12 Covariance ComponentTrait DM logFYLD -0.001In contrast to results for predicting family means, the most accurately predicted trait-variances were MCMDS (mean acc. 0.24) and logFYLD (mean acc. 0.17) while Var(DM), for example, had among the lowest accuracies at 0.07). Interestingly, the DM-TCHART covariance was the most well predicted component (mean acc. 0.24). Accuracy for the selection index variances were intermediate (mean stdSI = 0.18, mean biofortSI = 0.08) compared to the component traits. Like the accuracy for means on the SI’s, accuracy for variance corresponding to the accuracy of the component traits. In contrast to predicting cross means on SI’s, for variances, the stdSI > biofortSI. This makes sense as the stdSI emphasized logFYLD and MCMDS, which are better predicted than DM, TCHART and related covariances.

accVars %>%

filter(ValidationData=="GBLUPs",VarMethod=="PMV") %>%

group_by(Model,predOf) %>%

summarize(meanAcc=mean(AccuracyWtCor)) %>%

mutate_if(is.numeric,~round(.,3)) %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom")) %>%

spread(predOf,meanAcc) %>%

mutate(diffAcc=VarTGV-VarBV)# A tibble: 2 x 4

# Groups: Model [2]

Model VarBV VarTGV diffAcc

<chr> <dbl> <dbl> <dbl>

1 ClassicAD 0.112 0.124 0.0120

2 DirDom 0.11 0.108 -0.002 There were, overall, only small differences in accuracy between prediction models (ClassicAD and DirDom) and var. components (VarBV, VarTGV). On average, across trait variances and covariances, the accuracy of predicting family-(co)variance in TGV were higher (than predicting VarBV) by 0.01 for the ClassicAD but lower by -0.002 for the DirDom model.

VarTGV: ClassicAD was best for most components.

VarBV: DirDom was best for most components.

# Interesting differences between accuracy VarBV vs. VarTGV?

accVars %>%

filter(ValidationData=="GBLUPs",VarMethod=="PMV") %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom"),

Component=ifelse(Trait1==Trait2,"Variance","Covariance"),

Trait=paste0(Trait1,"_",Trait2)) %>%

group_by(Model,predOf,Component,Trait,Trait1,Trait2) %>%

summarize(meanAcc=round(mean(AccuracyWtCor),3)) %>%

spread(predOf,meanAcc) %>%

mutate(diffAcc=VarTGV-VarBV) %>% arrange(Model,desc(diffAcc))# A tibble: 24 x 8

# Groups: Model, Component, Trait, Trait1 [24]

Model Component Trait Trait1 Trait2 VarBV VarTGV diffAcc

<chr> <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl>

1 ClassicAD Covariance DM_logFYLD DM logFYLD -0.036 0.048 0.0840

2 ClassicAD Covariance logFYLD_MCMDS logFYLD MCMDS 0.166 0.213 0.0470

3 ClassicAD Variance logFYLD_logFYLD logFYLD logFYLD 0.147 0.193 0.046

4 ClassicAD Covariance logFYLD_TCHART logFYLD TCHART -0.016 0.024 0.04

5 ClassicAD Variance stdSI_stdSI stdSI stdSI 0.172 0.197 0.025

6 ClassicAD Covariance DM_MCMDS DM MCMDS 0.059 0.079 0.02

7 ClassicAD Variance biofortSI_biof… biofort… biofort… 0.076 0.09 0.0140

8 ClassicAD Covariance MCMDS_TCHART MCMDS TCHART 0.044 0.042 -0.00200

9 ClassicAD Variance MCMDS_MCMDS MCMDS MCMDS 0.243 0.239 -0.004

10 ClassicAD Variance TCHART_TCHART TCHART TCHART 0.147 0.113 -0.0340

# … with 14 more rowsInteresting differences between accuracy VarBV vs. VarTGV?

The largest increases (diffAcc = accVarTGV - accVarBV) were logFYLD-MCMDS (0.065) and logFYLD-TCHART (0.053) followed by the stdSI variance (0.04), all for the ClassicAD model. VarTGV was better predicted than VarBV for yield in the ClassicAD model (0.03), but decreased sharply (-0.08) for the DirDom model.

# Interesting differences between accuracy DirDom vs. ClassicAD?

accVars %>%

filter(ValidationData=="GBLUPs",VarMethod=="PMV") %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom"),

Component=ifelse(Trait1==Trait2,"Variance","Covariance"),

Trait=paste0(Trait1,"_",Trait2)) %>%

group_by(Model,predOf,Component,Trait,Trait1,Trait2) %>%

summarize(meanAcc=round(mean(AccuracyWtCor),3)) %>%

spread(Model,meanAcc) %>%

mutate(diffAcc=DirDom-ClassicAD) %>% arrange(predOf,desc(diffAcc))# A tibble: 24 x 8

# Groups: predOf, Component, Trait, Trait1 [24]

predOf Component Trait Trait1 Trait2 ClassicAD DirDom diffAcc

<chr> <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl>

1 VarBV Covariance DM_logFYLD DM logFYLD -0.036 -0.008 0.0280

2 VarBV Variance logFYLD_logFYLD logFYLD logFYLD 0.147 0.171 0.024

3 VarBV Covariance DM_TCHART DM TCHART 0.261 0.266 0.005

4 VarBV Variance DM_DM DM DM 0.082 0.087 0.00500

5 VarBV Covariance MCMDS_TCHART MCMDS TCHART 0.044 0.046 0.002

6 VarBV Variance biofortSI_biof… biofort… biofort… 0.076 0.078 0.002

7 VarBV Variance TCHART_TCHART TCHART TCHART 0.147 0.146 -0.001

8 VarBV Covariance logFYLD_TCHART logFYLD TCHART -0.016 -0.02 -0.004

9 VarBV Variance stdSI_stdSI stdSI stdSI 0.172 0.163 -0.00900

10 VarBV Variance MCMDS_MCMDS MCMDS MCMDS 0.243 0.224 -0.0190

# … with 14 more rowsFocusing next on differences between the DirDom and ClassicAD models (diffAcc = DirDom - ClassicAD).

logFYLD and logFYLD-TCHART variances and covariances for BVs were up by 0.1 for DirDom. In contrast, both of these components for TGV were down (-0.01 logFYLD, -0.05 logFYLD-TCHART).

accVars %>%

filter(ValidationData=="GBLUPs",VarMethod=="PMV", grepl("SI",Trait1)) %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom"),

Component=ifelse(Trait1==Trait2,"Variance","Covariance"),

Trait=paste0(Trait1,"_",Trait2)) %>%

group_by(Trait,Trait1,Trait2) %>%

summarize(meanAcc=round(mean(AccuracyWtCor),3)) %>% ungroup() # A tibble: 2 x 4

Trait Trait1 Trait2 meanAcc

<chr> <chr> <chr> <dbl>

1 biofortSI_biofortSI biofortSI biofortSI 0.079

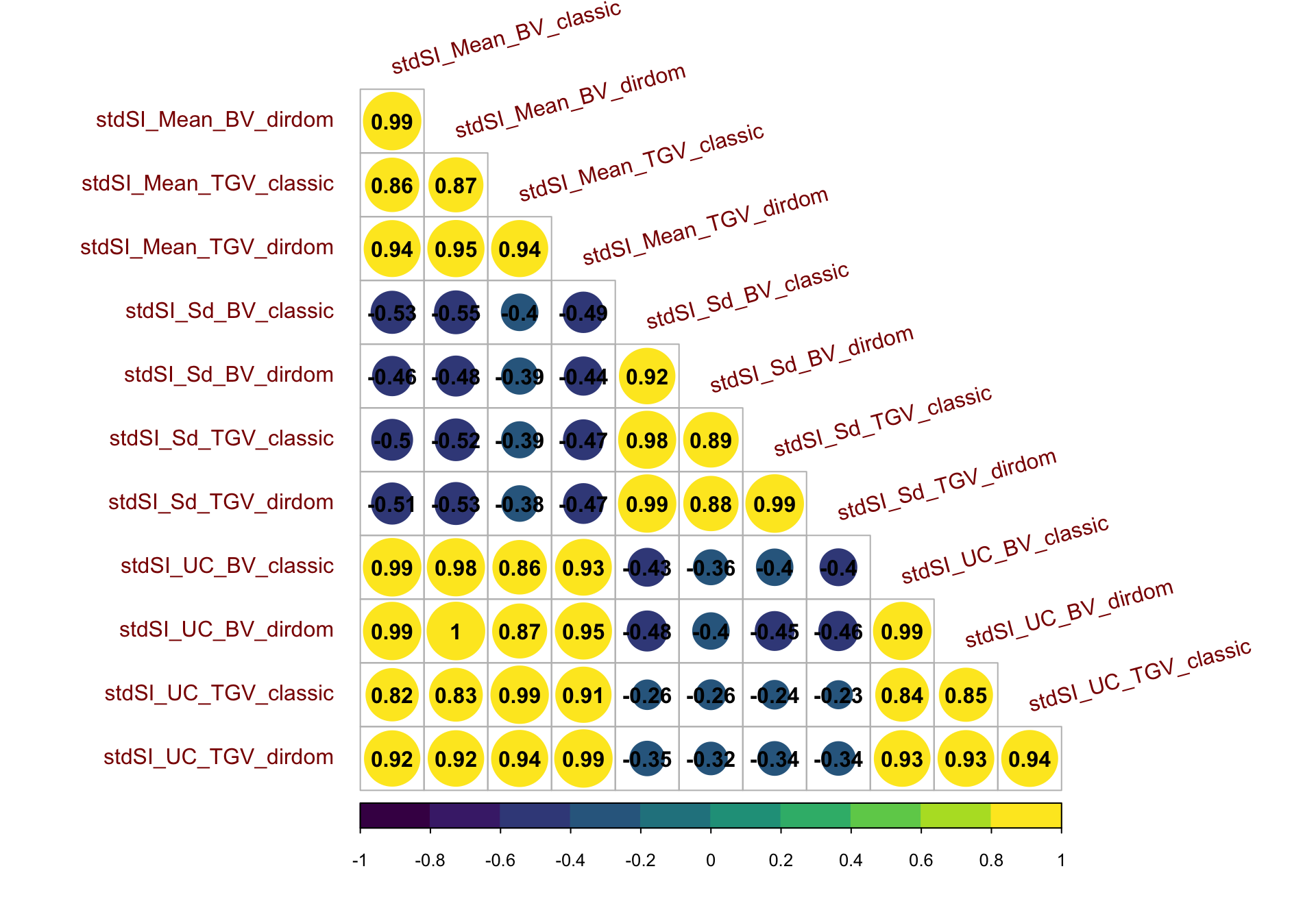

2 stdSI_stdSI stdSI stdSI 0.177Regarding the selection index variance accuracies:

On average, the accuracy for the StdSI was twice that of the biofortSI (0.18 vs. 0.08).

accVars %>%

filter(ValidationData=="GBLUPs",VarMethod=="PMV", grepl("SI",Trait1)) %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom"),

Component=ifelse(Trait1==Trait2,"Variance","Covariance"),

Trait=paste0(Trait1,"_",Trait2)) %>%

group_by(Model,predOf,Component,Trait,Trait1,Trait2) %>%

summarize(meanAcc=round(mean(AccuracyWtCor),3)) %>% ungroup() %>%

select(-Trait,-Trait2,-Component) %>% spread(predOf,meanAcc)# A tibble: 4 x 4

Model Trait1 VarBV VarTGV

<chr> <chr> <dbl> <dbl>

1 ClassicAD biofortSI 0.076 0.09

2 ClassicAD stdSI 0.172 0.197

3 DirDom biofortSI 0.078 0.07

4 DirDom stdSI 0.163 0.176For both models and both indices, VarTGV was better predicted than VarBV.

accVars %>%

filter(ValidationData=="GBLUPs",VarMethod=="PMV", grepl("SI",Trait1)) %>%

mutate(Model=ifelse(!grepl("DirDom",Model),"ClassicAD","DirDom"),

Component=ifelse(Trait1==Trait2,"Variance","Covariance"),

Trait=paste0(Trait1,"_",Trait2)) %>%

group_by(Model,predOf,Component,Trait,Trait1,Trait2) %>%

summarize(meanAcc=round(mean(AccuracyWtCor),3)) %>% ungroup() %>%

select(-Trait,-Trait2,-Component) %>% spread(Model,meanAcc)# A tibble: 4 x 4

predOf Trait1 ClassicAD DirDom

<chr> <chr> <dbl> <dbl>

1 VarBV biofortSI 0.076 0.078

2 VarBV stdSI 0.172 0.163

3 VarTGV biofortSI 0.09 0.07

4 VarTGV stdSI 0.197 0.176However, the DirDom model (compared to the ClassicAD model) increased accuracy slightly for both VarBV and VarTGV on the biofortSI, but decreased it on the StdSI.

Prediction of the UC

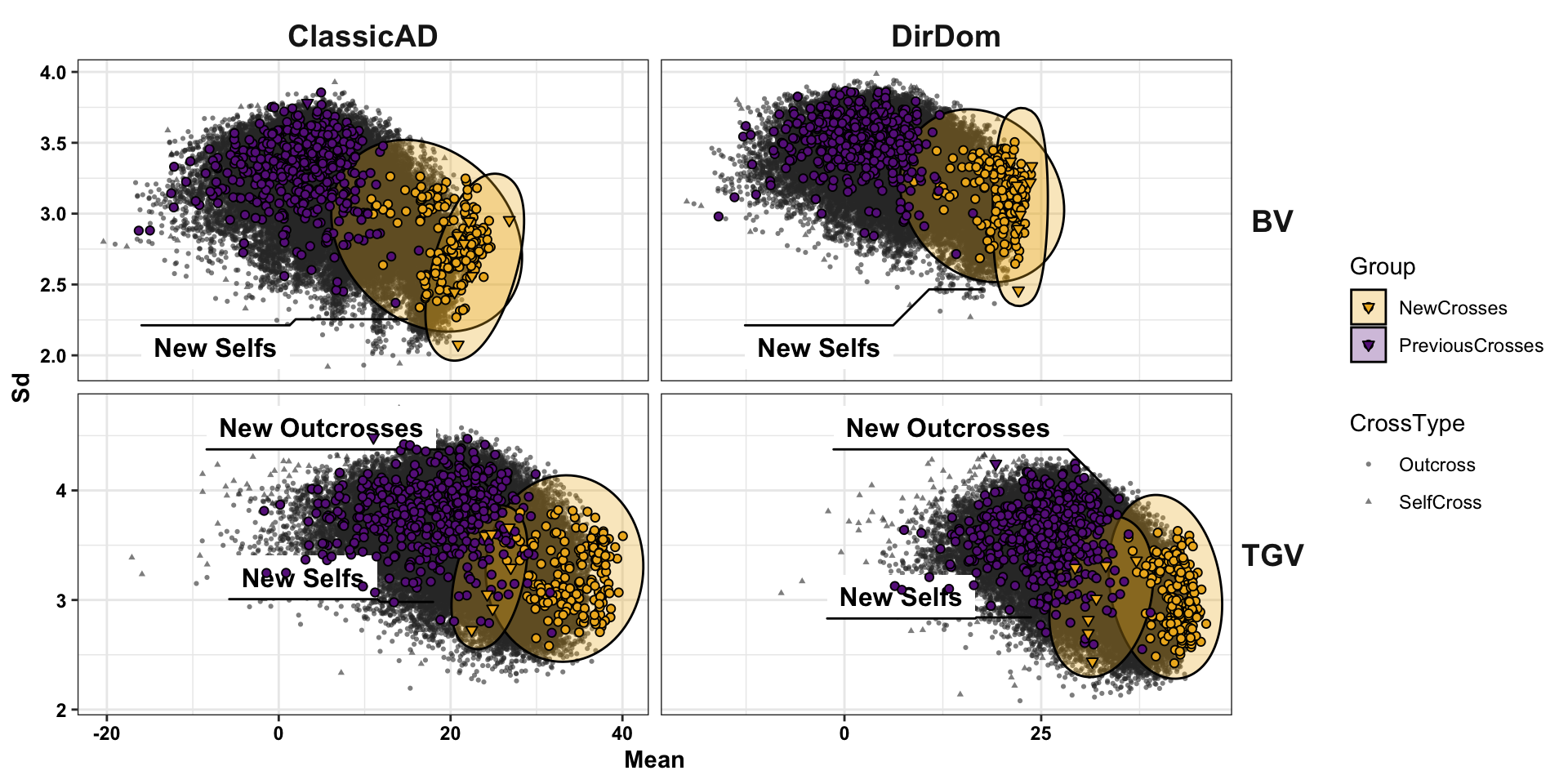

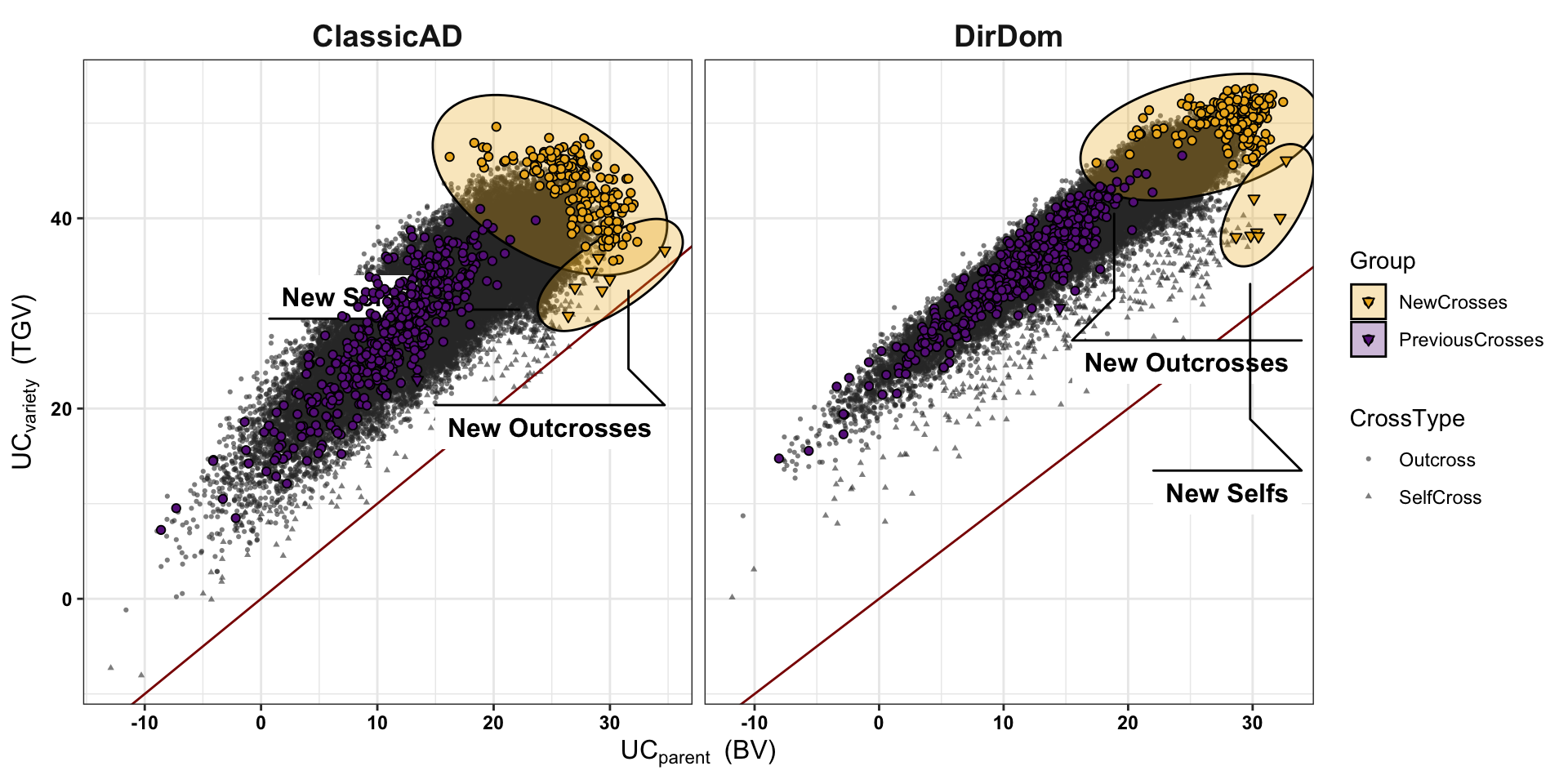

The usefulness criteria i.e. \(UC_{parent}\) and \(UC_{clone}\) are predicted by:

\[UC_{parent}=UC_{RS}=\mu_{BV} + (i_{RS} \times \sigma_{BV})\]

\[UC_{clone}=UC_{VDP}=\mu_{TGV} + (i_{VDP} \times \sigma_{TGV})\]

The observed (or realized) UC are the mean GEBV of family members who were themselves later used as parents.

In order to combined predicted means and variances into a UC, we first calculated the realized intensity of within-family selection (\(i_{RS}\) and \(i_{VDP}\)). For \(UC_{parent}\) we computed the \(i_{RS}\) based on the proportion of progeny from each family, that themselves later appeared in the pedigree as parents. For \(UC_{clone}\) we compute computed \(i_{VDP}\) based on the proportion of family-members that had at least one plot at each VDP stage (CET, PYT, AYT, UYT).

Below, we plot the proportion of each family selected (A) and the selection intensity (B) for each stage.

library(tidyverse); library(magrittr);

## Table S13: Realized within-cross selection metrics

crossmetrics<-readxl::read_xlsx(here::here("manuscript","SupplementaryTables.xlsx"),sheet = "TableS13")library(patchwork)

propPast<-crossmetrics %>%

mutate(Cycle=ifelse(!grepl("TMS13|TMS14|TMS15",sireID) & !grepl("TMS13|TMS14|TMS15",damID),"C0",

ifelse(grepl("TMS13",sireID) | grepl("TMS13",damID),"C1",

ifelse(grepl("TMS14",sireID) | grepl("TMS14",damID),"C2",

ifelse(grepl("TMS15",sireID) | grepl("TMS15",damID),"C3","mixed"))))) %>%

select(Cycle,starts_with("prop")) %>%

pivot_longer(cols = contains("prop"),values_to = "PropPast",names_to = "StagePast",names_prefix = "propPast|prop") %>%

rename(DescendentsOfCycle=Cycle) %>%

mutate(StagePast=gsub("UsedAs","",StagePast),

StagePast=factor(StagePast,levels=c("Parent","Phenotyped","CET","PYT","AYT"))) %>%

ggplot(.,aes(x=StagePast,y=PropPast,fill=DescendentsOfCycle)) +

geom_boxplot(position = 'dodge2',color='black') +

theme_bw() + scale_fill_viridis_d() + labs(y="Proportion of Family Selected") +

theme(legend.position = 'none')

realIntensity<-crossmetrics %>%

mutate(Cycle=ifelse(!grepl("TMS13|TMS14|TMS15",sireID) & !grepl("TMS13|TMS14|TMS15",damID),"C0",

ifelse(grepl("TMS13",sireID) | grepl("TMS13",damID),"C1",

ifelse(grepl("TMS14",sireID) | grepl("TMS14",damID),"C2",

ifelse(grepl("TMS15",sireID) | grepl("TMS15",damID),"C3","mixed"))))) %>%

select(Cycle,sireID,damID,contains("realIntensity")) %>%

pivot_longer(cols = contains("realIntensity"),names_to = "Stage", values_to = "Intensity",names_prefix = "realIntensity") %>%

rename(DescendentsOfCycle=Cycle) %>%

distinct %>% ungroup() %>%

mutate(Stage=factor(Stage,levels=c("Parent","CET","PYT","AYT","UYT"))) %>%

ggplot(.,aes(x=Stage,y=Intensity,fill=DescendentsOfCycle)) +

geom_boxplot(position = 'dodge2',color='black') +

theme_bw() + scale_fill_viridis_d() + labs(y="Stadardized Selection Intensity")

propPast + realIntensity +

plot_annotation(tag_levels = 'A',

title = 'Realized selection intensities: measuring post-cross selection') &

theme(plot.title = element_text(size = 14, face='bold'),

plot.tag = element_text(size = 13, face='bold'),

strip.text.x = element_text(size=11, face='bold'))

| Version | Author | Date |

|---|---|---|

| 22e6c87 | wolfemd | 2021-01-03 |